Did you ever wish to physically depict the picture you have in your imagination? To be able to present it to other people not through the description, hoping that they will correctly decipher what you mean and reproduce it in their own heads, but directly – as if taking it from your imagination and putting it in front of everyone to see? Or maybe to just see the picture in your mind more clearly, to see it through real eyes, not just the figurative eyes of the imagination?

The technology that reads minds might still be beyond our reach, but in the meantime there’s an interesting alternative available. We are now living in an era of Artificial Intelligence models capable of generating high-quality images based on textual description alone. And this technology is free, open source, and available to everybody.

However, how did we get to this point? This article presents a brief history of image synthesis, providing a bird’s eye view of the key developments leading up to the current state.

Generative Adversarial Networks

Although the earliest attempts at generating images using AI date back to the 1970s, for decades there was little progress in that field. The available computing power and amount of data were limited, and the algorithms were too simple and rigid to handle more complex and realistic images. However, this situation began to change with the rise of deep learning and convolutional neural networks, which in turn provided the foundation for Generative Adversarial Networks (GAN).

GANs marked a significant breakthrough in the field of AI-generated images. This neural network architecture was developed in 2014 by Ian Goodfellow and his colleagues at the University of Montreal. It consists of two neural networks: a generator and a discriminator, trained in alternating periods. The generator network learns to generate synthetic data that mimics the distribution of real data, while the discriminator network learns to distinguish between the synthetic data generated by the generator and the real data.

The training process is iterative, with the generator attempting to produce synthetic data that can fool the discriminator, and the discriminator improving its ability to distinguish between real and fake data.
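
The two competing objectives can be sketched in a few lines of NumPy. This is a minimal illustration, assuming the commonly used binary cross-entropy formulation; the function names and the sample discriminator outputs are hypothetical and are not taken from the original GAN code.

```python
import numpy as np

def bce(pred, target):
    """Binary cross-entropy between predicted probabilities and 0/1 targets."""
    pred = np.clip(pred, 1e-7, 1 - 1e-7)  # avoid log(0)
    return float(-np.mean(target * np.log(pred) + (1 - target) * np.log(1 - pred)))

def discriminator_loss(d_real, d_fake):
    # The discriminator wants real samples scored as 1 and generated samples as 0.
    return bce(d_real, np.ones_like(d_real)) + bce(d_fake, np.zeros_like(d_fake))

def generator_loss(d_fake):
    # The generator wants its samples to be scored as real (non-saturating form).
    return bce(d_fake, np.ones_like(d_fake))

# Hypothetical discriminator outputs: the probability that each sample is real.
d_real = np.array([0.9, 0.8, 0.95])
d_fake = np.array([0.1, 0.3, 0.2])
print("discriminator loss:", discriminator_loss(d_real, d_fake))
print("generator loss:", generator_loss(d_fake))
```

In an actual training loop, the two losses would be minimized in alternation, each one updating only its own network.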

The end result is a generator that can produce synthetic data that is visually or audibly indistinguishable from real data. This general approach can be used not only for image synthesis but also for other tasks, such as style transfer or data augmentation, and can be applied outside the visual domain, for example, in audio generation, like synthesizing music.

GANs have proven successful in generating high-quality pictures and have been used in a variety of applications, such as generating images of faces, landscapes, and objects. Moreover, GANs have been adapted for other tasks, including generating detailed images from rough sketches, transferring the style of one image to another, or replacing fragments of an image with a desired object.

Limitations of GAN technology

However, while GANs have demonstrated impressive results in generating realistic images, there are several limitations to this technology. One of these limitations is the unstable nature of the training process. The adversarial structure of the network can lead to mode collapse, where the generator network produces only a limited set of images that do not cover the entire range of possible outcomes, resulting in a lack of diversity in the output images.

Overfitting is another issue that GANs can be particularly susceptible to: the generator network memorizes the training dataset instead of generalizing from it to produce new images. Additionally, GANs can struggle to generate high-resolution images due to the computational complexity involved, often requiring a significant amount of time and computational power to train.

On top of that, while there are examples of text-to-image versions of GANs or models that do image-to-image translation, most implementations provide limited control over the generated output, making it challenging to produce specific objects or change the style of generated images without significant additional training or manipulation.

DALL-E

To overcome these limitations, researchers have continued to explore new techniques and architectures for generating images. One such example is DALL-E, developed by OpenAI and released on January 5, 2021. The model used a Generative Pre-trained Transformer (GPT), which in turn is based on the earlier Transformer architecture. Both were originally developed for use in natural language processing.

The Transformer model is a neural network architecture based on a self-attention mechanism. Earlier sequence models, such as recurrent neural networks, process input elements one at a time, which makes long-range dependencies difficult to model. The attention mechanism allows the model to selectively focus on different parts of the input sequence, enabling it to capture complex relationships between the words.
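
As a rough illustration of self-attention, the sketch below implements single-head scaled dot-product attention in NumPy. The random token vectors and projection matrices are placeholders (a real Transformer learns the projections and uses several heads), so treat it as a sketch of the mechanism rather than a faithful reimplementation.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over a sequence x of shape (n, d)."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])  # how strongly each token attends to every other
    weights = softmax(scores, axis=-1)       # each row sums to 1
    return weights @ v, weights

rng = np.random.default_rng(0)
tokens = rng.normal(size=(4, 8))  # 4 hypothetical token embeddings of size 8
w_q, w_k, w_v = (rng.normal(size=(8, 8)) for _ in range(3))
output, weights = self_attention(tokens, w_q, w_k, w_v)
print(output.shape, weights.shape)
```

Every output row is a weighted mix of all value vectors, which is what lets a token draw on distant parts of the sequence in a single step.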

The Transformer model consists of an encoder and a decoder, both of which are composed of multiple layers of self-attention and feedforward neural networks. It is highly parallelizable and has achieved state-of-the-art results in many Natural Language Processing tasks.

Based on that architecture, Generative Pre-trained Transformers were originally developed by OpenAI in 2018. GPT works by training a large neural network on massive amounts of text data, such as books, articles, and websites. The model uses a process called unsupervised learning to identify patterns and relationships in the data, without being explicitly told what the patterns are.

Once the model is trained, it can be used to generate new text by predicting the next word or sequence of words based on the context of the previous words in the sentence. This is done using a process called autoregression, where the model generates one word at a time, based on the probability distribution of the next word given the previous words.
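
The autoregressive loop can be sketched with a toy example. The vocabulary and the probability table below are invented for illustration, and the toy conditions only on the single previous word, whereas GPT conditions on the whole preceding context.

```python
import numpy as np

vocab = ["a", "cat", "sat", "down", "<end>"]

# Hypothetical next-word probabilities P(next | previous); each row sums to 1.
next_word_probs = np.array([
    [0.0, 0.9, 0.0, 0.0, 0.1],  # after "a"
    [0.0, 0.0, 0.9, 0.0, 0.1],  # after "cat"
    [0.1, 0.0, 0.0, 0.8, 0.1],  # after "sat"
    [0.0, 0.0, 0.0, 0.0, 1.0],  # after "down"
    [0.0, 0.0, 0.0, 0.0, 1.0],  # after "<end>"
])

def generate(start, max_words=10, seed=0):
    """Sample one word at a time from the distribution given the previous word."""
    rng = np.random.default_rng(seed)
    words = [start]
    while len(words) < max_words and words[-1] != "<end>":
        probs = next_word_probs[vocab.index(words[-1])]
        words.append(str(rng.choice(vocab, p=probs)))
    return words

print(" ".join(generate("a")))
```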

The original GPT was scaled up in 2019 and then again in 2020, resulting in GPT-3 with 175 billion parameters. This model became the foundation for DALL-E, which uses a 12-billion-parameter multimodal version of it that swaps text for pixels and was trained on text-image pairs from the Internet.

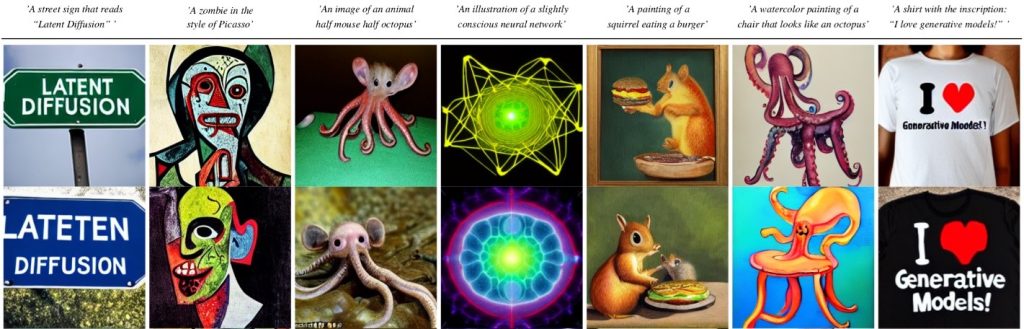

The DALL-E model has shown outstanding results in multiple image generation tasks based on textual inputs. Besides simply generating sample pictures of various objects it has seen during training, the model can integrate different ideas and blend unrelated concepts convincingly, even generating objects that are unlikely to exist in the physical world.

CLIP

Another important step in AI image synthesis, released alongside DALL-E, was a technique called Contrastive Language-Image Pre-training. CLIP is a model trained on 400 million pairs of text captions and images scraped from the Internet. It involves training a neural network model on both image and text data, with the goal of enabling the model to understand the relationship between the two modalities – how textual descriptions relate to the visual content of images.

During training, the model learns to encode the image and text inputs into a common vector space, where the similarity between the image and its corresponding text description is maximized, while the similarity between images and other irrelevant texts is minimized. This is done through a contrastive loss function that encourages the model to pull together image and text vectors that belong to the same image-caption pair and push apart the image and text vectors that do not belong to the same pair.
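
A contrastive objective of this kind can be sketched as a symmetric cross-entropy over a matrix of image-text similarities. The snippet is a simplified illustration and not CLIP's actual training code: the embeddings are made up, and the temperature value of 0.07 is an assumption.

```python
import numpy as np

def log_softmax(x, axis):
    x = x - x.max(axis=axis, keepdims=True)
    return x - np.log(np.exp(x).sum(axis=axis, keepdims=True))

def contrastive_loss(image_emb, text_emb, temperature=0.07):
    """Symmetric contrastive loss; row i of each matrix is assumed to be a matching pair."""
    image_emb = image_emb / np.linalg.norm(image_emb, axis=1, keepdims=True)
    text_emb = text_emb / np.linalg.norm(text_emb, axis=1, keepdims=True)
    logits = image_emb @ text_emb.T / temperature  # cosine similarities, rescaled
    idx = np.arange(len(logits))
    loss_image_to_text = -log_softmax(logits, axis=1)[idx, idx].mean()
    loss_text_to_image = -log_softmax(logits, axis=0)[idx, idx].mean()
    return float((loss_image_to_text + loss_text_to_image) / 2)

# Hypothetical embeddings: perfectly aligned pairs versus deliberately mismatched pairs.
emb = np.eye(4)
print("aligned:", contrastive_loss(emb, emb))
print("mismatched:", contrastive_loss(emb, emb[[1, 2, 3, 0]]))
```

The loss is low when each image is most similar to its own caption and high when the pairs are mismatched, which is the pull-together, push-apart behavior described above.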

In DALL-E, CLIP was used to rank generated images by how well they match the caption, in order to filter the initial set of pictures and select the most appropriate outputs. Since then, CLIP has become a popular element in various image synthesis solutions, serving as a filter and a guide in the models’ image generation process.
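
The ranking step can be pictured as sorting candidate images by the cosine similarity between their embeddings and the embedding of the caption. The helper name and the two-dimensional embeddings below are hypothetical stand-ins for real CLIP outputs.

```python
import numpy as np

def rank_by_similarity(text_emb, image_embs):
    """Return candidate indices sorted from best to worst match, with their scores."""
    text_emb = text_emb / np.linalg.norm(text_emb)
    image_embs = image_embs / np.linalg.norm(image_embs, axis=1, keepdims=True)
    scores = image_embs @ text_emb  # cosine similarity of each image to the caption
    order = np.argsort(-scores)     # highest score first
    return order, scores[order]

# Hypothetical embeddings for one caption and three generated images.
caption = np.array([1.0, 0.0])
candidates = np.array([[0.0, 1.0], [1.0, 0.0], [1.0, 1.0]])
order, scores = rank_by_similarity(caption, candidates)
print(order, scores)
```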

The first open-source solution to utilize this was DeepDaze. Developed in January 2021 by Phil Wang, it combined CLIP with an implicit neural representation network called Siren. DeepDaze gained popularity for its ability to produce visually stunning and surreal images that often resemble dreamlike landscapes or abstract art.

BigSleep

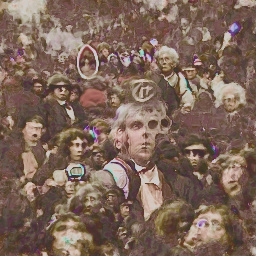

A few days later, the same developer, with the help of models released by researcher Ryan Murdock, developed another generative deep-learning model called BigSleep. The model works by combining CLIP with BigGAN, a system developed by researchers at Google that uses a variant of GAN architecture to generate high-resolution images from random noise vectors. BigSleep utilizes the outputs of BigGAN to find images that score high on CLIP. The model then gradually adjusts the noise input in BigGAN’s generator until the produced images match the given prompt.

According to Ryan Murdock, BigSleep was the first model that could generate a diverse range of concepts and objects with high quality at a resolution of 512 x 512 pixels. Previous work, although producing impressive results, had often been limited to lower-resolution images and more common objects.

VQGAN-CLIP

The BigSleep model inspired another CLIP-GAN combination. Just three months later, in April 2021, the VQGAN-CLIP model was developed by researcher Katherine Crowson. The VQGAN (Vector Quantized Generative Adversarial Network) is a variant of the Generative Adversarial Network architecture. It is capable of generating high-quality images by encoding images as discrete codebook entries, allowing for more efficient training and better image quality compared to traditional GAN models.
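
The idea of encoding data as discrete codebook entries can be sketched as a nearest-neighbor lookup. In the snippet below the codebook and the input vectors are toy values; in a real VQGAN the codebook is learned and the vectors come from a convolutional encoder.

```python
import numpy as np

def quantize(vectors, codebook):
    """Replace each vector with its nearest codebook entry (squared Euclidean distance)."""
    distances = ((vectors[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=-1)
    indices = distances.argmin(axis=1)  # one discrete code per input vector
    return indices, codebook[indices]

# Hypothetical 3-entry codebook and three encoder outputs.
codebook = np.array([[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]])
vectors = np.array([[0.1, -0.1], [4.0, 6.0], [0.9, 1.2]])
indices, quantized = quantize(vectors, codebook)
print(indices)
print(quantized)
```

After quantization, an image is described by a grid of integer indices instead of continuous values.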

The VQGAN-CLIP uses the multimodal encoder of CLIP to evaluate the similarity of a (text, image) pair and feeds it back through the network to the latent space (i.e., the space of abstract image representations) of the VQGAN image generator. By iteratively repeating this process, the generated image candidate is adjusted until it becomes more similar to the target text.
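
The feedback loop shared by BigSleep and VQGAN-CLIP can be imitated with a toy setup. Here a random linear map stands in for the image generator combined with the CLIP image encoder, and a fixed vector stands in for the CLIP text embedding. Both are assumptions made purely for illustration; the real systems backpropagate through deep networks.

```python
import numpy as np

def optimize_latent(generator, text_emb, steps=300, lr=0.05, seed=0):
    """Gradient ascent on the cosine similarity between generator @ z and text_emb."""
    rng = np.random.default_rng(seed)
    z = rng.normal(size=generator.shape[1])  # random starting latent
    t = text_emb / np.linalg.norm(text_emb)
    history = []
    for _ in range(steps):
        f = generator @ z                    # toy "image embedding"
        norm_f = np.linalg.norm(f)
        score = f @ t / norm_f               # cosine similarity to the prompt
        history.append(float(score))
        grad_f = (t - score * f / norm_f) / norm_f  # d(score) / d(f)
        z = z + lr * generator.T @ grad_f           # chain rule back to the latent
    return z, history

rng = np.random.default_rng(1)
generator = rng.normal(size=(16, 8))         # hypothetical linear generator
text_emb = generator @ rng.normal(size=8)    # a prompt embedding the generator can reach
z, history = optimize_latent(generator, text_emb)
print("similarity before:", history[0], "after:", history[-1])
```

The latent code is nudged a little at every step so that the output scores higher against the prompt, which mirrors the iterative adjustment described above.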

The model allowed not only the generation of images but also provided the ability to perform manipulation on already existing images, by giving a textual description of the change to be made. This architecture has been an important development in text-to-image models, providing high-quality image generation and manipulation, semantic accuracy between text and image, and efficiency, even when generating unlikely content. Its open development and research approach has contributed to its fast real-world success, with non-authors quickly extending it to other modalities and commercial applications.

Diffusion Models

The line of research based on connecting CLIP with other architectures continued. In June 2021, the author behind VQGAN-CLIP released another work, combining the Contrastive Language-Image Pre-training model with the diffusion algorithm to create CLIP Guided Diffusion.

Diffusion algorithms are a family of probabilistic methods for picture generation, inspired by the behavior of particles diffusing through a medium. Image data can be turned into a simple noise distribution (typically Gaussian) by repeatedly adding random noise: the forward diffusion process slowly degrades the image structure until there is nothing left but random noise.
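
The forward (noising) process can be sketched in closed form. The snippet assumes a DDPM-style linear noise schedule with 1000 steps; the schedule values and the function name are illustrative assumptions, not taken from any specific model discussed here.

```python
import numpy as np

def add_noise(x0, t, betas, rng):
    """Jump directly to step t of the forward process for a clean signal x0."""
    alpha_bar = np.cumprod(1.0 - betas)[t]  # fraction of the original signal kept
    noise = rng.normal(size=x0.shape)
    return np.sqrt(alpha_bar) * x0 + np.sqrt(1.0 - alpha_bar) * noise

betas = np.linspace(1e-4, 0.02, 1000)        # assumed linear noise schedule
rng = np.random.default_rng(0)
x0 = np.linspace(-1.0, 1.0, 64)              # a toy "image" with values in [-1, 1]

slightly_noisy = add_noise(x0, 0, betas, rng)
pure_noise = add_noise(x0, 999, betas, rng)
print("signal kept at the last step:", np.cumprod(1.0 - betas)[-1])
```

At early steps the result is nearly identical to the input, while at the last step almost none of the original signal remains.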

In machine learning, the aim is to learn the process of reverse diffusion – finding the parameters for a process that iteratively transforms random noise back into a coherent picture. That reconstruction process is conditioned by some sort of guidance signal.

How does the algorithm work?

In general, the algorithm works like this:

- The algorithm initializes the data to start with a noise signal that is randomly generated – typically a Gaussian distribution with zero mean and unit variance.

- The noise signal is used as the initial state of the diffusion process, and particles representing the positions of image pixels are sampled from that initial distribution.

- The diffusion of particles is simulated as a sequence of time steps; at each step, the particle positions are updated based on a probabilistic rule that models diffusion.

- The process is conditioned on a guidance signal – either an image or a text prompt.

- The signal is used to bias the diffusion toward generating an image matching the desired characteristics.

- Diffusion and conditioning steps are repeated iteratively for a fixed number of steps.

- As the process continues, the particles become more concentrated in regions that match the guidance signal.

- Once the diffusion process is complete, the final particle positions are transformed into an image using a neural network decoder.

- Optionally, additional post-processing steps are taken, such as color correction or denoising, to improve image quality.
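
The steps above can be mimicked with a one-dimensional toy sampler. It is not a trained diffusion model: the denoising term is a hand-written pull toward a data mean of 0, the guidance term is a pull toward a hypothetical target value, and the noise is annealed linearly to zero. All of these are simplifying assumptions chosen to keep the sketch short.

```python
import numpy as np

def guided_sample(n_samples=1000, steps=200, step_size=0.05,
                  guidance_scale=1.0, target=2.0, seed=0):
    rng = np.random.default_rng(seed)
    x = rng.normal(size=n_samples)               # start from zero-mean, unit-variance noise
    for i in range(steps):
        noise_level = 1.0 - (i + 1) / steps      # anneal the injected noise from ~1 to 0
        denoise = -x                             # stand-in for a learned denoiser (data mean 0)
        guidance = -guidance_scale * (x - target)  # bias toward the guidance signal
        x = (x + step_size * (denoise + guidance)
             + np.sqrt(2 * step_size) * noise_level * rng.normal(size=n_samples))
    return x

unguided = guided_sample(guidance_scale=0.0)
guided = guided_sample(guidance_scale=1.0)
print("unguided mean:", unguided.mean(), "guided mean:", guided.mean())
```

With guidance switched off, the samples settle around the data mean. With guidance on, they concentrate between the data mean and the target, which is the biasing effect the list describes.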

In CLIP Guided Diffusion, the author used Guided Diffusion – an implementation of a diffusion algorithm made by researchers from OpenAI – and steered it with the signal provided by CLIP. In this architecture, the CLIP model scores how well the image being generated matches the text prompt, and that score serves as guidance for the Guided Diffusion model. The user can also provide additional constraints on the desired image, such as the image resolution or the color palette.

Then, the Guided Diffusion model generates a sequence of noise vectors that are progressively refined to generate an image that matches the guidance provided by CLIP. The model iteratively updates the noise vectors by conditioning them on the previous noise vector, the guidance signal provided by CLIP, and a random diffusion noise. The resulting noise vectors are then transformed into an image using a neural network decoder.

Advantages and disadvantages of CLIP Guided Diffusion

CLIP Guided Diffusion has been shown to produce high-quality images with realistic textures and fine details that are difficult to achieve with other generative models. Perhaps more importantly, it is also highly controllable, allowing users to generate images that match specific styles or characteristics specified in the text prompt. The model can be used for a wide range of image generation tasks, including image editing, image synthesis, and image manipulation.

This invention sparked an explosion of interest in image generation, spreading knowledge of the technology to an even larger audience. Crowson’s model was soon followed by Disco Diffusion – a popular Google Colab notebook that used CLIP Guided Diffusion to provide an easy-to-use interface and a large number of customizable options. This tool quickly became popular among artists and AI enthusiasts, who now had even easier and more convenient access to technology previously available only to researchers at big tech companies.

One disadvantage of Diffusion Models is that they require a lot of computation to train and run, but the next development was about to change that.

Technology development

In December 2021, scientists from the CompVis group at LMU Munich unveiled their spin on the popular technique – Latent Diffusion. This algorithm offered a more efficient alternative to classic Diffusion Models.

Rather than working with the original image, it ran the diffusion process within a compressed image representation known as the latent space. By operating in this space, the algorithm could reconstruct the image while minimizing the computational burden. Additionally, the algorithm was versatile with regard to the input data, capable of working with various types, including images and text.

First, the initial image is encoded into the latent space, where the most relevant information is extracted and represented in a smaller subspace. This process is analogous to down-sampling, reducing the data size while preserving important features. Then, the same process is applied to any conditioning inputs, such as text or additional images, which are merged with the current image representation using an attention mechanism.

This mechanism learns to optimally combine the inputs and conditioning inputs in the latent space. The merged inputs are then used as the initial noise for the diffusion process, which takes place in the latent subspace. Finally, the image is reconstructed using a decoder, which can be viewed as the reverse of the encoding step.
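
To get a feel for why working in the latent space lowers the computational burden, the arithmetic below compares the number of values in a pixel-space image with the number in its latent representation. The shapes are assumptions: a 512 x 512 RGB image and a 64 x 64 latent with 4 channels, a configuration commonly cited for latent diffusion models but not stated in this article.

```python
def num_values(height, width, channels):
    """Number of scalar values in a tensor of the given shape."""
    return height * width * channels

pixel_values = num_values(512, 512, 3)   # assumed RGB image size
latent_values = num_values(64, 64, 4)    # assumed latent size
compression = pixel_values / latent_values

print(pixel_values, latent_values, compression)  # 786432 16384 48.0
```

Under these assumptions, every diffusion step operates on 48 times fewer values than it would in pixel space.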

Latent Diffusion proved to be capable of generating high-quality images that were diverse and realistic. It allowed for the creation of samples ranging from simple and smooth to complex and detailed. It was a flexible and scalable model architecture, able to be trained on large datasets to improve its accuracy and generalization capabilities. It has become an important step in the development of text-to-image models.

AI Image Generation Fever

Text-to-image AI models have come a long way in just a few short years, but until 2022 they remained fairly limited. Many of the earlier models required lots of computing power to run, especially during the training phase. This meant that they were often trained on smaller datasets, thus remaining of interest mostly to researchers. Some interesting technologies were available through Google Colab notebooks, allowing for easy code execution on hardware available through a cloud service. However, this method still required some technical competency, limiting the popularity of the underlying technology.

That started to change in 2022, when new applications began to appear, providing convenient interfaces to models based mostly on some implementation of the Diffusion Model.

In March 2022, MidJourney, an independent research lab, launched an open beta of its application, providing access to high-quality, artistically styled image generation through a chatbot available via the Discord communication app. While the service requires payment for continuous usage, it allows new users to generate a limited number of images for free.

Shortly thereafter, in April, another big image synthesis web service launched. Previously available only to a limited audience, OpenAI’s DALL-E 2 was opened to the public. Like MidJourney, it requires the purchase of credits to spend on generating pictures but offers a small number of non-accumulable credits each month for free.

DALL-E 2 was created by training a neural network on pairs of images and text descriptions. It can not only understand objects by combining textual descriptions with images but also comprehend relations between objects. Like many other state-of-the-art models, it uses a diffusion algorithm in its image synthesis process.

DALL-E 2 utilizes CLIP to learn the links between textual semantics of an image description and their visual representations, encoding the image into representation space. Next, a modified version of one of OpenAI’s previous models, called GLIDE, is used to invert the image encoding process to stochastically decode the image embeddings created by CLIP. This helps create variants of the given input that, although different from the original image or text prompt, can still produce results that capture the defining features of the provided input.

This architecture surpasses its previous version in terms of photorealism and caption similarity. On top of performance achievements, it also offers additional features besides simple text-to-image synthesis, such as:

- inpainting (generating specific objects and combining them into a larger picture),

- outpainting (creating visually consistent extensions of an input image),

- creating variations of input images.

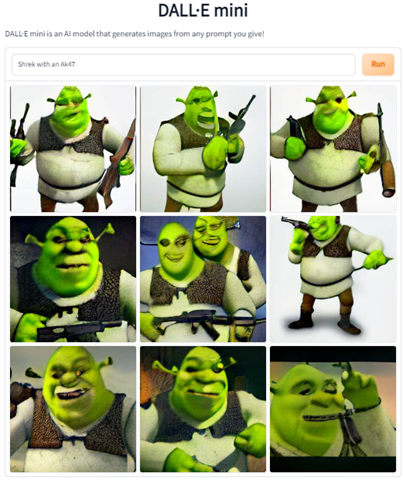

It is also worth mentioning an open-source implementation of DALL-E called Craiyon (formerly DALL-E Mini, renamed due to a legal dispute with OpenAI), made by independent developer and Machine Learning Engineer Boris Dayma. It could not match the quality of the original DALL-E architecture, but it gained some popularity outside the AI community through internet memes due to its often-goofy outputs.

Besides the two big applications, the first half of 2022 saw the release of many other interesting projects. In May, Google announced its Imagen image generation system, which could compete with DALL-E, and in June publicized Parti, another text-to-image model. Microsoft’s publications on their approach to image generation – NUWA and NUWA Infinity – are also noteworthy. Each of these architectures brought interesting results and variations on previously developed techniques. Sadly, none of them are available for testing to the public at large and their detailed description is outside the scope of this article.

The rise of Stable Diffusion

This brings us to July 2022 and the release of a model that arguably garnered the most popularity – Stable Diffusion. The model was developed by the same people behind the previous work on Latent Diffusion. Thanks to a bigger training dataset and improvements to the model’s design, it surpasses its predecessor in terms of both image quality and range of capabilities. Stable Diffusion conditions image synthesis using a frozen CLIP ViT-L/14 text encoder built into the model’s architecture, and it was trained on the LAION-2B dataset, which includes 2.32 billion English image-text pairs.

Despite the size of its training set, the model is relatively lightweight after training, with 860 million parameters for the UNet model and 123 million parameters for the text encoder (compared to, e.g., 3.5 billion parameters for DALL-E 2). Initially, it could be run on a GPU with at least 10 GB of VRAM, while later optimizations cut the required memory down to just 6 GB, with some people reporting successful inference on Nvidia cards with just 4 GB of VRAM.
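
A back-of-the-envelope calculation shows why the model fits on consumer GPUs. The sketch counts only the weights of the two components mentioned above and assumes 4 bytes per parameter for 32-bit floats and 2 bytes for 16-bit floats. It ignores the image decoder and the activations, so actual memory use during inference is higher.

```python
UNET_PARAMS = 860_000_000          # from the text above
TEXT_ENCODER_PARAMS = 123_000_000  # from the text above

def weight_memory_gib(num_params, bytes_per_param):
    """Memory needed to hold the weights alone, in GiB."""
    return num_params * bytes_per_param / 1024**3

total_params = UNET_PARAMS + TEXT_ENCODER_PARAMS
fp32_gib = weight_memory_gib(total_params, 4)
fp16_gib = weight_memory_gib(total_params, 2)
print(f"fp32: {fp32_gib:.2f} GiB, fp16: {fp16_gib:.2f} GiB")
```

Storing the weights at half precision halves their footprint, which is one plausible reason later optimizations could lower the VRAM requirement.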

When Stable Diffusion is compared directly with DALL-E 2 and MidJourney, its immediate results may sometimes seem inferior. However, its free access and open-source nature mean that, with some judicious engineering, state-of-the-art synthetic images are easily available to everyone.

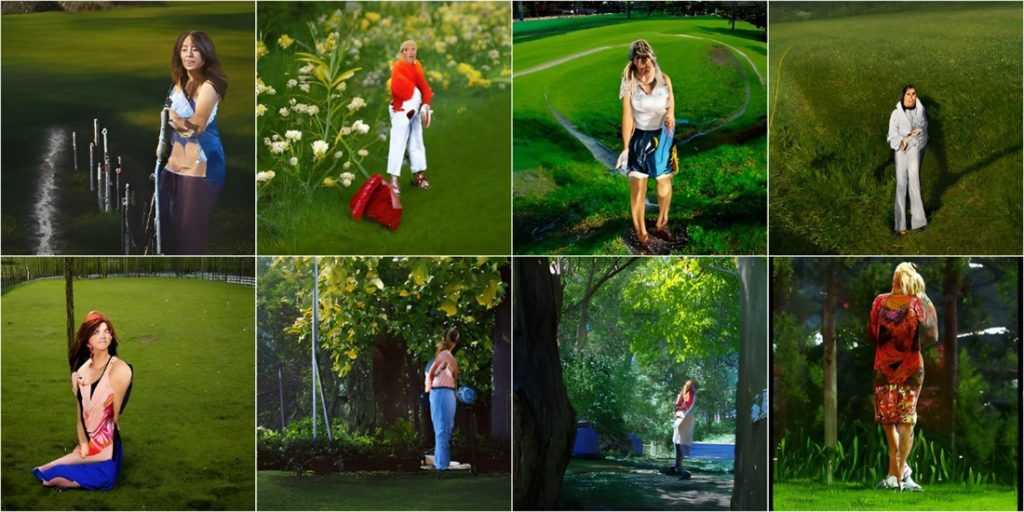

Furthermore, the flexibility and scalability of the architecture allowed the original model to be modified by fine-tuning it on smaller image datasets, producing specialized models for generating pictures in specific styles and of desired scenes, objects, and people. Newer techniques, such as Dreambooth and LoRA, simplify the process, allowing relatively quick fine-tuning and adding individual concepts to the model using small datasets of up to a few dozen images. Thanks to that, interest in Stable Diffusion has exploded since its release.
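
The core idea of LoRA (low-rank adaptation) can be sketched as adding a small trainable low-rank update to a frozen weight matrix. The layer size of 768 and the rank of 4 are hypothetical values chosen for illustration, and the snippet shows only the forward pass and the parameter count, not the training itself.

```python
import numpy as np

def lora_forward(x, w, a, b, scale=1.0):
    """Frozen layer output plus a low-rank correction: x @ (w + scale * b @ a).T"""
    return x @ w.T + scale * (x @ a.T) @ b.T

d_in, d_out, rank = 768, 768, 4              # hypothetical layer size and LoRA rank
rng = np.random.default_rng(0)
w = rng.normal(size=(d_out, d_in))           # frozen pretrained weights
a = rng.normal(size=(rank, d_in)) * 0.01     # trainable
b = np.zeros((d_out, rank))                  # trainable, zero at start so the output is unchanged
x = rng.normal(size=(2, d_in))

full_params = w.size
lora_params = a.size + b.size
print(full_params, lora_params)  # 589824 6144
```

Only the two small matrices are trained, which is why fine-tuning of this kind is fast and produces small files that can be shared separately from the base model.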

As of now, there are multiple ways of running the model, including web applications, Discord chat bots, and Google Colab notebooks, to name only some of the cloud-based possibilities. Additionally, there are numerous free and open-source applications providing a user interface for the model, allowing everyone to easily use its numerous features on their own hardware. With new features and additional models provided by both other researchers and a community of independent developers, Stable Diffusion opened the floodgates of creativity, ushering in a new age of freely and widely available AI-powered image generation.

Summary

The history of AI-powered image synthesis is an exciting journey spanning decades of research from all over the world. It is a tale full of hard scientific research, collaboration, and unexpected inventions. From early procedural algorithms, through Generative Adversarial Networks, the cross-domain synthesis of natural language processing and image generation in CLIP and GPT, to breakthroughs provided by Diffusion Models, this technology is a testament to the speed with which progress can be achieved.

All that is needed is for scientists and engineers to freely and openly use each other’s work, constantly building upon previous achievements and ever pushing the boundaries of human ingenuity.

With its massive disruptive potential, this technology has already made both dedicated supporters and ardent enemies. Many graphic designers and artists feel outraged that their work is being used to train AI models that could undercut their profession, and so they have decided to protest or even sue the companies behind those solutions. Still, other organizations are actively working on integrating the technology into their processes and building new solutions powered by it.

How exactly this controversy will unfold is not yet known, but one thing is certain: AI-driven image synthesis has the potential to revolutionize art, industry… and perhaps the entire society.

