Artificial Intelligence is more present in our lives than ever, largely thanks to growing computational power, which has made Deep Learning both popular and affordable.

Deep Learning (DL) has driven progress in many fields of study, such as Computer Vision, Natural Language Processing, Optimal Control, Time Series Forecasting, and Synthetic Data Generation. Various methods can help solve these tasks, but one remarkable family of algorithms can be applied to a very wide range of problems: (Deep) Reinforcement Learning.

What is Reinforcement Learning?

Reinforcement Learning is the field of Artificial Intelligence that tries to find the optimal action, or sequence of actions, in an environment through trial and error. An example would be a robot learning to balance a pole on a cart (the classic cart-pole task).

A short history of Reinforcement Learning

Reinforcement Learning originated in the 1950s, when researchers tried to solve the problem of finding optimal actions that maximize a certain objective (such as winning a game of chess). There were three main threads of interest:

- Learning through trial and error, e.g., animal learning (involves learning) – exploring the environment to find out what is beneficial.

- Finding optimal control in a known environment, e.g., dynamic programming or Model Predictive Control (does not involve learning) – finding the best actions based on information about the environment's dynamics.

- Temporal-difference learning (involves learning) – changing the strategy slightly at every learning step (according to the current results) in order to converge to the optimum.

The first thing to notice is that the first thread is more about psychology, while the second and third concern calculation and programming. At that time there was little connection between the three, but that changed with the rise of Q-Learning (1989).

Q-Learning succeeded thanks to two clever ideas:

- The agent assesses the current situation and the possible actions, then chooses the action with the best predicted outcome.

- After trying an action in a certain situation, the assessment is reinforced or weakened based on the outcome (sometimes the outcome comes only after several other actions).

These two simple rules brought together the theory behind the three early Reinforcement Learning threads. The elements taken from each are listed below:

- Learning through trial and error – changing the preference for actions in certain situations based on the outcome. To find good actions, the agent must explore the environment by executing sequences of actions according to some strategy.

- Finding optimal control – by updating its predictions, the agent learns to maximize a certain objective.

- Temporal-difference learning – the agent changes its preferences only a little at a time, because a poor outcome might be caused by a bad later decision rather than by the action itself.
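The two rules, together with the idea of changing preferences only a little, can be sketched in a few lines of Python. This is a minimal illustration rather than the original 1989 formulation: the function names, the step size `alpha`, the discount `gamma` applied to the value of what comes next, and the example numbers are all assumptions made for this sketch.

```python
def choose_action(q_values):
    """Rule 1: pick the action with the best predicted outcome."""
    return max(q_values, key=q_values.get)


def update_preference(old_value, reward, best_next_value, alpha=0.1, gamma=0.9):
    """Rule 2: nudge the old estimate toward the observed outcome.

    alpha (step size) and gamma (discount) are illustrative values.
    """
    target = reward + gamma * best_next_value
    return old_value + alpha * (target - old_value)


# Hypothetical predictions for a single situation with two actions.
q_values = {"left": 0.2, "right": 0.5}
action = choose_action(q_values)

# The outcome was better than predicted, so the estimate is reinforced a bit.
q_values[action] = update_preference(
    q_values[action], reward=1.0, best_next_value=0.0
)
print(action, q_values[action])
```

A small `alpha` means a single surprising outcome shifts the estimate only slightly, which matches the temporal-difference idea above.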

An extension of this was Deep Q-Learning, introduced by DeepMind in 2013, which used Deep Learning to greatly improve efficiency. Since then, many more Reinforcement Learning methods based on neural networks have emerged.

The theory behind Reinforcement Learning

The three most important concepts in Reinforcement Learning are:

- Markov Decision Process

- Q-value

- Bellman equation

- Markov Decision Process can be pictured as a graph in which each node is a state of the system and each action can move the agent between nodes. A good example is a simplified graph of a day at work:

We have three states and, in each state, we can choose one of three actions. Every action in a given state is associated with some reward. Our goal is to collect as much reward as possible. It may turn out that working for the entire day is not the optimal solution; sometimes we also need to take a break or even talk with people!
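As a rough sketch, such a graph can be written down as plain Python dictionaries. The original figure is not reproduced here, so the state names, action names, and reward values below are all hypothetical, as is the assumption that each action always leads to one fixed state.

```python
# Hypothetical workday MDP. Every name and number here is made up
# for illustration and does not come from the article's figure.
STATES = ["working", "on_break", "talking"]
ACTIONS = ["work", "take_break", "talk"]

# Assumption: each action deterministically leads to one state.
NEXT_STATE = {"work": "working", "take_break": "on_break", "talk": "talking"}

# REWARDS[state][action] is the reward for taking `action` in `state`.
REWARDS = {
    "working":  {"work": 1.0, "take_break": 2.0, "talk": 1.5},
    "on_break": {"work": 3.0, "take_break": 0.0, "talk": 1.0},
    "talking":  {"work": 2.5, "take_break": 0.5, "talk": 0.0},
}


def step(state, action):
    """Return (next_state, reward) for taking `action` in `state`."""
    return NEXT_STATE[action], REWARDS[state][action]


def total_reward(start, actions):
    """Sum the rewards collected along a sequence of actions."""
    state, total = start, 0.0
    for action in actions:
        state, reward = step(state, action)
        total += reward
    return total


always_work = total_reward("working", ["work"] * 4)
mixed_day = total_reward("working", ["take_break", "work", "talk", "work"])
print(always_work, mixed_day)
```

With these made-up rewards, the mixed schedule collects more reward than working nonstop, which is the point the example is meant to convey.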

- Q-value is the value associated with a state-action pair that combines the immediate reward with future ones. In other words, the same action can have different values in different states, depending on the present and future rewards that executing it brings. To introduce Q-values into the graph above, we add one more variable to each state-action pair, which converges to its true value when the Bellman equation is applied repeatedly.

- Bellman equation is what helps us find an optimal working day schedule. We can write it as:

Q(s, a) = reward + γ*Q(s’, a’)

Where:

s – current state

s’ – next state

a – action

a’ – next action

Q(s, a) – Q-value, used to estimate how profitable a certain action is in a certain state.

reward – the reward for taking a certain action in a certain state.

γ – discount factor (future rewards are uncertain, so their impact is reduced).

The idea behind this equation is quite simple. The value of an action also depends on the future possibilities that executing it opens up. We chain the values of consecutive actions together so that each value represents both the present and the future. The discount factor makes the future less important; it takes a value between 0 and 1, and the right choice depends on the situation.
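To see what the discount factor does, note that unrolling the equation over several steps gives reward₀ + γ·reward₁ + γ²·reward₂ + …, so a reward t steps ahead is weighted by γ to the power t. The short sketch below computes that sum; the helper name `discounted_return` and the example rewards are assumptions made for illustration.

```python
def discounted_return(rewards, gamma):
    """Sum rewards, weighting the reward t steps ahead by gamma**t."""
    return sum(r * gamma**t for t, r in enumerate(rewards))


rewards = [1.0, 1.0, 1.0]  # hypothetical rewards for three consecutive steps

print(discounted_return(rewards, gamma=1.0))  # future counts fully: 3.0
print(discounted_return(rewards, gamma=0.5))  # future counts less: 1.75
print(discounted_return(rewards, gamma=0.0))  # only the present counts: 1.0
```

A γ close to 1 makes the agent far-sighted, while a γ close to 0 makes it care almost only about the immediate reward.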

In simple cases, we can find the true Q-values with dynamic programming by creating a 2D array of state transitions (one row per state, one column per action) in which each entry is a Q-value. We can then move across the states, either randomly or following some strategy, and apply the Bellman equation to update the entries.
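The procedure just described can be sketched as follows. This is a minimal example under stated assumptions, not a reference implementation: the three-state workday, its rewards, and the rule that action a always leads to state a are all made up, and a’ is taken to be the best action in the next state (the Q-Learning choice).

```python
import random

# Hypothetical 3-state, 3-action workday; all numbers are made up.
# Index meaning for both states and actions: 0 = work, 1 = break, 2 = talk.
# Assumption: taking action a always moves the agent to state a.
REWARDS = [
    [1.0, 2.0, 1.5],  # rewards for each action while working
    [3.0, 0.0, 1.0],  # rewards for each action while on a break
    [2.5, 0.5, 0.0],  # rewards for each action while talking
]
GAMMA = 0.9  # illustrative discount factor


def learn_q_table(steps=20000, seed=0):
    """Fill a 2D table q[state][action] by random moves and Bellman updates."""
    rng = random.Random(seed)
    q = [[0.0] * 3 for _ in range(3)]
    state = 0
    for _ in range(steps):
        action = rng.randrange(3)  # move randomly to explore
        next_state = action
        # Bellman update, with a' chosen as the best action in the next state.
        q[state][action] = REWARDS[state][action] + GAMMA * max(q[next_state])
        state = next_state
    return q


q_table = learn_q_table()
best_actions = [row.index(max(row)) for row in q_table]
print(best_actions)
```

Because this toy environment is deterministic, each entry can simply be overwritten with the right-hand side of the equation; a noisy environment would instead call for the small, gradual updates described earlier.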

Harder tasks, such as those with thousands of states and thousands of actions, require the use of neural networks.

Methods and application in real life

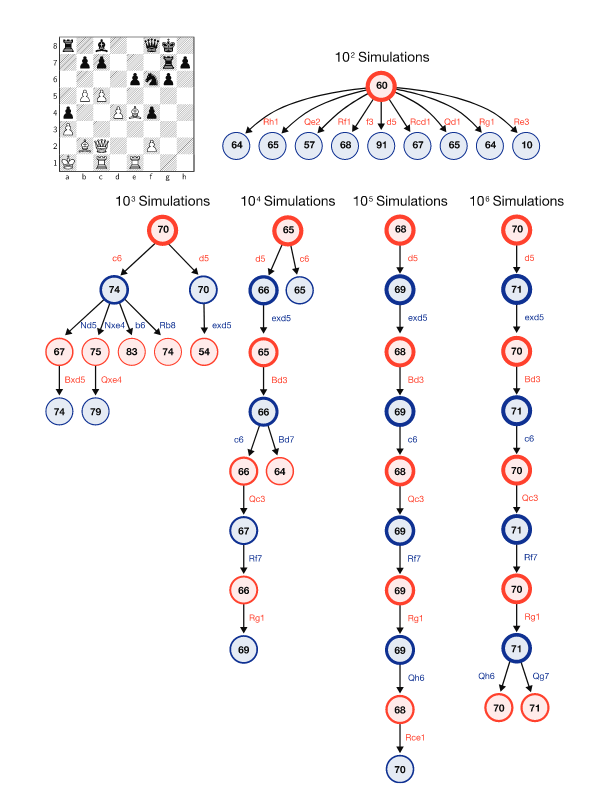

Probably the best-known chess algorithm besides Stockfish is AlphaZero, a Deep Reinforcement Learning algorithm. By playing against itself, it reached superhuman strength and beat the best chess engines of its time. But what is the secret of this agent?

AlphaZero combines two components: one chooses actions using a certain strategy, and the other assesses their Q(s, a). The agent simulates a few paths (possible game continuations) using the strategy and then scores them using Q(s, a). The evaluations are updated depending on whether the algorithm won or not. Thanks to this combination, it achieved superior results after only 9 hours of learning!

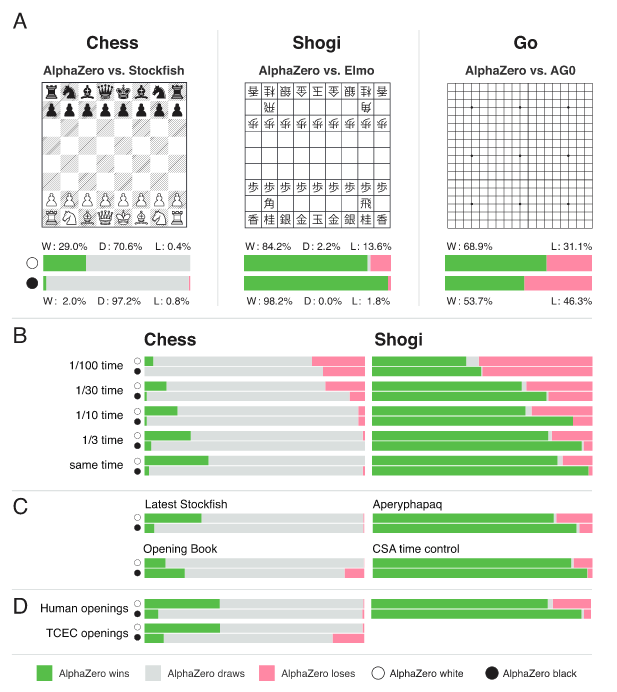

In their paper, DeepMind also compared AlphaZero with other state-of-the-art programs in chess, shogi, and Go:

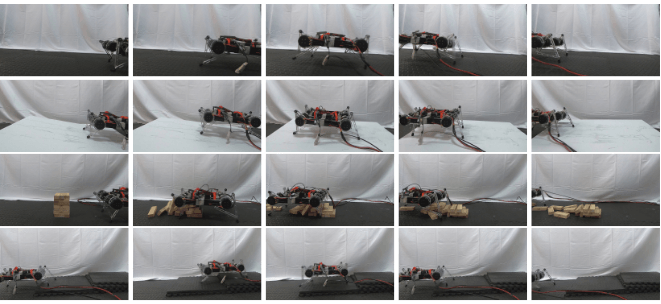

You can find more information in the original AlphaZero blog post. Another interesting example comes from researchers who, in their paper, managed to teach real-life robots to walk on four legs.

The algorithm they used is called Soft Actor-Critic. Besides trying to find the true Q(s, a) function, it adds a bonus for entropy when making decisions, which helps with exploration. Higher entropy means the agent is less decided about which action to take.
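The entropy bonus can be illustrated with a small sketch. Soft Actor-Critic is usually applied to continuous actions with neural networks, so the discrete version below is a deliberate simplification; the function names, the `temperature` weight, and the example probabilities are assumptions made for illustration.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a discrete action distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def soft_value(q_values, probs, temperature=0.2):
    """Expected Q-value plus an entropy bonus weighted by `temperature`."""
    expected_q = sum(p * q for p, q in zip(probs, q_values))
    return expected_q + temperature * entropy(probs)


decisive = [0.98, 0.01, 0.01]       # almost always picks the first action
undecided = [1 / 3, 1 / 3, 1 / 3]   # spreads choices evenly

q_values = [1.0, 1.0, 1.0]  # hypothetical, equally good actions
print(entropy(decisive), entropy(undecided))
print(soft_value(q_values, decisive), soft_value(q_values, undecided))
```

When the actions look equally good, the undecided policy scores higher because of the bonus, which is how the extra term keeps the agent exploring.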

The paper claims that an agent trained this way could deal with unfamiliar situations, such as obstacles in its way or a slippery path.

The results are shown as photos:

Besides these two examples, RL has various other applications, such as NLP (Natural Language Processing), where it can be used for text summarization, machine translation, or chatbots.

Another use can be found in trading and finance, for predicting time series.

RL can also be used for recommendation algorithms instead of simple clustering. In that case, the rewards are reviews and likes of some content, so the task fits the Reinforcement Learning framework well.

A very recent study also showed that these algorithms can be applied to video compression, where they beat human-made algorithms by 4%! For those interested, there is a great explanation on YouTube:

For people who want to know more, there are great blog posts on Neptune and O'Reilly.

Summary

We are slowly seeing more and more Reinforcement Learning in our daily lives. Many areas of technology can be improved with Reinforcement Learning. The trial-and-error framework is an enormously powerful tool that can help with any task where we can assess the final results.

***

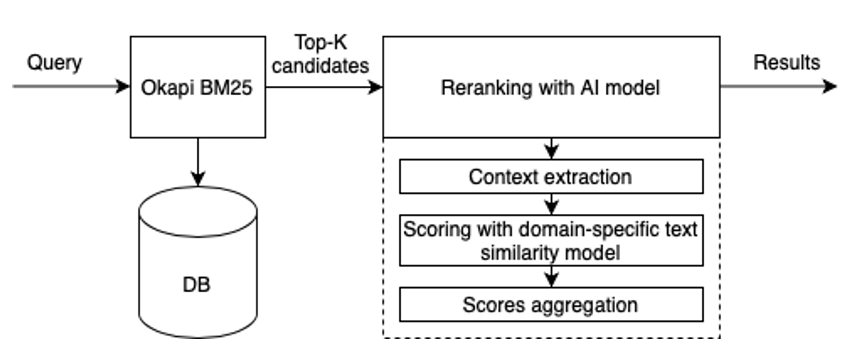

