Among the many tools already available, and those constantly emerging in the dynamic IT world, Testcontainers caught my attention back in 2022.

I would like to show you which challenges and problems this tool can solve once you add it to your toolkit. This won’t be an article solely praising the tool: besides its obvious advantages, I also want to point out its disadvantages. I will, however, reveal my final verdict up front: I am a Testcontainers proponent, and it has become a permanent part of my test frameworks.

Docker for a manual tester

If you’ve dealt with databases, you probably remember the effort of preparing a DB working environment “from scratch”. Even for a purely local environment, you need a DB driver and client, and you have to set everything up properly, including a valid connection string. This is especially cumbersome with Oracle databases.

All of this can be done much more efficiently with Docker. Docker Hub, Docker’s central image registry, contains an ever-growing collection of images, including Oracle Database images. Vendors publish their official images there, but other developers also provide their own versions. In short, we have almost every version of almost any tool in one place.

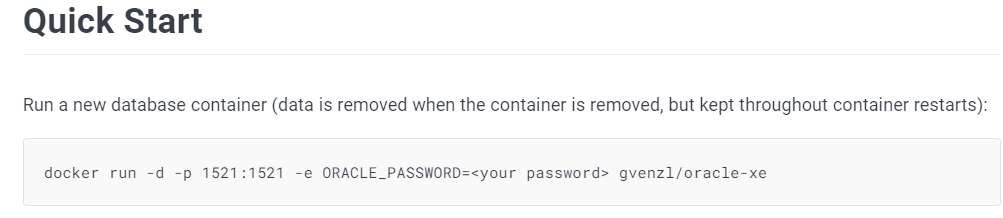

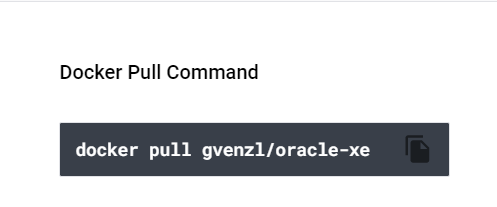

I choose an Oracle version, e.g. 9, represented by a Docker tag, and run the docker pull command, which downloads the image to the local disk.

The benefit is significant, because the Docker image that lands on our disk already contains everything we need to use the database. The next command, docker run, starts a container from that image and makes the database available for work.

In an image’s description, the author usually provides ready-made commands in several variants. All you need to do is run them locally, e.g. in the Windows console.
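If you would rather script these steps than type them, a minimal Java sketch can assemble the docker pull and docker run command lines for you. The image reference, container name, port mapping and environment variable below are hypothetical placeholders, not the commands from any particular image description; the only real parts are the standard docker CLI flags -d, --name, -p and -e. Always copy the exact variant from the image’s page.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Minimal sketch: builds docker CLI argument lists for pulling and starting a database image.
// All concrete values in main() are hypothetical placeholders.
public class DockerCommands {

    // "docker pull <image>:<tag>" downloads the image to the local disk.
    public static List<String> pull(String image, String tag) {
        return List.of("docker", "pull", image + ":" + tag);
    }

    // "docker run -d --name <name> -p <host>:<container> -e KEY=VALUE ... <image>:<tag>"
    // starts a detached container from the downloaded image.
    public static List<String> run(String image, String tag, String name,
                                   int hostPort, int containerPort, Map<String, String> env) {
        List<String> cmd = new ArrayList<>(List.of("docker", "run", "-d", "--name", name,
                "-p", hostPort + ":" + containerPort));
        env.forEach((key, value) -> {
            cmd.add("-e");
            cmd.add(key + "=" + value);
        });
        cmd.add(image + ":" + tag);
        return cmd;
    }

    public static void main(String[] args) {
        // Hypothetical values; replace them with those from the image description.
        System.out.println(String.join(" ", pull("example/oracle-db", "latest")));
        System.out.println(String.join(" ",
                run("example/oracle-db", "latest", "oracle-db", 1521, 1521,
                        Map.of("DB_PASSWORD", "secret"))));
        // To actually execute a command (requires Docker on the PATH):
        // new ProcessBuilder(pull("example/oracle-db", "latest")).inheritIO().start().waitFor();
    }
}
```

Returning argument lists instead of one big string keeps the command safe to pass straight to ProcessBuilder, without any shell quoting issues.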

Advantages and disadvantages of the solution

I’m not saying that working with Docker is trivial, but I would argue that once you try it, you won’t go back to the demanding installations and tedious configuration of desktop versions.

There is also a significant saving in disk space: installing a native tool almost always takes up much more disk space than the corresponding Docker image.

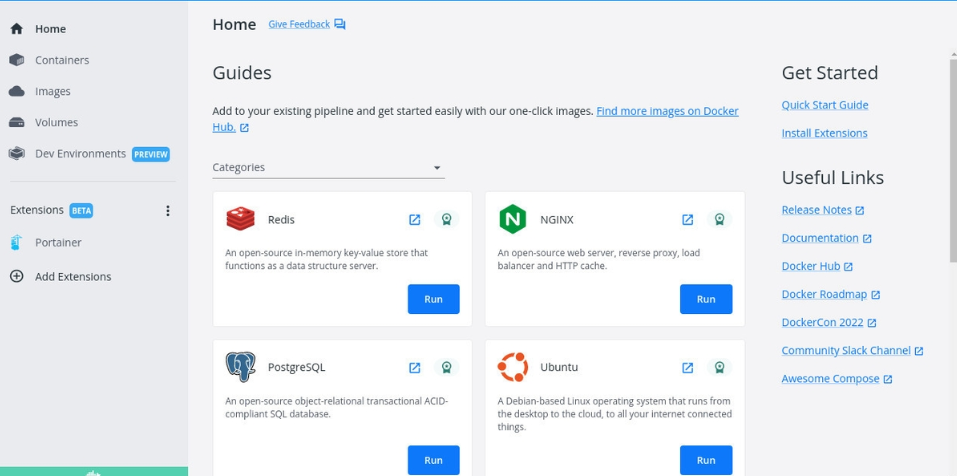

The downsides and inconveniences include the one-time installation of Docker itself. Docker is a console tool, but on Windows you can use Docker Desktop, which lets you manage Docker images and containers through a graphical interface.

It must also be admitted that you need to learn the console commands to move around Docker freely. However, this is mainly an investment of time: I can confidently say that the free resources available online are sufficient, and a manual tester does not need paid training.

Docker for an automation tester and developer

Developers have already come to appreciate Docker and take full advantage of it. We can launch a container with almost any application in moments, use it, and shut it down just as quickly. When we don’t need to persist the application’s state, the whole process is seamless. Exchanging files between the local disk and the container, e.g. copying a file into the container or from the container to the disk, is no trouble either.

It is also possible to run multiple containers at the same time and connect them into one network.

Testcontainers

However, testing applications that use containers poses a challenge, especially for applications built on a microservice architecture. Integration testing is the phase in which we test how different system components work together, e.g. a REST API and its database, or a microservice that produces and consumes Kafka messages together with the Kafka broker.

When preparing automated integration tests for such an architecture, we need a database container, e.g. PostgreSQL, or a message broker container, e.g. Kafka. This container must be started from the test code.

This is where Testcontainers comes into play. It is a library that provides an API for running Docker containers from code. It supports the following programming languages:

- Java,

- Go,

- .NET,

- Python,

- Node.js,

- Rust.

The library automatically starts the containers and stops them as soon as the test is completed. It is an extremely “clean” approach. The environment of the application under test is built automatically and relatively quickly (about 2-3 extra seconds) just before the test. Then the logic of our scenarios is executed, the result is returned, and finally the container disappears for good.

For an automation tester, this “ephemerality” is extremely attractive. Testcontainers provides a mechanism for preparing test data “on the fly”. Moreover, the need to clean up the state after a test, so that artifacts and leftover data do not inadvertently affect subsequent executions of the same test, disappears. Each test run gets its own “fresh” component instance, which disappears after the test along with all its contents.
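The “fresh instance per test” idea can be illustrated without Docker at all. The following Java sketch is only an analogy: a hypothetical EphemeralDatabase class (an in-memory list standing in for a container) is created, seeded and discarded in a try-with-resources block, so nothing leaks from one test run into the next. Testcontainers achieves the same effect with real containers; none of the names here come from its API.

```java
import java.util.ArrayList;
import java.util.List;

// Analogy only: an in-memory stand-in for a container that lives for a single test.
public class EphemeralFixtureDemo {

    // Hypothetical stand-in for a database container; not a Testcontainers class.
    static final class EphemeralDatabase implements AutoCloseable {
        private final List<String> rows = new ArrayList<>();
        private boolean running = true;

        EphemeralDatabase(List<String> seedData) {
            rows.addAll(seedData); // test data prepared "on the fly" at startup
        }

        void insert(String row) {
            if (!running) throw new IllegalStateException("already stopped");
            rows.add(row);
        }

        int count() {
            return rows.size();
        }

        @Override
        public void close() {
            rows.clear(); // all contents disappear together with the instance
            running = false;
        }
    }

    // One "test run": start a fresh instance, modify it, let try-with-resources dispose of it.
    public static int runTest(List<String> seed) {
        try (EphemeralDatabase db = new EphemeralDatabase(seed)) {
            db.insert("leftover from this run");
            return db.count();
        }
    }

    public static void main(String[] args) {
        List<String> seed = List.of("Brad", "Angelina", "Salma");
        // Both runs see exactly the seed data plus their own insert: no cleanup code needed.
        System.out.println(runTest(seed)); // 4
        System.out.println(runTest(seed)); // 4
    }
}
```

The second run does not see the first run’s leftover row, which is exactly the property that makes post-test cleanup unnecessary.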

Testcontainers/ryuk

Closing the containers is handled by “testcontainers/ryuk”. It always starts, even though we never declare it in the code. It is a privileged container responsible for the correct and automatic removal of the containers that Testcontainers started (automatic cleanup).

This mechanism is of great importance, especially when we stop the execution of tests in a non-standard way, for example:

- an exception occurs during the scenario execution,

- the full test environment could not be started because one of the containers was missing,

- we stop the test from the IDE while debugging.

In all these and similar situations, the running containers still have to be removed so that no “dead containers” are left behind. Although the library’s documentation allows you to configure Ryuk yourself, or even disable it (TESTCONTAINERS_RYUK_DISABLED=true), doing so is not recommended.
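Ryuk itself is a separate container: the library keeps a connection open to it, and when that connection is lost (for example because the JVM was killed), Ryuk removes the resources Testcontainers created. The Java sketch below only illustrates the simpler, in-process “reaper” idea with a registry and a JVM shutdown hook; the ContainerRegistry class is hypothetical and is not how Ryuk is implemented. Unlike Ryuk, a shutdown hook does not run when the process is killed abruptly, which is why a separate watchdog container is more robust.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.function.Consumer;

// Illustration of the "reaper" idea only; not how Ryuk is implemented.
public class ReaperSketch {

    // Hypothetical registry of started resources (e.g. container IDs).
    static final class ContainerRegistry {
        private final List<String> started = Collections.synchronizedList(new ArrayList<>());
        private final Consumer<String> stopper;

        ContainerRegistry(Consumer<String> stopper) {
            this.stopper = stopper;
        }

        void register(String containerId) {
            started.add(containerId);
        }

        // Stops everything still registered, e.g. after an exception or an aborted debug session.
        List<String> reapAll() {
            List<String> reaped;
            synchronized (started) {
                reaped = new ArrayList<>(started);
                started.clear();
            }
            reaped.forEach(stopper);
            return reaped;
        }

        // Runs reapAll() on a normal JVM shutdown; it does NOT run if the process is killed (SIGKILL).
        void installShutdownHook() {
            Runtime.getRuntime().addShutdownHook(new Thread(this::reapAll));
        }
    }

    // Simulates a scenario that fails mid-test and shows that cleanup still happens.
    public static List<String> demo() {
        List<String> stopped = new ArrayList<>();
        ContainerRegistry registry = new ContainerRegistry(stopped::add);
        registry.register("postgres-1");
        registry.register("kafka-1");
        try {
            throw new IllegalStateException("scenario failed mid-test");
        } catch (IllegalStateException expected) {
            // the test failed, but we still want a clean environment
        } finally {
            registry.reapAll();
        }
        return stopped;
    }

    public static void main(String[] args) {
        System.out.println(demo()); // [postgres-1, kafka-1]
    }
}
```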

Testcontainers for Java: demo

Let me present a practical example of using Testcontainers in Java code. I want to use a PostgreSQL database as a component while testing the Controller layer of a simple microservice. I chose the Micronaut framework, but the approach works just as well with the more traditional Spring Boot.

The service is a classic CRUD application: it reads, saves, edits and deletes Actor entities in the database. It is divided into layers:

- Controller,

- Service,

- Repository.

In Micronaut, the model class looks as follows:

```java
package com.example;

import io.micronaut.core.annotation.Nullable;
import io.micronaut.data.annotation.GeneratedValue;
import io.swagger.v3.oas.annotations.media.Schema;

import javax.persistence.Entity;
import javax.persistence.Id;
import javax.validation.constraints.NotBlank;
import javax.validation.constraints.NotNull;
import javax.validation.constraints.Size;

@Schema(description = "Actor business model")
@Entity
public class Actor {

    @GeneratedValue
    @Id
    @Nullable
    private Long id;

    @NotBlank
    @Size(max = 20)
    private String firstName;

    @NotBlank
    @Size(max = 20)
    private String lastName;

    // @NotBlank applies only to character sequences; a numeric field needs @NotNull
    @NotNull
    private Long rating;

    // getters and setters
}
```

[src/main/java/model/Actor]

I defined the Postgres DataSource in the application.yml configuration file, which looks as follows:

```yaml
datasources:
  default:
    url: jdbc:postgresql://localhost:5432/actor
    driverClassName: org.postgresql.Driver
    username: postgres
    password: postgres
    schema-generate: NONE
    dialect: POSTGRES
    schema: public
```

When the database with the right schema is running, everything works flawlessly, and I can run my API tests written, for example, in REST Assured. However, if the database is down, no test will give reliable results. Testcontainers lets me “turn on” the missing component only for the duration of my tests.

The first step is to add the dependencies to the project:

Maven:

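```xml
<dependency>
    <groupId>org.junit.jupiter</groupId>
    <artifactId>junit-jupiter</artifactId>
    <version>5.8.1</version>
    <scope>test</scope>
</dependency>
<dependency>
    <groupId>org.testcontainers</groupId>
    <artifactId>testcontainers</artifactId>
    <version>1.17.6</version>
    <scope>test</scope>
</dependency>
<dependency>
    <groupId>org.testcontainers</groupId>
    <artifactId>junit-jupiter</artifactId>
    <version>1.17.6</version>
    <scope>test</scope>
</dependency>
<!-- PostgreSQLContainer and the jdbc:tc:postgresql URL come from this module -->
<dependency>
    <groupId>org.testcontainers</groupId>
    <artifactId>postgresql</artifactId>
    <version>1.17.6</version>
    <scope>test</scope>
</dependency>
```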

Gradle:

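```groovy
testImplementation "org.junit.jupiter:junit-jupiter:5.8.1"
testImplementation "org.testcontainers:testcontainers:1.17.6"
testImplementation "org.testcontainers:junit-jupiter:1.17.6"
// PostgreSQLContainer and the jdbc:tc:postgresql URL come from this module
testImplementation "org.testcontainers:postgresql:1.17.6"
```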

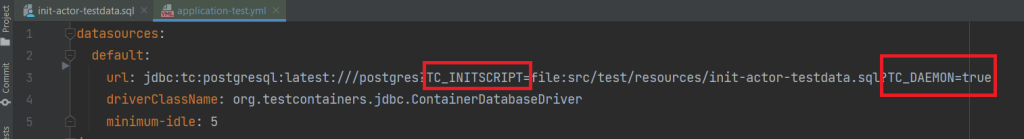

Next, I define the DataSource for the tests. In Micronaut, this is done in the application-test.yml configuration file:

```yaml
datasources:
  default:
    # further TC_* parameters are separated with '&', not with a second '?'
    url: jdbc:tc:postgresql:latest:///postgres?TC_INITSCRIPT=file:src/test/resources/init-actor-testdata.sql&TC_DAEMON=true
    driverClassName: org.testcontainers.jdbc.ContainerDatabaseDriver
    minimum-idle: 5
```

Note the tc prefix in the url parameter (jdbc:tc:), which indicates that the connection is handled by Testcontainers. Likewise, driverClassName points to a class from the org.testcontainers package.

Now we can mark the test class with the @Testcontainers annotation to indicate that it uses Docker containers:
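The special URL has the shape jdbc:tc:&lt;database&gt;:&lt;image tag&gt;:///&lt;database name&gt;, optionally followed by TC_* parameters. As in any query string, the first parameter follows a ? and every further one is joined with &. A small Java helper makes that rule explicit; TcJdbcUrl is a hypothetical name for illustration and is not part of Testcontainers.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.StringJoiner;

// Hypothetical helper that assembles a Testcontainers JDBC URL; not part of the library.
public class TcJdbcUrl {

    public static String build(String database, String imageTag, String dbName,
                               Map<String, String> params) {
        String base = "jdbc:tc:" + database + ":" + imageTag + ":///" + dbName;
        if (params.isEmpty()) {
            return base;
        }
        // The first parameter follows '?', every further one is separated by '&'.
        StringJoiner query = new StringJoiner("&", "?", "");
        params.forEach((key, value) -> query.add(key + "=" + value));
        return base + query;
    }

    public static void main(String[] args) {
        Map<String, String> params = new LinkedHashMap<>(); // keeps insertion order
        params.put("TC_INITSCRIPT", "file:src/test/resources/init-actor-testdata.sql");
        params.put("TC_DAEMON", "true");
        System.out.println(build("postgresql", "latest", "postgres", params));
        // jdbc:tc:postgresql:latest:///postgres?TC_INITSCRIPT=file:src/test/resources/init-actor-testdata.sql&TC_DAEMON=true
    }
}
```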

```java
@Testcontainers
@MicronautTest(environments = "test")
@Slf4j
public class JdbcTemplateActorTest {
}
```

[src/test/java/ JdbcTemplateActorTest]

Next, we add the @Container annotation to a field that defines the type and version of the container:

```java
@Testcontainers
@MicronautTest(environments = "test")
@Slf4j
public class JdbcTemplateActorTest {

    // static: the container is started once and shared by all tests in this class
    @Container
    private static final PostgreSQLContainer<?> postgres = PostgresContainer.getContainerPostgres();

    // ...
}
```

[src/test/java/ JdbcTemplateActorTest]

The remaining annotations are:

- @MicronautTest(environments = “test”): a Micronaut annotation indicating that the test runs within the Micronaut test context with the test environment (and therefore application-test.yml) active.

- @Slf4j: a Lombok annotation that generates a logger field in the class.

The PostgreSQLContainer class provides support specific to PostgreSQL containers, such as getJdbcUrl(), getUsername() and getPassword(). There is also the generic GenericContainer class, which can hold any container; since PostgreSQLContainer extends it, the same field could also be declared as:

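```java
// GenericContainer can hold any container, but it lacks JDBC helpers such as getJdbcUrl()
@Container
private static final GenericContainer<?> postgres = PostgresContainer.getContainerPostgres();
```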

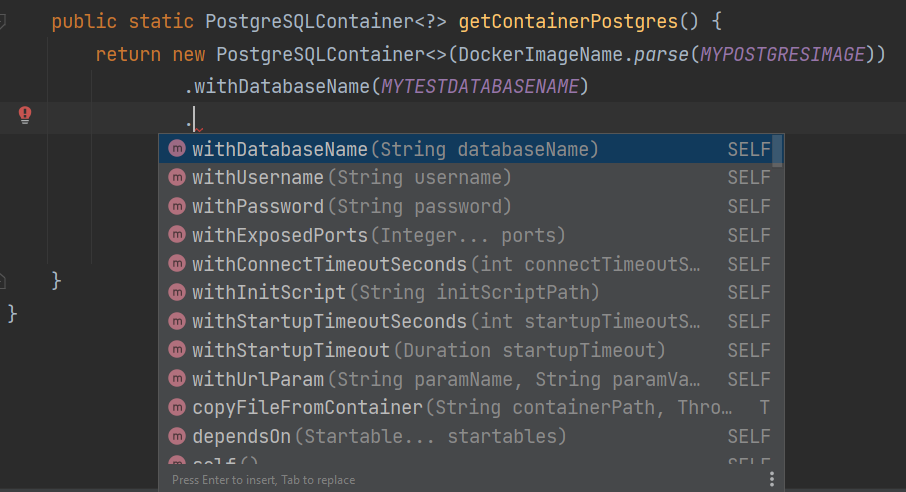

Testcontainers needs only the absolute minimum of configuration for our container: we choose the Postgres image we need and, unless the defaults suit us, define the database name, username and password.

Personally, I prefer to keep such configuration out of the test class and put it in a separate class responsible for Postgres containers. This makes sense because Postgres is not the only component we could potentially need. When Kafka, Redis or MongoDB come into play, each configuration stays neatly separated.

The code for such a class might look as follows:

```java
package com.example.containers;

import org.testcontainers.containers.PostgreSQLContainer;
import org.testcontainers.utility.DockerImageName;

public class PostgresContainer extends PostgreSQLContainer<PostgresContainer> {

    private static final String MYPOSTGRESIMAGE = "postgres:latest";
    private static final String MYTESTDATABASENAME = "actor";
    private static final String USERNAME = "postgres";
    private static final String PASSWD = "postgres";
    private static final Integer DB_PORT = 5432;

    private PostgresContainer() {
        super(DockerImageName.parse(MYPOSTGRESIMAGE));
    }

    // Factory method: returns a configured but not yet started container;
    // the @Container field in the test takes care of starting and stopping it.
    public static PostgreSQLContainer<?> getContainerPostgres() {
        return new PostgresContainer()
                .withDatabaseName(MYTESTDATABASENAME)
                .withExposedPorts(DB_PORT) // 5432 is already exposed by PostgreSQLContainer
                .withUsername(USERNAME)
                .withPassword(PASSWD);
    }
}
```

[src/test/containers/PostgresContainer]

The static method getContainerPostgres() is the most relevant part here. It uses several methods prefixed with “with” to configure the container’s state and the way we connect to it.

Chaining these calls one after another (a fluent API) makes this very convenient.

Now all that is left is to pass the container’s database connection details to the test DataSource. With Micronaut’s @MockBean annotation this is very simple; in the test class it can look as follows:
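Method chaining works because each with... method configures the object and then returns it. The Java sketch below reproduces that pattern with a hypothetical ContainerSpec class, which is not a Testcontainers type, using the same values as PostgresContainer:

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical fluent configuration object illustrating the "with..." style; not a Testcontainers class.
public class ContainerSpec {
    private final String image;
    private String databaseName;
    private String username;
    private String password;
    private final List<Integer> exposedPorts = new ArrayList<>();

    public ContainerSpec(String image) {
        this.image = image;
    }

    // Each "with" method mutates the object and returns this, so calls can be chained.
    public ContainerSpec withDatabaseName(String databaseName) {
        this.databaseName = databaseName;
        return this;
    }

    public ContainerSpec withUsername(String username) {
        this.username = username;
        return this;
    }

    public ContainerSpec withPassword(String password) {
        this.password = password;
        return this;
    }

    public ContainerSpec withExposedPorts(Integer... ports) {
        Collections.addAll(exposedPorts, ports);
        return this;
    }

    @Override
    public String toString() {
        // the password is deliberately left out of the printed form
        return image + " db=" + databaseName + " user=" + username + " ports=" + exposedPorts;
    }

    public static void main(String[] args) {
        ContainerSpec spec = new ContainerSpec("postgres:latest")
                .withDatabaseName("actor")
                .withExposedPorts(5432)
                .withUsername("postgres")
                .withPassword("postgres");
        System.out.println(spec); // postgres:latest db=actor user=postgres ports=[5432]
    }
}
```

In the real library, the with... methods are declared on GenericContainer&lt;SELF extends GenericContainer&lt;SELF&gt;&gt; and return SELF. That is why classes such as PostgreSQLContainer carry a type parameter: the chain keeps returning the specific subtype.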

```java
@Testcontainers
@MicronautTest(environments = "test")
@Slf4j
public class JdbcTemplateActorTest {

    @Container
    private static final PostgreSQLContainer<?> postgres = PostgresContainer.getContainerPostgres();

    private DataSource postgresDataSource;
    private JdbcTemplate jdbcTemplate;

    // The constructor name must match the class name
    @Inject
    public JdbcTemplateActorTest(DataSource postgresDataSource) {
        this.postgresDataSource = postgresDataSource;
    }

    // Replaces the application's DBConnector bean with one pointing at the test container
    @MockBean(DBConnector.class)
    DBConnector postgresConnection() {
        PostgresTestContainer dbConnection = new PostgresTestContainer();
        dbConnection.setUrl(postgres.getJdbcUrl());
        dbConnection.setUsername(postgres.getUsername());
        dbConnection.setPasswd(postgres.getPassword());
        return dbConnection;
    }

    // ... tests follow
}
```

[src/test/java/ JdbcTemplateActorTest]

The JdbcTemplate field visible in the class serves only as a simple way to query the database. Thanks to it, we can confirm that the database actually comes up when we need it, because we can execute our queries and receive the results.

With everything prepared, I can implement two simple tests:

- Test 1: shouldGetAllActorsFromDBBasedOnTestContainers() retrieves all actors from the database (“SELECT * FROM actor”);

- Test 2: shouldGetSingleActorFromDBBasedOnTestContainers() retrieves one actor from the database (“SELECT firstname FROM actor WHERE id=1”).

```java
// Static imports used below:
// import static org.assertj.core.api.Assertions.assertThat;
// import static org.awaitility.Awaitility.await;

@Test
void shouldGetAllActorsFromDBBasedOnTestContainers() {
    jdbcTemplate = new JdbcTemplate(postgresDataSource);
    // Awaitility: poll until the data from the init script is visible (at most 10 s)
    await().atMost(10, TimeUnit.SECONDS)
            .until(this::isRecordLoaded);

    var dbResults = this.getLoadedRecords();

    assertThat(dbResults).hasSize(3);
    assertThat(dbResults.get(0).get("firstname")).isEqualTo("Brad");
    assertThat(dbResults.get(1).get("firstname")).isEqualTo("Angelina");
    assertThat(dbResults.get(2).get("firstname")).isEqualTo("Salma");
}

@Test
void shouldGetSingleActorFromDBBasedOnTestContainers() {
    jdbcTemplate = new JdbcTemplate(postgresDataSource);
    await().atMost(10, TimeUnit.SECONDS)
            .until(this::isRecordLoaded);

    var dbResultsSize = this.getLoadedRecords().size();
    var dbResult = this.getSingleRecord();

    assertThat(dbResultsSize).isEqualTo(3);
    assertThat(dbResult).isEqualTo("Brad");
}

private boolean isRecordLoaded() {
    return jdbcTemplate.queryForList("SELECT * FROM actor").size() > 1;
}

private List<Map<String, Object>> getLoadedRecords() {
    // ORDER BY makes the row order (and the index-based assertions) deterministic
    return jdbcTemplate.queryForList("SELECT * FROM actor ORDER BY id");
}

private String getSingleRecord() {
    return jdbcTemplate.queryForObject("SELECT firstname FROM actor WHERE id=1", String.class);
}
```

[src/test/java/ JdbcTemplateActorTest]
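The await().atMost(10, TimeUnit.SECONDS).until(this::isRecordLoaded) call comes from the Awaitility library: it re-evaluates the condition until it becomes true or the timeout expires. A stdlib-only sketch of the same polling idea, with a hypothetical waitUntil helper and an arbitrarily chosen 100 ms poll interval, might look like this:

```java
import java.time.Duration;
import java.util.concurrent.TimeoutException;
import java.util.function.BooleanSupplier;

// Hypothetical polling helper illustrating what an "await ... until" call does; not Awaitility's API.
public class Polling {

    public static void waitUntil(BooleanSupplier condition, Duration timeout, Duration interval)
            throws TimeoutException, InterruptedException {
        long deadline = System.nanoTime() + timeout.toNanos();
        while (!condition.getAsBoolean()) {
            // overflow-safe comparison of nanoTime values
            if (System.nanoTime() - deadline >= 0) {
                throw new TimeoutException("Condition not met within " + timeout);
            }
            Thread.sleep(interval.toMillis());
        }
    }

    public static void main(String[] args) throws Exception {
        long start = System.currentTimeMillis();
        // Simulated condition: "records are loaded" roughly 300 ms after start.
        waitUntil(() -> System.currentTimeMillis() - start >= 300,
                Duration.ofSeconds(10), Duration.ofMillis(100));
        System.out.println("condition met");
    }
}
```

Polling with a timeout keeps the test robust against slow container startup without hard-coding a fixed sleep.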

Results

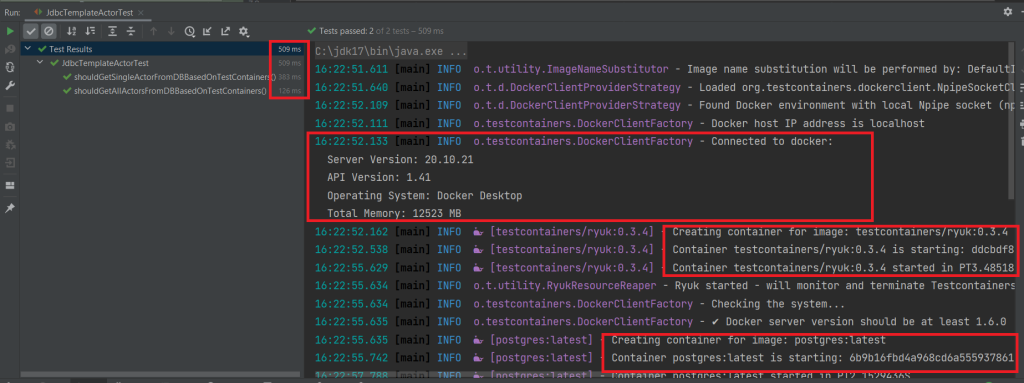

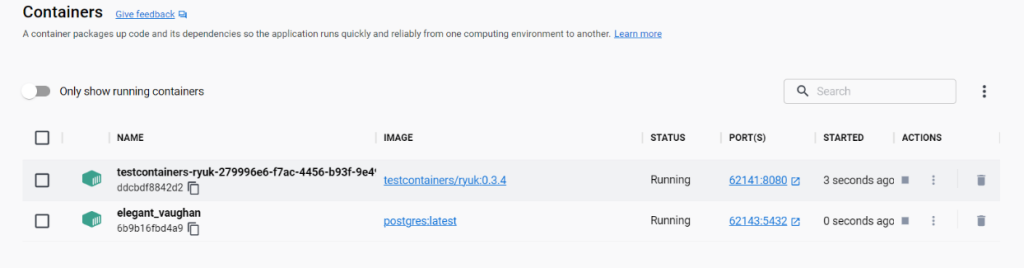

Here are the results of running the test class locally in the IDE. Note how the console logs clearly show that:

- Testcontainers connected to Docker,

- the privileged Testcontainers/ryuk container started,

- the PostgreSQL container started.

And most importantly, the execution time of both tests is only 0.5 sec!

To confirm this, I am also adding a view from Docker Desktop taken while the tests were running. You can clearly see that the required containers are in the running state.

You can see how Testcontainers allowed me to implement integration tests with minimal effort. The necessary components “get up” as Docker containers and are deleted after the tests have run.

There is one more Testcontainers feature that I find extremely useful: the options (flags) that I define in the JDBC URL in the application-test.yml configuration file:

- TC_INITSCRIPT

- TC_DAEMON

TC_INITSCRIPT

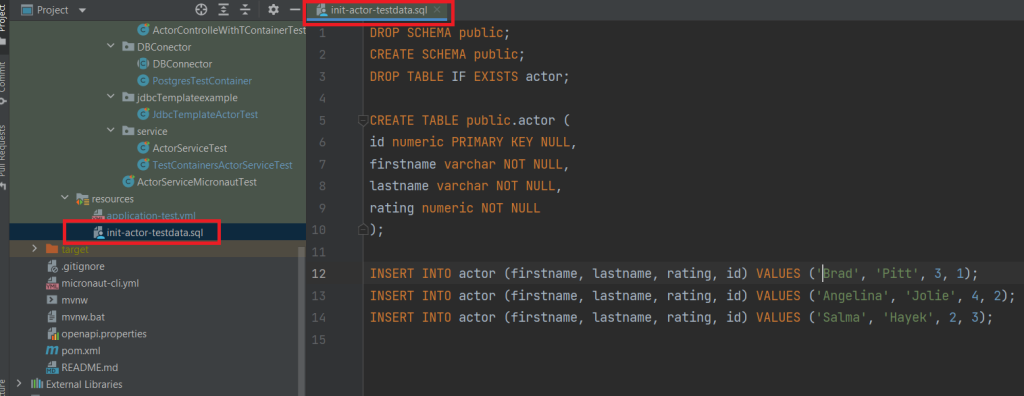

When we use a database, we naturally want the schema, the tables and the test data the test needs to already be in place. With the TC_INITSCRIPT option, we can point to an SQL script that runs right after the database container starts, but before our code is given a connection to it.

In my demo, I used such a script (init-actor-testdata.sql, referenced in the URL), so I didn’t have to populate my PostgreSQL database in the test code.

TC_DAEMON

By default, the database container is stopped as soon as the last connection to it is closed. Sometimes, however, we want the container to keep running until it is explicitly stopped or the JVM shuts down. To achieve this, add the TC_DAEMON parameter to the URL, as in the application-test.yml configuration shown earlier.
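The difference between the default behavior and TC_DAEMON=true can be modeled as reference counting. The Java sketch below is a simplified simulation with a hypothetical ManagedContainer class, not Testcontainers’ internal implementation: the container stops when the last open connection is closed, unless daemon mode keeps it alive until an explicit stop (or JVM shutdown).

```java
// Simplified simulation of the container lifecycle; class and method names are hypothetical.
public class DaemonModeDemo {

    static final class ManagedContainer {
        private final boolean daemon;
        private int openConnections;
        private boolean running;

        ManagedContainer(boolean daemon) {
            this.daemon = daemon;
        }

        void openConnection() {
            running = true; // the first connection starts the container
            openConnections++;
        }

        void closeConnection() {
            openConnections--;
            // Default mode: stop as soon as the last connection is closed.
            if (openConnections == 0 && !daemon) {
                running = false;
            }
        }

        void stop() {
            running = false; // an explicit stop (or JVM shutdown) also ends daemon mode
        }

        boolean isRunning() {
            return running;
        }
    }

    // Opens and closes two connections, then reports whether the container is still up.
    public static boolean runningAfterAllConnectionsClosed(boolean daemon) {
        ManagedContainer container = new ManagedContainer(daemon);
        container.openConnection();
        container.openConnection();
        container.closeConnection();
        container.closeConnection();
        return container.isRunning();
    }

    public static void main(String[] args) {
        System.out.println(runningAfterAllConnectionsClosed(false)); // false
        System.out.println(runningAfterAllConnectionsClosed(true));  // true
    }
}
```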

Summary

Testcontainers is a library for handling Docker containers from code. It is perfect for creating ephemeral components in automated integration tests. It can also run SQL init scripts, so that the containerized database already contains the schema and data needed for testing.

While working with Testcontainers, I noticed two inconveniences:

- you need some experience working with external Java libraries. Setting it up on your own is not trivial, just as Docker itself is not trivial. The entry threshold is therefore noticeable, but I can clearly see the library creators’ effort to make the job as simple as possible for developers,

- after working with Testcontainers all day and running integration tests repeatedly, I notice that Docker, especially Docker Desktop on Windows, can consume a large amount of computer resources (RAM, CPU). Quite often the containers fail to start and the test ends with an “Initialization error”. In that case, we can configure the resources allocated to Docker’s WSL 2 backend in the .wslconfig file. When the container initialization error keeps recurring, the most effective fix is simply to restart the computer.
