ChatGPT is a chatbot that has taken the internet by storm. It interacts with people in natural language, relying on natural language processing (NLP) and on machine learning models that learn human language patterns from large amounts of text and adapt to different conversation styles.

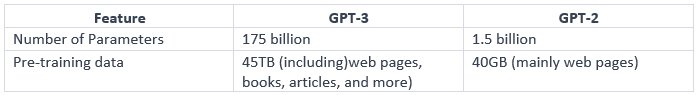

The core of ChatGPT is a model from the GPT-3 family, the third iteration of the generative pre-trained transformer (more precisely, OpenAI describes ChatGPT as fine-tuned from a GPT-3.5 series model). GPT-3 is significantly larger and more powerful than its predecessor: it has 175 billion parameters compared to 1.5 billion in GPT-2. The additional parameters bring much stronger text comprehension and generation capabilities.

In addition, GPT-3 was trained on a broader range of data, including books, articles, and websites, whereas GPT-2 was trained mainly on web pages. These factors have contributed to ChatGPT’s strong performance and make it one of the most capable chatbot technologies available.

However, what brings ChatGPT’s conversational capabilities to the next level is learning from human feedback through reinforcement learning, an AI technique in which an agent learns by receiving feedback in the form of rewards or penalties.

As a fine-tuning step, human reviewers rate and rank the model’s responses according to their quality. These judgments are turned into rewards or penalties that steer further training, which helps ChatGPT align with human preferences and mimic human language very accurately.
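To make the idea of a reward signal more concrete, here is a minimal sketch of one common way to turn human rankings into a training objective: a pairwise preference loss for a reward model, in the spirit of published work on learning from human feedback. Whether ChatGPT uses exactly this formulation is an assumption, and the function name and the example scores are purely illustrative.

```python
import math

def pairwise_preference_loss(reward_preferred, reward_rejected):
    """Loss that is small when the preferred response gets the higher reward.

    Computes -log(sigmoid(reward_preferred - reward_rejected)) in a
    numerically stable way. Both arguments are scalar scores that a
    (hypothetical) reward model assigned to two candidate responses.
    """
    diff = reward_preferred - reward_rejected
    if diff >= 0:
        return math.log1p(math.exp(-diff))
    return -diff + math.log1p(math.exp(diff))

# The reward model agrees with the human ranking: low loss.
agree = pairwise_preference_loss(2.0, 0.0)
# The reward model disagrees with the human ranking: high loss.
disagree = pairwise_preference_loss(0.0, 2.0)
```

Minimizing this loss over many ranked pairs pushes the reward model to score responses the way the reviewers did. Its scores can then play the role of the rewards and penalties described above.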

These capabilities have made it a popular choice for creating blog posts, songs, poetry and much more. However, despite its advanced capabilities, ChatGPT comes with certain limitations.

Limitations of ChatGPT

One of the major issues is that it can sometimes generate “hallucinations”: fluent, plausible-sounding responses that are factually incorrect. Hallucinations arise from the nature of large language models, which generate text that is statistically likely rather than grounded in verified facts. This can happen when the model does not have enough information to generate a valid response or when it relies too heavily on its own internal biases.

Another limitation of ChatGPT is its inability to handle custom datasets. The model is pre-trained on a vast amount of text data, but it cannot be extended or limited to work with just a specific data source. This can limit the chatbot’s effectiveness in business use cases where domain-specific knowledge is essential.

While ChatGPT has the potential to revolutionize the way we interact with chatbots, its limitations make it unsuitable for critical applications on its own. However, it’s still possible to build an accurate system with outstanding conversational capabilities.

A domain-specific search engine

As we know from above, GPT-3 is pre-trained on the “entire internet” and works well for general-purpose questions. But what about domain-specific tasks, for example in the legal or pharmaceutical field? Is it possible to build a highly accurate system relying on pre-trained Large Language Models?

The answer is yes, and it can be achieved with a hybrid approach that combines a semantic search engine with Question Answering. These two technologies can work together to provide more accurate, compute-efficient search results.

The goal of a semantic search engine is to retrieve documents that are semantically related to the query rather than simply matching specific query keywords. In other words, a semantic search engine can understand the meaning and intention of the queries and match them with relevant documents.
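As a rough illustration of matching by meaning rather than by keywords, the sketch below ranks documents by the cosine similarity between a query vector and document vectors. The three-dimensional vectors are hand-written, hypothetical stand-ins for the embeddings a trained encoder would produce, and all names are illustrative.

```python
import math

# Hand-written toy "embeddings" (hypothetical values, for illustration only).
# The dimensions loosely stand for: [medical, legal, cooking].
TOY_EMBEDDINGS = {
    "How should a doctor dose ibuprofen for children?": [0.9, 0.1, 0.0],
    "Termination clauses in employment contracts": [0.1, 0.9, 0.0],
    "A quick recipe for tomato soup": [0.0, 0.0, 1.0],
}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def semantic_search(query_vector, embeddings, top_k=1):
    """Return the top_k document texts whose vectors are closest to the query."""
    ranked = sorted(
        embeddings.items(),
        key=lambda item: cosine_similarity(query_vector, item[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:top_k]]

# Assumed embedding of the query "paediatric painkiller guidance".
# It shares no keyword with the medical document, but points the same way.
query_vector = [0.8, 0.2, 0.0]
best = semantic_search(query_vector, TOY_EMBEDDINGS)
```

Here `best` contains the ibuprofen document even though the query and the document have no words in common, which is the behaviour a keyword-only engine cannot provide.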

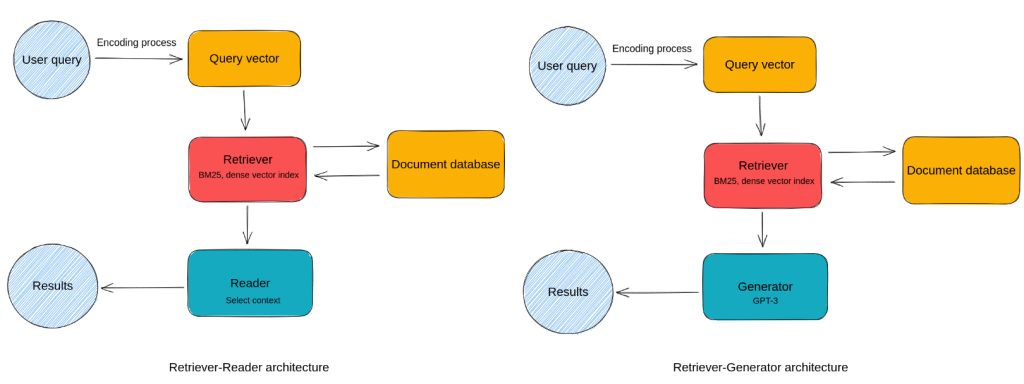

Meanwhile, question answering extracts the answer from the retrieved text passages. Modern Question Answering systems are usually based on the retriever-reader architecture, optionally extended with a ranker.

Retriever

The retriever is responsible for finding the text passages that are relevant to a given query and likely to contain the answer. There are two kinds of retrievers:

- sparse – uses word frequencies to construct sparse vectors and relies on methods like TF-IDF or BM25,

- dense – requires constructing and training a deep neural network that embeds both documents and queries in the same semantic space. In return, it is capable of capturing the user’s intention and the meaning of the query, rather than relying solely on keywords.
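The sparse variant is simple enough to sketch in plain Python. The example below builds TF-IDF vectors and ranks documents by cosine similarity to the query. It is a minimal sketch with a naive whitespace tokenizer and one of several common IDF smoothing variants; the function names and sample documents are illustrative assumptions, and a production system would normally use an established library or a BM25 implementation instead.

```python
import math
from collections import Counter

def tokenize(text):
    # Naive tokenizer: lowercase and split on whitespace.
    return text.lower().split()

def build_tfidf_index(documents):
    """Return (idf, vectors), where vectors[i] maps term -> TF-IDF weight."""
    tokenized = [tokenize(doc) for doc in documents]
    n_docs = len(tokenized)
    # Document frequency: in how many documents each term occurs.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    # Smoothed inverse document frequency (one common variant).
    idf = {t: math.log((1 + n_docs) / (1 + c)) + 1.0 for t, c in df.items()}
    vectors = []
    for tokens in tokenized:
        tf = Counter(tokens)
        vectors.append({t: (f / len(tokens)) * idf[t] for t, f in tf.items()})
    return idf, vectors

def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def sparse_retrieve(query, documents, top_k=2):
    """Rank documents by TF-IDF cosine similarity to the query."""
    idf, doc_vectors = build_tfidf_index(documents)
    q_tokens = tokenize(query)
    # Terms that never occur in the collection carry no weight.
    q_tf = Counter(t for t in q_tokens if t in idf)
    q_vec = {t: (f / len(q_tokens)) * idf[t] for t, f in q_tf.items()}
    scored = [(cosine(q_vec, vec), i) for i, vec in enumerate(doc_vectors)]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [(documents[i], score) for score, i in scored[:top_k]]

DOCS = [
    "aspirin reduces fever and relieves mild pain",
    "the contract must be signed by both parties",
    "ibuprofen relieves pain and reduces inflammation",
]
results = sparse_retrieve("what relieves pain", DOCS, top_k=2)
```

With these sample documents, the two medical sentences are returned and the contract sentence is not, because it shares no term with the query. A dense retriever would keep the same ranking step but replace the TF-IDF vectors with embeddings produced by a trained neural encoder.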

The reader, in turn, is responsible for extracting answers from the text passages provided by the retriever by selecting the text span that contains the exact answer.

However, such a system still doesn’t come with conversational capabilities and requires one more modification, such as the one described in Retrieval-Augmented Generation.
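Span selection in an extractive reader can be sketched as follows. A reader model typically assigns each token a score for being the start of the answer and a score for being its end, and the span with the highest combined score is returned. The scores below are hypothetical stand-ins for model outputs, and the function name and the `max_len` limit are illustrative assumptions.

```python
def best_answer_span(tokens, start_scores, end_scores, max_len=5):
    """Pick the span (start <= end) that maximizes start score + end score.

    tokens       -- list of passage tokens
    start_scores -- one score per token for being the answer start
    end_scores   -- one score per token for being the answer end
    max_len      -- maximum allowed span length in tokens
    """
    best = None
    best_score = float("-inf")
    for start in range(len(tokens)):
        # Only consider ends at or after the start, within max_len tokens.
        for end in range(start, min(start + max_len, len(tokens))):
            score = start_scores[start] + end_scores[end]
            if score > best_score:
                best_score = score
                best = (start, end)
    if best is None:
        return ""
    start, end = best
    return " ".join(tokens[start:end + 1])

# Question: "How many parameters does GPT-3 have?"
tokens = "GPT-3 has 175 billion parameters".split()
start_scores = [0.1, 0.0, 2.5, 0.3, 0.2]  # hypothetical model outputs
end_scores = [0.0, 0.1, 0.4, 2.0, 1.0]    # hypothetical model outputs
print(best_answer_span(tokens, start_scores, end_scores))  # 175 billion
```

The answer is always a literal substring of the passage, which is why a reader on its own is accurate but not conversational.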

Custom ChatGPT

Information Retrieval + Text Generation = Retrieval-Augmented Text Generation

Retrieval-augmented generation (RAG) models combine pre-trained dense retrieval and text generation models. In other words, the retrieved text passages are passed to a language generation model to enable conversation-like interactions. The concept was introduced in “Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks” (2020) by Patrick Lewis et al.

To simplify the idea, imagine that you are looking up a particular concept using your favourite search engine. It turns out that no single webpage answers your query, so you need to collect information from multiple sites by clicking on links, reading the content, and synthesizing the answer yourself. That is essentially what the RAG approach does.

To sum up, the above approach provides more accurate answers while still preserving great conversational capabilities. The RAG method modifies the retriever-reader architecture by incorporating a generation component that can deliver an experience similar to speaking with a human expert.
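The flow described above can be sketched in a few lines. This is a deliberately simplified illustration, not the method from the original paper: the retriever is a word-overlap stand-in for a dense retriever, the retrieved passages are simply placed in a prompt, and `generate` is a hypothetical callable standing in for any text generation model. All names and sample passages are assumptions.

```python
import re

def words(text):
    # Lowercased alphanumeric tokens, punctuation stripped.
    return set(re.findall(r"\w+", text.lower()))

def retrieve(query, passages, top_k=2):
    """Stand-in retriever: rank passages by the number of words shared with the query."""
    query_terms = words(query)
    scored = [(len(query_terms & words(p)), i) for i, p in enumerate(passages)]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    # Drop passages that share nothing with the query.
    return [passages[i] for score, i in scored[:top_k] if score > 0]

def build_prompt(query, retrieved):
    """Place the retrieved passages in front of the question."""
    context = "\n".join(f"[{n}] {p}" for n, p in enumerate(retrieved, start=1))
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}\nAnswer:"
    )

def answer(query, passages, generate, top_k=2):
    """Retrieve supporting passages, then let the generator write the reply."""
    retrieved = retrieve(query, passages, top_k)
    return generate(build_prompt(query, retrieved))

def placeholder_generator(prompt):
    # Placeholder for a real language model call (hypothetical).
    return f"(model output for a prompt of {len(prompt)} characters)"

PASSAGES = [
    "The notice period for termination is 30 days.",
    "Invoices are payable within 14 days.",
    "Termination requires written notice.",
]
QUESTION = "What is the notice period for termination?"
reply = answer(QUESTION, PASSAGES, generate=placeholder_generator)
```

Because the generator only sees passages selected from the domain-specific collection, its answer can be tied to those sources, which is the main practical difference from asking a general-purpose model directly.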

Summary

At Sii, we have extensive experience in semantic search engines and information retrieval systems. We are currently working on applying RAG approaches to push our search solutions one step further and enable human-like interactions. Unlike ChatGPT used on its own, our solutions ground their answers in retrieved source documents, which is designed to avoid hallucinations and provide highly accurate answers.

