The medical industry is a highly regulated environment. Every activity needs to be properly documented, and the manufacturer of a Medical Device needs to demonstrate compliance with multiple standards. Those standards usually provide a framework that, when followed, minimizes errors and potential harm to the end users (patients or caregivers).

For software development, IEC 62304 is receiving increasing attention as the way to show compliance for software validation activities. It is an international standard recognized in both the European and US markets. IEC 62304 comprises multiple activities, and source code development is only one step of many. This article focuses on the first activity required during Medical Software development: the basics of Risk Management.

IEC 62304 was written to work within existing Quality and Risk Management Systems, mainly with ISO 13485 and ISO 14971. The reader is assumed to have at least basic familiarity with those two standards.

Software Safety Classification

Let us imagine a generic Software System that is to be embedded into a Medical Device. If the manufacturer wishes to follow IEC 62304, a Software Safety Classification (hereafter SSC) needs to be established. The standard specifies three classes, based on the harm a software failure could cause:

- A: no injury or damage to health is possible,

- B: injury is possible, but not serious (reversible),

- C: death or serious injury is possible.

Unless otherwise documented, a Software System is to be classified as class C by default. Refer to figure 1 for a detailed algorithm for SSC determination. The higher the classification, the more detailed the documentation (and proof in the form of tests) the manufacturer must prepare to show that everything possible has been done to reduce the risk of hazardous situations or harm to the patient.
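
The classification logic can be sketched in a few lines of Python. This is only an illustration, assuming the three-question decision flow of the 2015 amendment to IEC 62304 (figure 1 may present it differently); the function and parameter names are hypothetical. An unanswered question is passed as `None` and falls back to class C, mirroring the "C by default" rule.

```python
from typing import Optional


def classify_software(hazard_possible: Optional[bool],
                      risk_acceptable_with_external_controls: Optional[bool],
                      serious_injury_possible: Optional[bool]) -> str:
    """Return the software safety class 'A', 'B' or 'C'.

    Each argument is the documented answer to one question of the
    assumed decision flow; None means "not documented".
    """
    # Can a failure of the Software System contribute to a hazardous situation?
    if hazard_possible is None:
        return "C"  # undocumented, so the default class applies
    if not hazard_possible:
        return "A"

    # Is the risk acceptable once risk controls OUTSIDE the software are counted?
    if risk_acceptable_with_external_controls is None:
        return "C"
    if risk_acceptable_with_external_controls:
        return "A"

    # What is the worst possible injury?
    if serious_injury_possible is None:
        return "C"
    return "C" if serious_injury_possible else "B"


print(classify_software(None, None, None))   # nothing documented
print(classify_software(True, False, False)) # reversible injury only
```

The order of the checks matters: a documented "no hazardous situation possible" ends the evaluation early, while any missing answer further down the flow pushes the result back to class C.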

Constraints and analysis

The standard raises two constraints:

A. “Probability of a software failure should be assumed to be 1”. If the manufacturer (or an auditor!) can describe a single-fault, worst-case scenario in which the software fails, that scenario shall be considered in the SSC evaluation.

B. “Only risk control measures not implemented within the Software System shall be considered”. For the purpose of SSC evaluation, the Software System cannot serve as the mitigation for a risk that it introduces itself. Of course, such software requirements should be added later on, but on their own they are not enough to reduce the classification.

During the analysis, multiple categories of issues should be considered and evaluated against the Intended Use and Foreseeable Misuse, for example:

- usability issues (an overly complex menu leads to an unwanted operation, which leads to harm),

- compatibility issues (a new software revision does not work correctly on old/legacy hardware),

- service-related issues (after software upload, the wrong configuration is selected by a technician),

- functional-hardware issues (unwanted movement of the device, caused by an external electromagnetic field),

- functional-software issues (unwanted movement of the device, caused by a mistake in the control algorithm); this includes software developers’ bugs and errors.

SSC evaluation is a good time to double-check whether all risks have been captured. A Top-Down Analysis or DFMEA can be used as an input to this step. Unfortunately, software or hardware engineers are sometimes not part of the Risk Management Team. As a result, a software fault can be classified as “customer annoyance” while in reality it can contribute to a hazardous situation that results in harm to a patient.

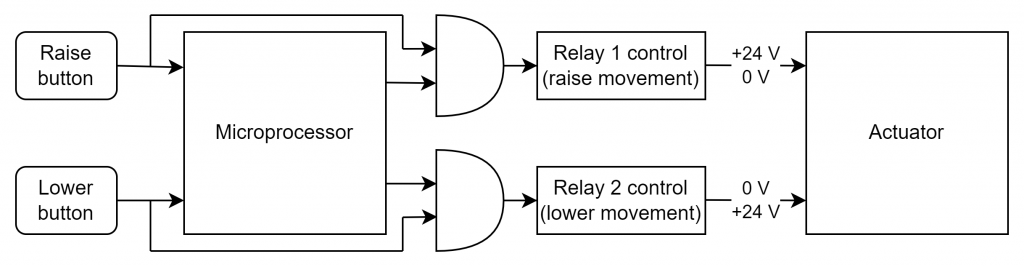

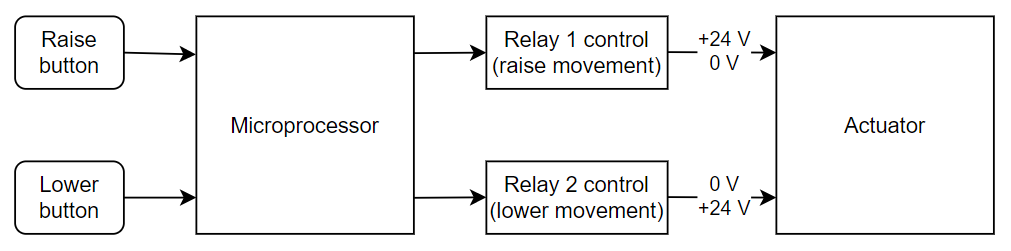

Exemplar Medical Device

To illustrate the issue, a more detailed description of a Medical Device is needed. The Software System will not be segregated. Let’s imagine a new device, introduced to the market, that helps the caregiver transport the patient from point A to point B. The software is responsible for receiving inputs from the caregiver and controlling the relays that turn on the actuator to raise or lower the patient. On the PCBA level, it was implemented in the most straightforward way (see figure 2).

The exemplar Software System could consist of the following functions:

- read the button state,

- control relay to create upwards or downwards movement.
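
A minimal sketch of these two functions is shown here, assuming one Raise and one Lower button, each mapped directly onto its relay. The type and function names are hypothetical and reading the physical inputs is left out. Simultaneous presses are passed through unchanged, a case the article returns to at the end.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Buttons:
    raise_pressed: bool
    lower_pressed: bool


@dataclass(frozen=True)
class Relays:
    up: bool
    down: bool


def control_step(buttons: Buttons) -> Relays:
    """One pass of the control loop: mirror each button onto its relay."""
    return Relays(up=buttons.raise_pressed, down=buttons.lower_pressed)


# The caregiver holds the Raise button: only the "up" relay is driven.
print(control_step(Buttons(raise_pressed=True, lower_pressed=False)))
```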

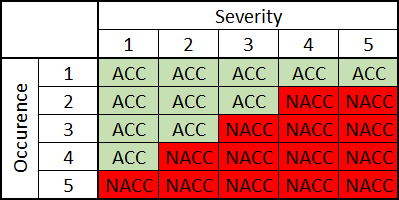

The Risk Management Team (which lacked software engineering skills) delivered the following table (table 1) and risks in the Top-Down risk assessment:

- PCBA/electrical fault, permanently gives control signal to the actuator resulting in an uncommanded upwards movement, the patient may fall, Occurrence 1, Severity 4,

- software fault: device not responding, customer annoyance, Occurrence 1, Severity 1.

Compared against table 1, all identified risks are acceptable. No faults other than customer annoyance are attributed to the Software System. In that case, can the Software System be assumed to be class A?

With the constraint “probability of a software failure should be assumed to be 1” in mind, a more detailed analysis could reveal one more fault. If the hardware can fail in a way that produces a Severity 4 event, is it theoretically possible that the software itself could create the same situation?

This is not about whether a software developer is good or bad; mistakes can happen anywhere. Even something as small as a misplaced closing bracket can affect functionality. Being tired and under time-to-market pressure does not help either. If the Software System was under-classified (class A instead of C), the functional tests may not cover this particular relation between functionality and risk. In the end, the patient may be affected.
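
To make the bracket example concrete, here is a small sketch. It assumes a hypothetical upper limit switch (`at_upper_limit`), which is not part of the exemplar device and is introduced only for illustration. The two functions differ in nothing but the position of one closing bracket.

```python
def relay_up_intended(raise_pressed: bool, at_upper_limit: bool) -> bool:
    # Drive upwards only while the button is held and the limit is not reached.
    return not (at_upper_limit) and raise_pressed


def relay_up_buggy(raise_pressed: bool, at_upper_limit: bool) -> bool:
    # The closing bracket moved to the end: the negation now covers everything.
    return not (at_upper_limit and raise_pressed)


# List every input combination where the two versions disagree.
mismatches = [
    (pressed, limit)
    for pressed in (False, True)
    for limit in (False, True)
    if relay_up_intended(pressed, limit) != relay_up_buggy(pressed, limit)
]
print(mismatches)
```

Both mismatches occur with the button released: the buggy version energizes the relay without any command, which is exactly the uncommanded upwards movement from the risk list. A functional test that only checks "button pressed, device moves" would pass on both versions.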

Risk assessment

The full risk assessment should look like this:

- PCBA/electrical fault, permanently gives control signal to the actuator resulting in an uncommanded upwards movement, the patient may fall, Occurrence 1, Severity 4,

- software fault: device not responding, customer annoyance, Occurrence 1, Severity 1,

- software fault, permanently gives control signal to the actuator resulting in an uncommanded upwards movement, the patient may fall, Occurrence ?, Severity 4.

From this point, multiple questions can arise:

- how does one calculate the Occurrence for software that is new to the market?

- how could including the phrase “the caregiver should always be present during patient transport” in the Intended Use help in reducing the Occurrence?

- can one claim that the Occurrence is 1 based on historical data (in-house or worldwide)? This is questionable, as the software is likely to be different (unless it is legacy).

- should the probability of “1” be taken as Occurrence 5, leaving Occurrence 5, Severity 4, which is not an acceptable risk?

- should one state that there is patient protection that prevents falling (confirmed by design verification)?
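
How strongly the verdict depends on the assumed Occurrence can be shown with a small sketch. The scoring rule here is entirely hypothetical: it assumes 1 to 5 scales and an acceptability limit on the product of Occurrence and Severity. It is not the content of table 1, only a stand-in that agrees with the two data points given in the text (Occurrence 1, Severity 4 is acceptable; Occurrence 5, Severity 4 is not).

```python
ACCEPTABLE_MAX_INDEX = 5  # hypothetical limit, NOT taken from table 1


def is_acceptable(occurrence: int, severity: int) -> bool:
    """Hypothetical rule: risk is acceptable if occurrence * severity <= limit."""
    if not (1 <= occurrence <= 5 and 1 <= severity <= 5):
        raise ValueError("occurrence and severity must be in the range 1..5")
    return occurrence * severity <= ACCEPTABLE_MAX_INDEX


# Verdict for the Severity 4 software fault under each candidate Occurrence.
verdicts = {occ: is_acceptable(occ, severity=4) for occ in range(1, 6)}
print(verdicts)
```

Under this assumed rule, only Occurrence 1 keeps the Severity 4 fault acceptable, so the whole assessment hinges on how that one number is justified.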

How deep should one go? What is foreseeable? Should the following theoretically possible situation be assumed:

- the patient is being placed on the Medical Device by the caregiver, who is fitting the patient protection gear,

- when least expected, the uncommanded movement starts,

- harm happens to both the patient and the caregiver (as a worst-case scenario)?

Risk Control Measures

It is a common mistake to write the software first and only then worry about the documentation and the standard. If the theoretical situation above were caught in the R&D phase, a solution could be provided at the cost of a few weeks of delay to the project.

On the other hand, if the issue persisted and surfaced on the market, a field action could be very costly (in both money and reputation). Additionally, it is the Medical Device manufacturer’s responsibility to reduce the risk as far as possible. How, for the given situation, could it be reduced further?

If the design is in the prototype phase, it is still possible to make changes to the PCBA. With input from the SSC, the hardware team can devise and implement so-called hardware mitigations. An example of such a mitigation is shown in figure 3. With simple logic circuits added, the software alone is no longer able to drive the actuator. Even if the software fails (under single fault) and the caregiver tries to use the device, the resulting movement will still be intentional, so no harm can be introduced.
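
The effect of such a mitigation can be modelled as a truth table. The sketch assumes that the added logic is an AND gate combining the raw button signal with the microprocessor output for the same direction; this is an assumption about figure 3, and the function name is hypothetical.

```python
def relay_energized(mcu_output: bool, button_pressed: bool) -> bool:
    """Model of the assumed hardware AND gate in front of one relay."""
    return mcu_output and button_pressed


# Columns: MCU output, button pressed, relay energized.
truth_table = [
    (mcu, button, relay_energized(mcu, button))
    for mcu in (False, True)
    for button in (False, True)
]
for row in truth_table:
    print(row)
```

The row of interest is a stuck-high microprocessor output with the button released: the relay stays off, so a single software fault cannot start a movement on its own.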

Before this mitigation can be relied on (to possibly claim a reduction of the Occurrence to 1), tests need to be performed and documented. They need to prove that, under all foreseeable conditions, the circuit is safe and provides the required functionality.

One might ask why a microprocessor should be used for such a trivial control system when the hardware mitigation could work without software. In real-world applications, the actuator might be driven by transistors rather than relays. A soft-start and soft-stop action is then desirable, so that the patient is not subjected to abrupt movements when the motion starts and stops.
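
A soft start and soft stop can be sketched as a rate limiter on the drive level. The example assumes the transistors are driven with a duty cycle between 0.0 and 1.0 that is updated once per control tick; the function name, the step size, and the tick-based timing are illustrative assumptions.

```python
def soft_ramp(current_duty: float, target_duty: float, max_step: float) -> float:
    """Move current_duty towards target_duty by at most max_step per tick."""
    if max_step <= 0:
        raise ValueError("max_step must be positive")
    delta = target_duty - current_duty
    if abs(delta) <= max_step:
        return target_duty  # close enough: land exactly on the target
    return current_duty + (max_step if delta > 0 else -max_step)


# Soft start: the button is pressed, so the target jumps from 0.0 to 1.0.
duty = 0.0
trace = []
for _ in range(4):
    duty = soft_ramp(duty, target_duty=1.0, max_step=0.25)
    trace.append(duty)
print(trace)
```

The same function handles the soft stop: once the button is released the target becomes 0.0 and the duty cycle steps down at the same rate.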

Also, the software might measure the actuator current to detect whether the medical device is stuck on an obstacle, avoiding damage in case of a caregiver error.
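
One possible shape of such a check is sketched here, assuming that an obstacle shows up as the actuator current staying above a limit for several consecutive samples. The limit, the sample values, and the function name are hypothetical; real values would come from the actuator data and from testing.

```python
from typing import Iterable


def obstacle_detected(samples_amps: Iterable[float],
                      limit_amps: float,
                      min_consecutive: int) -> bool:
    """True if the current exceeds limit_amps for min_consecutive samples in a row."""
    run = 0
    for sample in samples_amps:
        run = run + 1 if sample > limit_amps else 0
        if run >= min_consecutive:
            return True
    return False


# A single spike (for example at start-up) is ignored...
print(obstacle_detected([1.0, 5.0, 1.0, 1.2], limit_amps=4.0, min_consecutive=2))
# ...while a sustained over-current is reported.
print(obstacle_detected([1.0, 5.0, 5.5, 6.0], limit_amps=4.0, min_consecutive=2))
```

Requiring several consecutive samples is a design choice that trades reaction time for robustness against short current spikes.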

Summary

Software Risk Management, if done correctly at the beginning of the project, can save a lot of time and effort later, when safety-critical bugs become visible (it is not a question of “if” but “when”). If threats are identified early, development can be steered, through the requirements imposed on it, to prevent the hazardous situation from happening.

Every Software System needs to be treated individually. At Sii, we have experienced engineers who can help you with risk assessment, hardware and software development, creation of documentation, tests, certifications, and more. Feel free to contact us.

Bonus content

For the circuit presented in figure 3, what will happen if the user presses both the Raise and Lower buttons? It all depends on the hardware implementation, of course, but for the given example (one actuator driven by two relays), there is a high probability of short-circuiting the 24 V power supply.

This issue was missed by the Risk Management Team and came up as a complaint from the end customer.

Can we protect the circuit against this short circuit? Yes: the microprocessor could be given a software requirement not to drive the relays when both buttons are pushed. However, unless the hardware team solves this before the release, it would increase the SSC of this Software System.
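
Such a software requirement could look like the sketch here, which assumes that the software decides both relay commands from the two button states. The function name and the choice to switch both relays off on a conflict are illustrative assumptions.

```python
from typing import Tuple


def relay_commands(raise_pressed: bool, lower_pressed: bool) -> Tuple[bool, bool]:
    """Return (up_relay, down_relay); both stay off when the buttons conflict."""
    if raise_pressed and lower_pressed:
        return (False, False)  # conflicting request: drive nothing
    return (raise_pressed, lower_pressed)


print(relay_commands(True, False))  # normal raise request
print(relay_commands(True, True))   # both buttons pressed
```

Because this protection lives inside the Software System, it cannot be counted as an external risk control measure, which is why the classification would rise unless the hardware also prevents the short circuit.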

But this is a theoretical story for another article 🙂

***

If you are interested in the subject of healthcare and regulated processes, I encourage you to read the articles of our experts: Radiosurgical technology advances in the fight against cancer and Agile w procesach regulowanych
