Best practices for writing performance tests in k6 cover a range of techniques and approaches that make testing applications efficient, reliable, and effective. They also show how to create test scenarios that are easy to build and maintain.

Simplifying test scenarios

Up until now, we have focused on creating simple test scenarios. However, even with the reuse of many elements within a scenario, there is potential for further simplification.

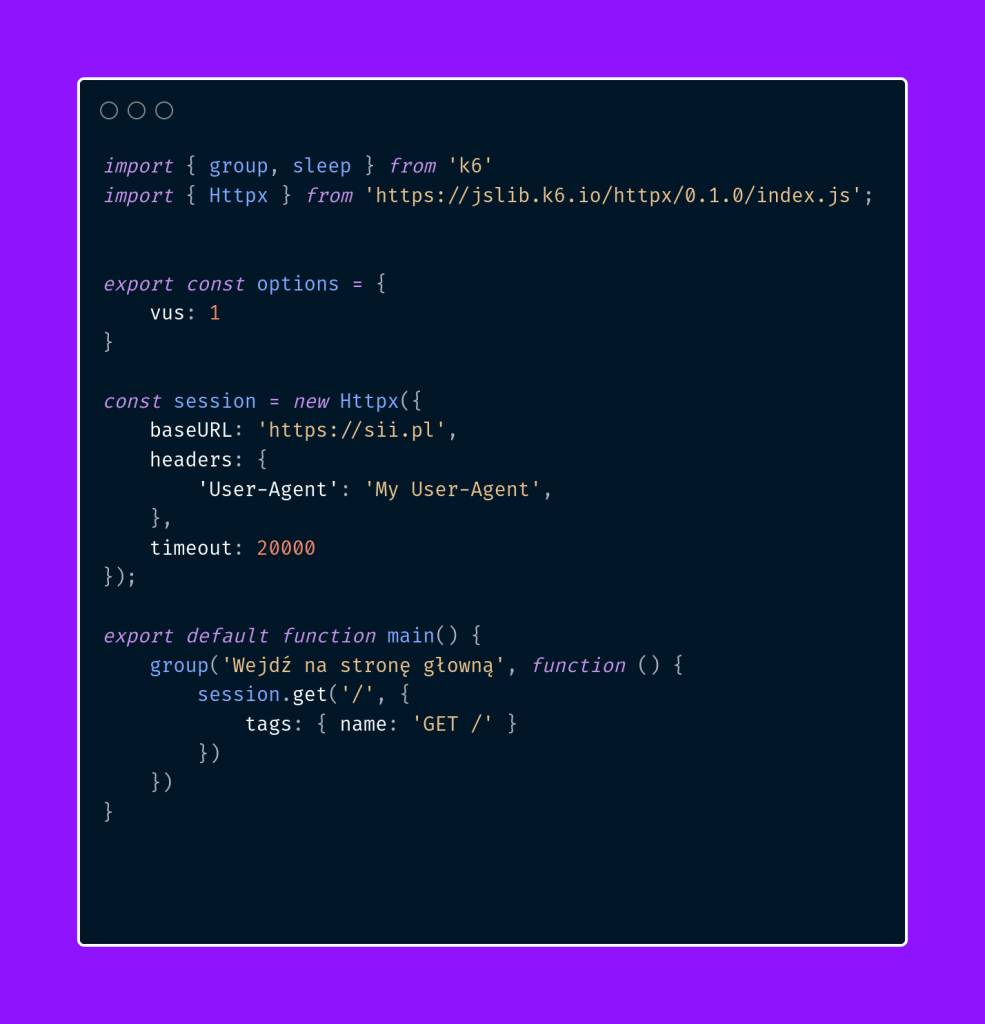

To achieve this, we will use the httpx module, a wrapper built on top of the k6 HTTP API. It changes the way test scenarios are written, making them more intuitive and easier to maintain, and using it is one of the many good practices in k6.

The core of using the httpx module is creating a user session, an approach reminiscent of tools like JMeter. On the session object, we can define fields such as the base URL or headers, which are then included in every subsequent request. We can also define global settings, such as the timeout, that is, how long a request waits for the application to respond before it fails.

Let’s take a look at an example test scenario.

In the above example, we no longer need to define headers or the full application URL each time.
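For reference, the module is typically imported from the k6 JavaScript library repository, for example `import { Httpx } from 'https://jslib.k6.io/httpx/0.1.0/index.js';`, and a session is created with `new Httpx({ baseURL, headers, timeout })`, after which calls such as `session.get('/')` take only the path. The merging a session performs can be illustrated without k6 at all. The sketch below is a hypothetical plain JavaScript model of that behavior, not the httpx implementation; `createSession`, `resolveRequest`, the base URL, and the path are placeholders.

```javascript
// Hypothetical model of a session: defaults are declared once and merged
// into every request built from it (this is NOT the httpx source code).
function createSession({ baseURL, headers = {}, timeout = 60000 }) {
  return {
    resolveRequest(method, path, params = {}) {
      return {
        method,
        // Only the path is given per request; the base URL is prepended here.
        url: baseURL.replace(/\/+$/, '') + '/' + path.replace(/^\/+/, ''),
        // Per-request headers override session-wide headers with the same name.
        headers: Object.assign({}, headers, params.headers),
        // A per-request timeout (ms) wins over the session-wide one.
        timeout: params.timeout !== undefined ? params.timeout : timeout,
      };
    },
  };
}

const session = createSession({
  baseURL: 'https://sii.pl',
  headers: { 'User-Agent': 'k6-performance-tests' },
  timeout: 20000,
});

const request = session.resolveRequest('GET', '/szkolenia/', {
  headers: { Accept: 'text/html' },
});
console.log(request.url); // https://sii.pl/szkolenia/
```

The same idea is what lets the scenario code shrink to a list of paths: everything shared lives in one place, so changing the environment or a header means editing a single object.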

Covering HTTP requests for static resources

Another useful practice is covering HTTP requests for static resources. The set of static files referenced by a webpage changes often, and if each of those requests is hardcoded, the test scenario has to be edited after every change. Therefore, we will create a utils directory to house our helper functions and place a file named helper.js with the following content in it:

The above file contains two functions. The first one is already familiar to us: it pauses the execution of the script. The second one extracts the URLs of all static resources referenced by the page and then sends a GET request to each of them. This function could be extended to tag these requests properly and to check whether each resource's domain is one we are interested in.
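The extraction step of the second function can be sketched as a pure function that scans the HTML of a page and collects `src` and `href` values pointing at typical static file types. The function name, the extension list, and the resolution rules are assumptions, not the original helper. In a k6 script it would be exported from utils/helper.js, called with the `body` of the page response, and each returned URL requested with `http.get`.

```javascript
// Hypothetical helper: collects absolute URLs of static resources referenced
// by a page. The extension list is an assumption; adjust it to the application.
const STATIC_EXTENSIONS = ['.js', '.css', '.png', '.jpg', '.jpeg', '.gif', '.svg', '.ico', '.woff', '.woff2'];

function extractStaticResources(html, origin) {
  // Captures the value of every src="..." or href='...' attribute.
  const attributePattern = /\b(?:src|href)\s*=\s*["']([^"']+)["']/gi;
  const urls = new Set(); // a Set drops resources referenced more than once
  let match;
  while ((match = attributePattern.exec(html)) !== null) {
    const reference = match[1].trim();
    // Ignore query strings and fragments when checking the file extension.
    const path = reference.split(/[?#]/)[0].toLowerCase();
    if (!STATIC_EXTENSIONS.some((extension) => path.endsWith(extension))) {
      continue;
    }
    if (/^https?:\/\//i.test(reference)) {
      urls.add(reference); // already absolute
    } else if (reference.startsWith('//')) {
      urls.add('https:' + reference); // protocol-relative, assumed to be HTTPS
    } else if (reference.startsWith('/')) {
      urls.add(origin + reference); // root-relative
    } else {
      // Simplification: resolved against the site root, not the page's own path.
      urls.add(origin + '/' + reference);
    }
  }
  return Array.from(urls);
}
```

A regular expression keeps the sketch free of dependencies; for real pages, k6's own `parseHTML` from the `k6/html` module is a more robust way to walk the document.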

We could also request static resources in parallel, up to six at a time. Since browsers typically open up to six connections per host, this would bring our tests even closer to real browser behavior.
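In k6, `http.batch()` issues several requests in parallel, and the `batchPerHost` option limits how many of them may target the same host at once (its default is 6). The sketch below prepares the argument for `http.batch()`: it keeps only URLs whose host is on an allow list and tags each request so that static traffic can be told apart in the results. `buildStaticBatch`, `hostOf`, the host list, and the `resource_type` tag are hypothetical names chosen for illustration.

```javascript
// Hypothetical helper: turns static resource URLs into the array form that
// k6's http.batch() accepts, i.e. [method, url, body, params] per request.
const STATIC_TAGS = { resource_type: 'static' }; // assumed custom tag

// Returns the lowercase host name of an absolute http(s) URL, or null.
function hostOf(url) {
  const match = /^https?:\/\/([^\/:?#]+)/i.exec(url);
  return match ? match[1].toLowerCase() : null;
}

function buildStaticBatch(urls, allowedHosts) {
  const hosts = allowedHosts.map((host) => host.toLowerCase());
  return urls
    .filter((url) => hosts.includes(hostOf(url))) // skip domains we are not interested in
    .map((url) => ['GET', url, null, { tags: Object.assign({}, STATIC_TAGS) }]);
}

// In a k6 script this could be used as follows (batchPerHost caps parallel
// requests per host at 6):
//   export const options = { batchPerHost: 6 };
//   http.batch(buildStaticBatch(staticUrls, ['sii.pl']));
```

Hosts are compared exactly, so subdomains such as a CDN host would have to be listed explicitly; this keeps third-party resources out of the test unless they are deliberately allowed.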

Creating a test suite

Based on the knowledge gained throughout the series, let’s create two test scenarios for the Sii application. Let’s assume we want to cover the following simplified test scenarios:

- Scenario named search-article.js – responsible for searching for articles on the page and then accessing the one of interest:

| Step number | Test step | Expected outcome |
|---|---|---|
| 1 | User navigates to the homepage | User is on the homepage |
| 2 | User searches for one of the five predefined articles | The searched article is displayed to the user |
| 3 | User clicks on the searched article | The article’s name is displayed to the user |

Implementation in k6:

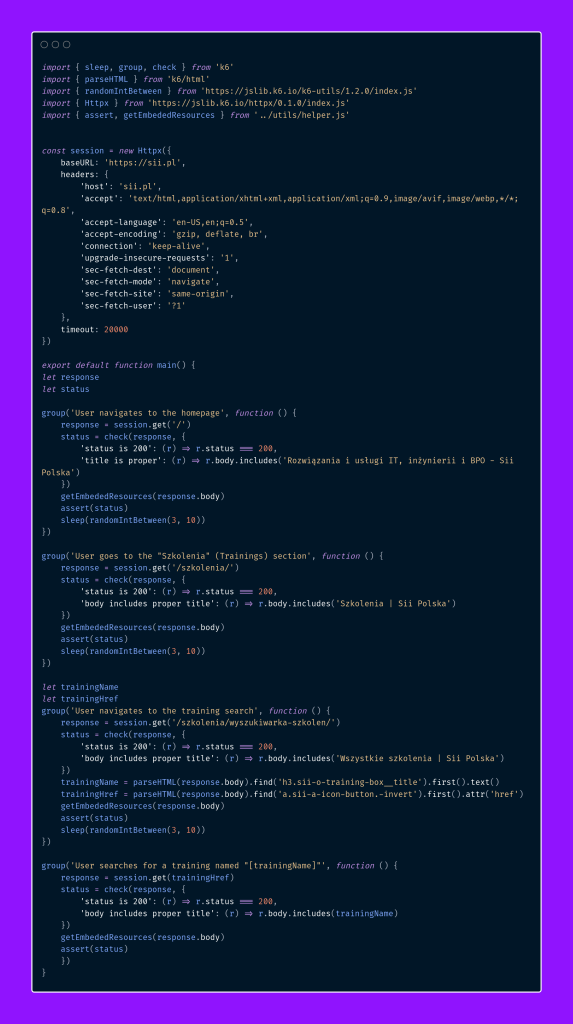
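The three steps from the table can also be sketched as a function that receives the session and the check function as parameters. Passing them in keeps the flow free of k6 imports, so in a k6 script it would be called as `searchArticle(session, check)` with an httpx session and `check` imported from `k6`. This is a hypothetical sketch, not the implementation shown in the image: the article titles, the WordPress-style search path `/?s=`, and the HTML patterns used to find the link and the heading are assumptions, not details of the Sii site.

```javascript
// Hypothetical sketch of the search-article.js flow. Titles, the search path
// ("/?s=") and the HTML patterns are assumptions, not taken from the Sii site.
const ARTICLES = ['Title 1', 'Title 2', 'Title 3', 'Title 4', 'Title 5'];

// Escapes characters that have a special meaning in regular expressions.
function escapeRegExp(text) {
  return text.replace(/[.*+?^${}()|[\]\\]/g, '\\$&');
}

// session: any object whose get(path) returns { status, body }, e.g. an httpx session.
// verify: a function shaped like k6's check(value, { 'check name': predicate }).
function searchArticle(session, verify, random = Math.random) {
  // Step 1: the user opens the homepage.
  const home = session.get('/');
  verify(home, { 'homepage is displayed': (r) => r.status === 200 });

  // Step 2: the user searches for one of the predefined articles.
  const title = ARTICLES[Math.floor(random() * ARTICLES.length)];
  const results = session.get('/?s=' + encodeURIComponent(title));
  const found = verify(results, {
    'searched article is displayed': (r) => r.status === 200 && r.body.includes(title),
  });
  if (!found) return false;

  // Step 3: the user clicks the link whose text is the article title.
  const linkPattern = new RegExp('<a[^>]+href="([^"]+)"[^>]*>\\s*' + escapeRegExp(title) + '\\s*</a>');
  const link = linkPattern.exec(results.body);
  if (!link) return false; // no matching link: stop this iteration
  const articlePath = link[1].replace(/^https?:\/\/[^\/]+/, ''); // keep only the path
  const article = session.get(articlePath);
  const headingPattern = new RegExp('<h1[^>]*>\\s*' + escapeRegExp(title));
  return verify(article, {
    "article's name is displayed": (r) => r.status === 200 && headingPattern.test(r.body),
  });
}
```

Injecting `random` makes the choice of article reproducible outside k6; inside a test run the default `Math.random` spreads iterations across all five articles.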

- Scenario named search-training.js – this scenario searches for a training of interest:

| Step number | Test step | Expected outcome |
|---|---|---|
| 1 | User navigates to the homepage | User is on the homepage |
| 2 | User goes to the “Szkolenia” (Trainings) section | User is in the “Szkolenia” section |
| 3 | User navigates to the training search | User is in the training search section |
| 4 | User searches for a training named “Zostań Analitykiem Biznesowym” | The offer for the training is displayed |

Implementation in k6:
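Because each scenario is specified as a table of steps with expected outcomes, the steps can also be kept as data and executed by one generic loop. The following is a hypothetical sketch of search-training.js written that way. The paths, expected texts, the `q` query parameter, and the names `TRAINING_STEPS` and `runSteps` are placeholders, not the actual Sii site structure or the original implementation. As in the k6 scripts of this series, `session` would be an httpx session and `verify` the `check` function from `k6`.

```javascript
// Hypothetical data mirroring the search-training.js table: each step requests
// a path and expects a text in the response. Paths and texts are placeholders.
const TRAINING_NAME = 'Zostań Analitykiem Biznesowym';
const TRAINING_STEPS = [
  { name: 'User is on the homepage', path: '/', expectedText: 'Sii' },
  { name: 'User is in the "Szkolenia" section', path: '/szkolenia/', expectedText: 'Szkolenia' },
  { name: 'User is in the training search section', path: '/szkolenia/wyszukiwarka/', expectedText: 'Szukaj' },
  {
    name: 'The offer for the training is displayed',
    path: '/szkolenia/wyszukiwarka/?q=' + encodeURIComponent(TRAINING_NAME),
    expectedText: TRAINING_NAME,
  },
];

// Runs the steps in order; verify is shaped like k6's check(value, sets).
// Stops at the first failed expectation and returns whether all steps passed.
function runSteps(session, verify, steps) {
  for (const step of steps) {
    const response = session.get(step.path);
    const passed = verify(response, {
      [step.name]: (r) => r.status === 200 && r.body.includes(step.expectedText),
    });
    if (!passed) return false;
  }
  return true;
}
```

Stopping at the first failed step is a design choice: later steps assume the user has reached the previous page, so continuing would mostly add follow-on failures. Using the step names from the table as check names also keeps the k6 summary readable against the test case specification.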

Scenario configurations

In previous parts of the series, we discussed the various types of executors available in k6. Where they are configured matters: if the executor is defined inside the scenario, changing its type means editing the scenario’s code. The other option is to duplicate the same test scenario for each executor.

Both solutions are impractical and cumbersome to maintain in the long run. Therefore, we use separate test configurations that can be applied to any scenario. Let’s take a look at two of them:

- smoke.json – a configuration responsible for a single run of the scenario.

- load.json – the target configuration, which increases the load as the test progresses:

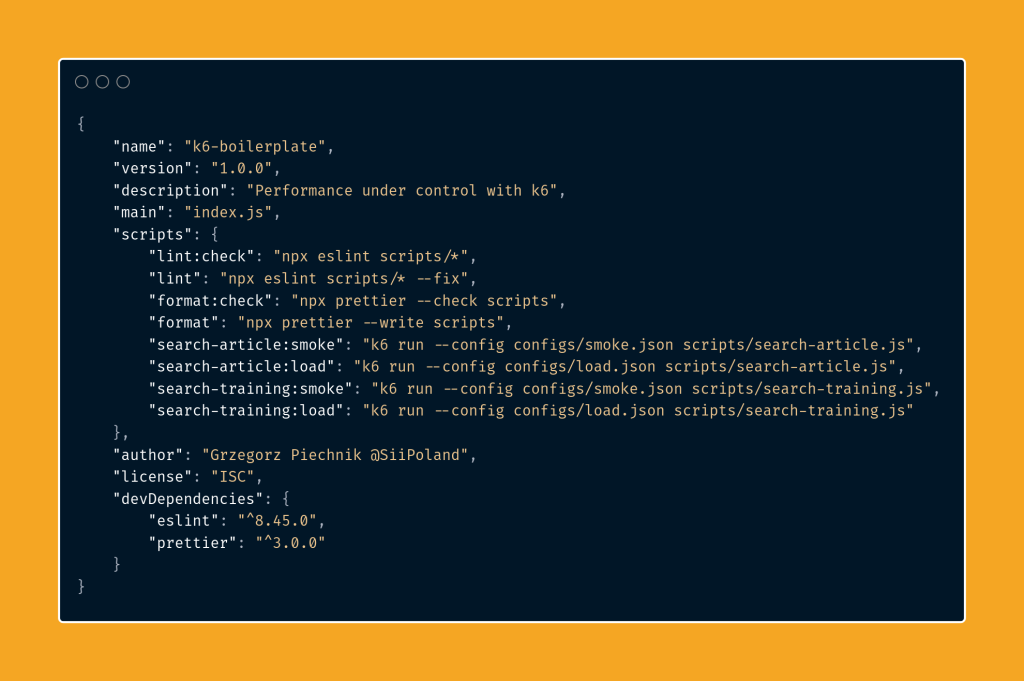
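Both files could look roughly like this, assuming they are passed to k6 with `k6 run --config <file> <script>`. The executors (`shared-iterations`, `ramping-vus`) and option names are standard k6 ones; the scenario names, VU counts, and durations are placeholder values, not the ones from the original project.

smoke.json:

```json
{
  "scenarios": {
    "smoke": {
      "executor": "shared-iterations",
      "vus": 1,
      "iterations": 1
    }
  }
}
```

load.json:

```json
{
  "scenarios": {
    "load": {
      "executor": "ramping-vus",
      "startVUs": 0,
      "stages": [
        { "duration": "1m", "target": 10 },
        { "duration": "3m", "target": 10 },
        { "duration": "1m", "target": 0 }
      ],
      "gracefulRampDown": "30s"
    }
  }
}
```

k6 gives options exported from the script precedence over those in a configuration file, so for this approach the scenario scripts should not export their own `scenarios`.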

Defining custom commands

The final step is to define commands that run the target scenarios. For this, we will use a mechanism we have already encountered: the scripts section of the package.json file.

We have defined four commands: the first two run each of the two scenarios with the single-iteration (smoke) configuration, and the last two run them with the load testing configuration.
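A possible shape of those four commands, assuming the configurations live in a configs directory and the scenarios in a scenarios directory; the script names and paths are hypothetical:

```json
{
  "scripts": {
    "smoke:search-article": "k6 run --config configs/smoke.json scenarios/search-article.js",
    "smoke:search-training": "k6 run --config configs/smoke.json scenarios/search-training.js",
    "load:search-article": "k6 run --config configs/load.json scenarios/search-article.js",
    "load:search-training": "k6 run --config configs/load.json scenarios/search-training.js"
  }
}
```

Each command can then be started with, for example, `npm run smoke:search-article`. The `type:scenario` naming keeps related commands next to each other in the file.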

One aspect that could be improved is defining the number of virtual users in the load configuration dynamically. This would make the configuration even more versatile, since the appropriate load for the first scenario is likely to differ from that for the second one. This could be achieved with environment variables.
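One way to do this, sketched under assumptions: since a JSON configuration file cannot read environment variables, the script itself loads the JSON with k6’s `open()` in the init context and rescales the `ramping-vus` stages so that the peak equals a value passed with `-e`. The variable names `CONFIG_PATH` and `TARGET_VUS` and the function `scaleToTargetVus` are hypothetical.

```javascript
// Hypothetical helper: rescales the stages of every ramping-vus scenario so
// that the highest stage target equals targetVus, keeping the profile's shape.
function scaleToTargetVus(config, targetVus) {
  if (!Number.isInteger(targetVus) || targetVus <= 0) {
    return config; // no valid override given: keep the JSON values unchanged
  }
  const scaled = JSON.parse(JSON.stringify(config)); // deep copy, input stays untouched
  for (const scenario of Object.values(scaled.scenarios || {})) {
    if (scenario.executor !== 'ramping-vus' || !Array.isArray(scenario.stages)) continue;
    const peak = Math.max(...scenario.stages.map((stage) => stage.target));
    if (peak <= 0) continue;
    scenario.stages = scenario.stages.map((stage) =>
      Object.assign({}, stage, { target: Math.round((stage.target * targetVus) / peak) }),
    );
  }
  return scaled;
}

// In a k6 scenario script (init context) this could be wired up as:
//   const baseConfig = JSON.parse(open(__ENV.CONFIG_PATH));
//   export const options = scaleToTargetVus(baseConfig, parseInt(__ENV.TARGET_VUS, 10));
// and run with:
//   k6 run -e CONFIG_PATH=../configs/load.json -e TARGET_VUS=25 scenarios/search-article.js
```

Because the script now exports `options`, this replaces `--config` for that run rather than combining with it. Scaling proportionally, instead of overwriting every target, preserves the ramp-up shape defined in load.json, and the smoke configuration passes through unchanged.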

Summary

In the sixth article of our series, we discussed best practices for writing performance test scenarios in k6. We created sample test scenarios and configured them to enhance the versatility of our testing framework.

Our journey with k6 is far from over – we can continue developing the framework and tailor it to our needs to ensure the quality and reliability of our applications. In the next part, we will delve into using Grafana, InfluxDB, and Docker.

***

If you haven’t had a chance to read the articles in the series yet, you can find them here:

- Performance under control with k6 – introduction

- Performance under control with k6 – recording, parametrization, and running the first test scenario

- Performance under control with k6 – metrics, quality thresholds, tagging

- Performance under control with k6 – additional configurations, types of scenario models and executors

- Performance under control with k6 – framework initialization

In addition, I encourage you to check out the project’s repository.
