Pseudonymization and anonymization of Personally Identifiable Information (PII) are often confused. Both techniques are relevant in the context of the General Data Protection Regulation (GDPR). The confusion arises because, in legal terms, personal data is not only information that directly identifies a person: data that identifies a person only indirectly, for example in combination with other information, is also considered personal data.

Pseudonymization is a method that allows you to replace original values (for example, an e-mail address or a name) with an alias or pseudonym. It is a reversible process that de-identifies data but allows for re-identification later on if necessary.

Anonymization is a technique that irreversibly alters data so an individual is no longer identifiable directly or indirectly.

Although both are used to secure data, they are not the same. The following figure illustrates the distinctions between the two techniques:

Compared to anonymization, pseudonymization is the more sophisticated option, since it leaves you with a key (a cryptographic key) to “unlock” the data. This way, the data is no longer directly identifiable, yet it is not anonymized either, so it retains its original value for analysis.

Anonymized (non-personal) data, by contrast, is aggregated or altered to the point that particular events can no longer be associated with a specific individual. This increases data privacy and helps organizations comply with GDPR and other data protection rules.

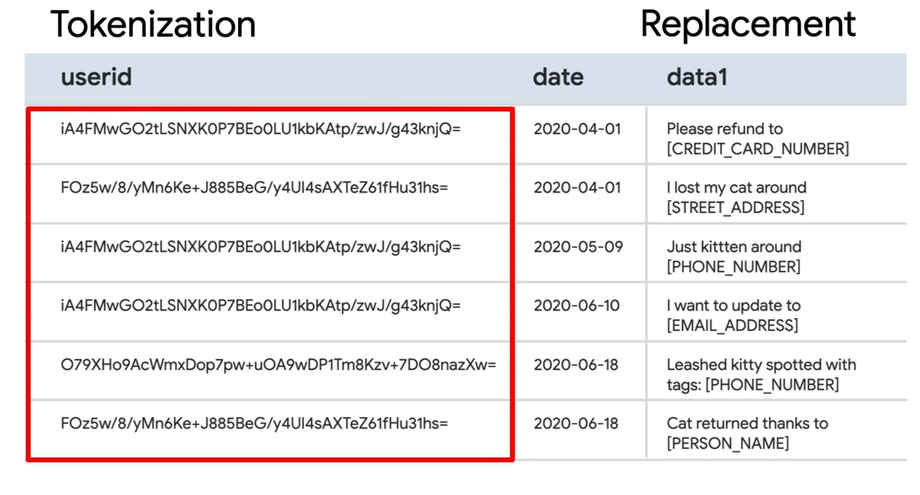

To demonstrate pseudonymization, consider tokenizing each row such that it may be returned to its original value. To demonstrate anonymization, try changing all values in a certain column to ******** or null, thereby making the data meaningless.
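As a minimal sketch of that demonstration (plain Python, no Cloud DLP involved), the snippet below pseudonymizes an `email` column by swapping each value for a random token kept in a lookup table, and anonymizes a `name` column by overwriting it with `None`. The sample records, the `tok_` prefix, and the helper names are assumptions made purely for illustration.

```python
import secrets


def pseudonymize(rows, column, vault):
    """Replace each value in `column` with a token; `vault` maps token -> original."""
    seen = {original: token for token, original in vault.items()}
    result = []
    for row in rows:
        original = row[column]
        if original not in seen:
            token = "tok_" + secrets.token_hex(8)
            seen[original] = token
            vault[token] = original
        result.append({**row, column: seen[original]})
    return result


def reidentify(rows, column, vault):
    """Reverse the pseudonymization; only possible for whoever holds the vault."""
    return [{**row, column: vault[row[column]]} for row in rows]


def anonymize(rows, column):
    """Irreversibly drop the column's content."""
    return [{**row, column: None} for row in rows]


# Hypothetical sample data
records = [
    {"name": "Ada", "email": "ada@example.com", "orders": 3},
    {"name": "Bob", "email": "bob@example.com", "orders": 1},
    {"name": "Ada", "email": "ada@example.com", "orders": 2},
]
vault = {}
pseudonymized = pseudonymize(records, "email", vault)
anonymized = anonymize(pseudonymized, "name")
```

The pseudonymized rows can still be grouped by `email` because equal inputs share a token, while nothing can restore the `name` column once it has been nulled.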

A more detailed comparison can be seen in this table:

| Pseudonymization | Anonymization |
| --- | --- |
| Protects data at a record level | Protects entire datasets (columns) |
| Re-identification possible | Re-identification not possible |
| Still considered PII | Not considered PII according to GDPR |
| Business value retained | Business value lost |
| More sophisticated, harder to implement | Simpler, easier to implement |

De-identification and re-identification with Cloud DLP

De-identification and re-identification of PII are critical processes for organizations that handle sensitive data. Google Cloud offers a couple of powerful solutions to this problem. One of them is the Cloud Data Loss Prevention (DLP) service, which is now part of Sensitive Data Protection. It provides a suite of tools for de-identifying and re-identifying PII.

The de-identification process involves removing or obfuscating any information that can be used to identify an individual while still preserving the utility of the data. Cloud DLP offers several techniques for de-identification, including redaction, replacement, masking, tokenization, or bucketing.
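To give a feel for three of these techniques, here is a small local emulation of redaction, replacement, and bucketing in plain Python. It does not call Cloud DLP: the e-mail regular expression is a deliberately simplistic stand-in for DLP's infoType detection, and the function names and the `[EMAIL_ADDRESS]` label are assumptions for illustration.

```python
import re

# Simplistic e-mail pattern, used here only as a stand-in for infoType detection
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def redact(text: str) -> str:
    """Redaction: remove the sensitive value entirely."""
    return EMAIL_RE.sub("", text)


def replace_with_label(text: str, label: str = "[EMAIL_ADDRESS]") -> str:
    """Replacement: substitute the sensitive value with a fixed label."""
    return EMAIL_RE.sub(label, text)


def bucket_age(age: int, size: int = 10) -> str:
    """Bucketing: generalize an exact number into a range."""
    low = (age // size) * size
    return f"{low}-{low + size - 1}"


print(redact("Contact: jane@example.com"))              # Contact: 
print(replace_with_label("Contact: jane@example.com"))  # Contact: [EMAIL_ADDRESS]
print(bucket_age(37))                                   # 30-39
```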

Different transformations can be applied to various data objects, including unstructured text, records, and images. They help organizations effectively de-identify PII within their datasets, ensuring compliance with data privacy regulations and minimizing the risk of unauthorized exposure.

Most of these methods are designed to be applied to values in tabular data that are tagged with a specific infoType. You can create and manage such configurations as de-identification templates within DLP. Templates let you reuse the same set of transformations across requests and keep configuration separate from the code that issues the requests.

DLP’s deidentifyConfig supports several kinds of transformations, such as infoTypeTransformations and recordTransformations, which in turn wrap primitiveTransformations such as masking or tokenization. These features allow businesses to protect their private data at scale while following data privacy laws and lowering the risk of unauthorized access.

For example, here is a de-identification template that masks all characters in email addresses, except for “.” and “@”:

    "deidentifyConfig":{
      "infoTypeTransformations":{
        "transformations":[
          {
            "infoTypes":[
              {
                "name":"EMAIL_ADDRESS"
              }
            ],
            "primitiveTransformation":{
              "characterMaskConfig":{
                "maskingCharacter":"#",
                "reverseOrder":false,
                "charactersToIgnore":[
                  {
                    "charactersToSkip":".@"
                  }
                ]
              }
            }
          }
        ]
      }
    },
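To make the effect of this configuration concrete, the following plain-Python function emulates the same masking rule locally. It is only an approximation for illustration, not the DLP implementation; the function name and sample address are assumptions.

```python
def mask_characters(value: str, masking_character: str = "#", characters_to_skip: str = ".@") -> str:
    """Replace every character with the masking character, except the skipped ones."""
    return "".join(
        ch if ch in characters_to_skip else masking_character
        for ch in value
    )


print(mask_characters("jane.doe@example.com"))  # ####.###@#######.###
```

The structure of the address stays recognizable, which is often enough for debugging or display purposes, while the actual characters are gone.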

Re-identification, on the other hand, involves matching de-identified data with its original source. This is useful in cases where the de-identified data needs to be re-associated with its original source for analysis or other purposes.
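A re-identification call with the Python client could look roughly like the sketch below. It assumes that the data was de-identified with a reversible transformation, that the same template can be referenced as `reidentify_template_name`, and that the caller is allowed to use the underlying key. The helper names are hypothetical, and the table headers must match the field names the template's transformations refer to.

```python
def build_reidentify_request(project, location, template_name, headers, rows):
    """Build a DLP re-identification request for tabular data as a plain dict."""
    return {
        "parent": f"projects/{project}/locations/{location}",
        "reidentify_template_name": template_name,
        "item": {
            "table": {
                "headers": [{"name": header} for header in headers],
                "rows": [
                    {"values": [{"string_value": str(value)} for value in row]}
                    for row in rows
                ],
            }
        },
    }


def reidentify(request):
    """Send the request to Cloud DLP and return the restored rows as lists of strings."""
    # Imported lazily so the request builder works without the client library installed
    from google.cloud import dlp_v2

    client = dlp_v2.DlpServiceClient()
    response = client.reidentify_content(request=request)
    return [[value.string_value for value in row.values] for row in response.item.table.rows]
```

Keeping the request builder separate from the API call makes it easy to inspect or unit-test the payload before anything is sent to the service.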

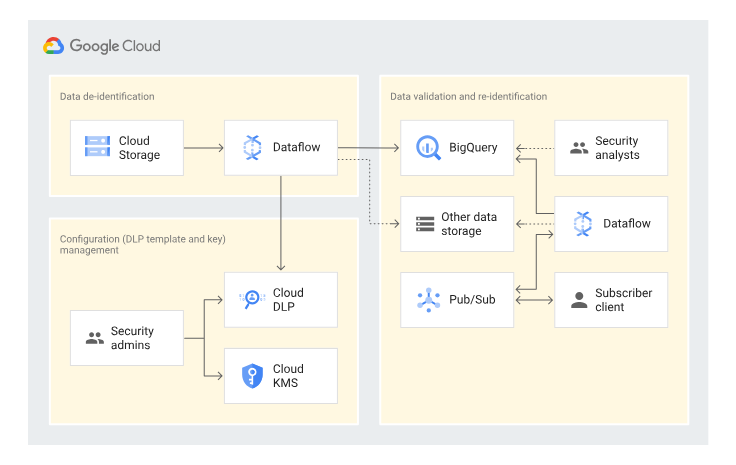

Reference architecture of data pseudonymization using Google Cloud products

This article explores using Cloud DLP with Cloud Dataflow (Google’s data processing engine) to pseudonymize PII with a de-identification template based on deterministic cryptographic tokenization.

Dataflow is a fully managed service for executing data processing pipelines. It supports parallel execution of tasks, auto-scaling, and seamless integration with other Google Cloud services, making it an ideal choice for processing large volumes of data.

Here, we use it to read the data from a source that can be anything: object storage, an RDBMS, a NoSQL database, a data warehouse, or an API. Then, we use a de-identification template secured with a crypto key to perform a recordTransformation on our data. Dataflow calls the Cloud DLP API with the data to be transformed and receives it back obfuscated by one of the available techniques.

In this example, we use tokenization, which is one way to pseudonymize data. It replaces the original data with a deterministic token, preserving referential integrity: the same input always produces the same token. You can use the token to join data or in aggregate analysis. You can reverse, or re-identify, the data using the same key you used to create the token. That key is a data encryption key that has itself been encrypted (wrapped) with a key stored in Cloud Key Management Service (KMS), which is why it is called a wrapped key.

You can think of the whole process as replacing the identifying values in every row with tokens. You can still query and aggregate the data, but you no longer see the original values unless you have permission to re-identify them.
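The two properties that matter here, determinism and reversibility with the same key, can be illustrated with a toy construction built only from the Python standard library. This is not what Cloud DLP runs internally and must not be used to protect real data; it is a sketch in the style of a synthetic-IV scheme, with HMAC-SHA256 standing in for a real block cipher, and all names are assumptions.

```python
import base64
import hashlib
import hmac

TAG_LEN = 16  # bytes of the synthetic IV, which doubles as an integrity tag


def _subkey(key: bytes, label: bytes) -> bytes:
    """Derive independent sub-keys for the tag and the keystream."""
    return hmac.new(key, label, hashlib.sha256).digest()


def _keystream(key: bytes, iv: bytes, length: int) -> bytes:
    """Generate `length` pseudo-random bytes from the key and IV (counter mode)."""
    blocks = []
    counter = 0
    while 32 * len(blocks) < length:
        blocks.append(hmac.new(key, iv + counter.to_bytes(4, "big"), hashlib.sha256).digest())
        counter += 1
    return b"".join(blocks)[:length]


def _xor(data: bytes, stream: bytes) -> bytes:
    return bytes(a ^ b for a, b in zip(data, stream))


def tokenize(key: bytes, value: str) -> str:
    """Deterministic: the same key and value always give the same token."""
    data = value.encode("utf-8")
    tag = hmac.new(_subkey(key, b"mac"), data, hashlib.sha256).digest()[:TAG_LEN]
    cipher = _xor(data, _keystream(_subkey(key, b"enc"), tag, len(data)))
    return base64.urlsafe_b64encode(tag + cipher).decode("ascii")


def detokenize(key: bytes, token: str) -> str:
    """Reversible: only the key that created the token can restore the value."""
    raw = base64.urlsafe_b64decode(token.encode("ascii"))
    tag, cipher = raw[:TAG_LEN], raw[TAG_LEN:]
    data = _xor(cipher, _keystream(_subkey(key, b"enc"), tag, len(cipher)))
    expected = hmac.new(_subkey(key, b"mac"), data, hashlib.sha256).digest()[:TAG_LEN]
    if not hmac.compare_digest(tag, expected):
        raise ValueError("token was not created with this key")
    return data.decode("utf-8")
```

Because equal inputs map to equal tokens, two tables tokenized with the same key can still be joined on the tokenized column, which is exactly the referential integrity described above.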

By adhering to Google’s best practices and leveraging DLP templates, organizations can effectively manage and secure their sensitive data at scale while also ensuring compliance with data privacy regulations and minimizing the risk of unauthorized exposure.

Provisioning of the DLP template

First, we must create a blueprint for our data pseudonymization techniques. For this example, I’ll utilize the official Google Cloud Terraform provider.

The following HCL code demonstrates how to create a Cloud DLP de-identification template that can be used to pseudonymize data:

    resource "google_data_loss_prevention_deidentify_template" "dlp_template_tokenization" {
      parent       = "projects/${var.project_id}/locations/${local.regions.primary}"
      display_name = "dlp_pii_tokenization"

      deidentify_config {
        record_transformations {
          field_transformations {
            # Fields (columns) the transformation applies to
            fields {
              name = "PII_FIELD"
            }

            primitive_transformation {
              # Deterministic encryption: the same input and key always yield the same token
              crypto_deterministic_config {
                crypto_key {
                  kms_wrapped {
                    # Base64-encoded wrapped data encryption key, read from Secret Manager
                    wrapped_key     = data.google_secret_manager_secret_version.dlp_wrapped_key.secret_data
                    # Cloud KMS key that unwraps the data encryption key
                    crypto_key_name = "projects/${var.project_id}/locations/${local.regions.primary}/keyRings/${var.project_id}/cryptoKeys/dlp-${var.environment}"
                  }
                }
              }
            }
          }
        }
      }
    }

We apply a recordTransformation of the deterministic crypto type, which requires a crypto key and a wrapped key. A wrapped key is a data encryption key that has been encrypted with the crypto key and then base64-encoded, while the crypto key is a Cloud KMS key (software, HSM, or EKM protected) that DLP uses to decrypt, or unwrap, the wrapped key. It is up to you how you create them.
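One possible way to produce a wrapped key is sketched below: generate a random data encryption key locally, encrypt it with the Cloud KMS key, and base64-encode the ciphertext. The 32-byte key size and the helper names are assumptions, so check the key requirements of the transformation you use. The KMS call requires the `google-cloud-kms` package and suitable permissions.

```python
import base64
import secrets


def generate_data_key(num_bytes: int = 32) -> bytes:
    """Create a random data encryption key (32 bytes = 256 bits, an assumed size)."""
    return secrets.token_bytes(num_bytes)


def wrap_key(data_key: bytes, encrypt) -> str:
    """Encrypt the data key with `encrypt` and return the ciphertext as base64 text."""
    return base64.b64encode(encrypt(data_key)).decode("ascii")


def kms_encrypt(crypto_key_name: str):
    """Return an `encrypt` callable backed by the given Cloud KMS key."""
    # Imported lazily; only needed when Cloud KMS is actually called
    from google.cloud import kms

    client = kms.KeyManagementServiceClient()

    def encrypt(plaintext: bytes) -> bytes:
        response = client.encrypt(request={"name": crypto_key_name, "plaintext": plaintext})
        return response.ciphertext

    return encrypt


# Usage (hypothetical key name held in `crypto_key_name`):
# wrapped_key = wrap_key(generate_data_key(), kms_encrypt(crypto_key_name))
```

The resulting string is what would be stored in Secret Manager and referenced as `wrapped_key` in the template; the plaintext data key itself should never be persisted.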

The complete list of possible transformations may be found in the Cloud DLP API Reference. [7] After applying this with Terraform, the Cloud DLP de-identification template will appear in your GCP project.

Data processing with Apache Beam Python SDK

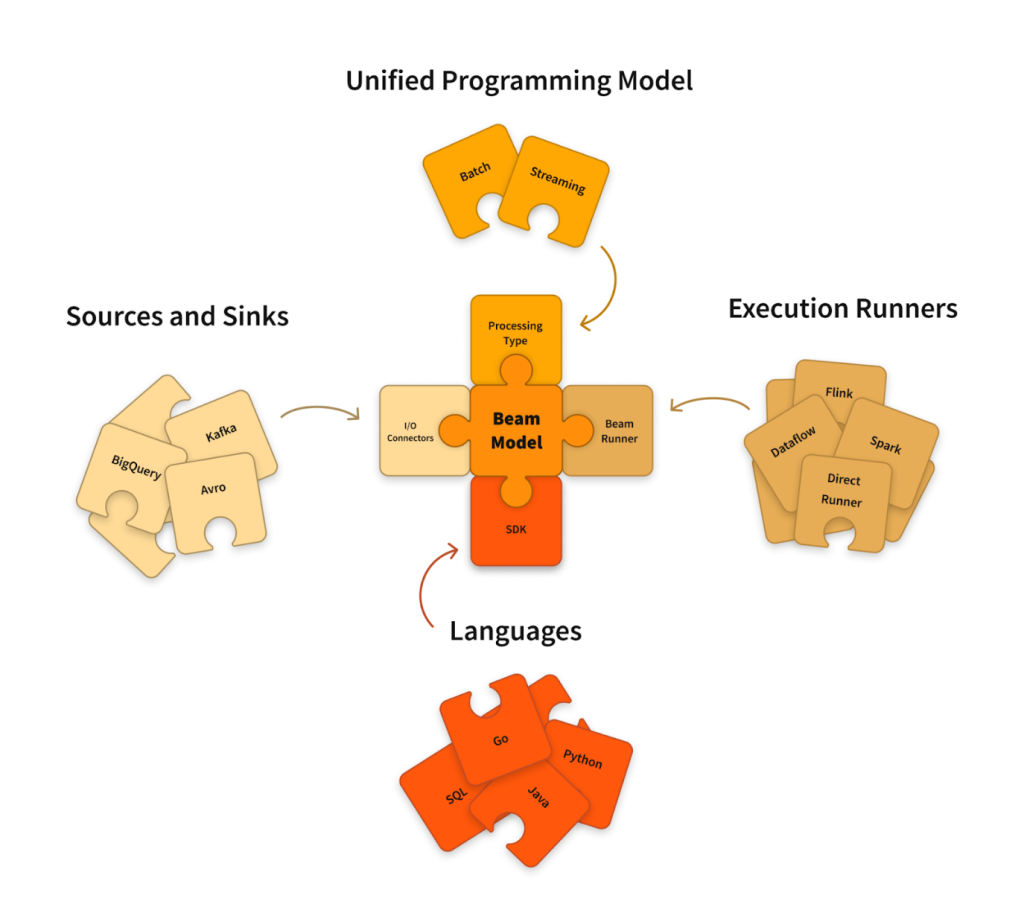

After deploying DLP-related resources, we can finally utilize Dataflow to pseudonymize the PII data. Creating and executing jobs in Dataflow requires developing code using the Apache Beam framework.

Apache Beam is an open-source, unified model for defining batch and streaming data-parallel processing pipelines. Using one of the open-source Beam SDKs, you build a program that defines the pipeline. The pipeline is then executed by one of Beam’s supported distributed processing back-ends, which include Apache Flink, Apache Spark, and Google Cloud Dataflow. [8]

The following Apache Beam Python SDK sketch demonstrates how to achieve pseudonymization on the fly while migrating data from one source to another:

    import logging

    import apache_beam as beam
    from apache_beam.io.gcp.bigquery import BigQueryDisposition, WriteToBigQuery
    from apache_beam.io.jdbc import ReadFromJdbc
    from apache_beam.options.pipeline_options import PipelineOptions
    from google.cloud import dlp_v2
    from google.cloud.dlp_v2 import types


    class CustomPipelineOptions(PipelineOptions):
        @classmethod
        def _add_argparse_args(cls, parser):
            # Plain arguments are used because the values are read while the
            # pipeline graph is built, which is how Flex templates pass parameters.
            # JDBC parameters
            parser.add_argument("--jdbc_url", type=str, help="JDBC connection URL")
            parser.add_argument("--driver_class_name", type=str, help="JDBC driver class name")
            parser.add_argument("--username", type=str, help="JDBC connection username")
            parser.add_argument("--password", type=str, help="JDBC connection password")
            parser.add_argument("--src_table_name", type=str, help="Source table name")
            # BigQuery parameters
            parser.add_argument("--project_id", type=str, help="GCP project ID")
            parser.add_argument("--dataset_id", type=str, help="BigQuery dataset ID")
            parser.add_argument("--dest_table_name", type=str, help="BigQuery destination table name")
            # DLP parameters ("--project" is already defined by Beam's own Google Cloud options)
            parser.add_argument("--dlp_project", type=str, help="GCP project ID for DLP")
            parser.add_argument("--location", type=str, help="DLP service region/location")
            parser.add_argument("--deidentify_template_name", type=str, help="Full resource name of the DLP de-identify template")
            parser.add_argument("--columns_to_pseudonymize", type=str, help="Comma-separated list of columns to pseudonymize")


    class PseudonymizeData(beam.DoFn):
        def __init__(self, project, location, deidentify_template_name, columns_to_pseudonymize):
            self.project = project
            self.location = location
            self.deidentify_template_name = deidentify_template_name
            self.columns_to_pseudonymize = columns_to_pseudonymize
            self.client = None

        def setup(self):
            """Create the DLP client once per DoFn instance, in the worker's context."""
            self.client = dlp_v2.DlpServiceClient()

        def deidentify_table(self, table):
            """De-identify a batch of rows (a list of dicts) using the DLP API."""
            parent = f"projects/{self.project}/locations/{self.location}"
            columns = list(table[0].keys())
            # The template transforms the field named "PII_FIELD", so every column
            # to pseudonymize is sent to DLP under that header name.
            headers = [
                types.FieldId(name="PII_FIELD" if col in self.columns_to_pseudonymize else col)
                for col in columns
            ]
            rows = [
                types.Table.Row(values=[types.Value(string_value=str(row[col])) for col in columns])
                for row in table
            ]
            table_item = types.ContentItem(table=types.Table(headers=headers, rows=rows))
            deidentify_request = types.DeidentifyContentRequest(
                parent=parent,
                deidentify_template_name=self.deidentify_template_name,
                item=table_item,
            )
            response = self.client.deidentify_content(request=deidentify_request)
            # Map the returned values back to the original column names
            return [
                {col: value.string_value for col, value in zip(columns, row.values)}
                for row in response.item.table.rows
            ]

        def process(self, element):
            """Process one batch of rows and emit the de-identified rows one by one."""
            yield from self.deidentify_table(element)


    def main(argv=None):
        pipeline_options = PipelineOptions(argv)
        custom_options = pipeline_options.view_as(CustomPipelineOptions)

        with beam.Pipeline(options=pipeline_options) as p:
            (
                p
                | "ReadFromJdbc" >> ReadFromJdbc(
                    table_name=custom_options.src_table_name,
                    driver_class_name=custom_options.driver_class_name,
                    jdbc_url=custom_options.jdbc_url,
                    username=custom_options.username,
                    password=custom_options.password,
                )
                # ReadFromJdbc emits one named-tuple row at a time: convert rows to
                # dicts and group them into small batches, one DLP request per batch.
                | "RowToDict" >> beam.Map(lambda row: row._asdict())
                | "BatchRows" >> beam.BatchElements(min_batch_size=10, max_batch_size=100)
                | "PseudonymizeData" >> beam.ParDo(
                    PseudonymizeData(
                        project=custom_options.dlp_project,
                        location=custom_options.location,
                        deidentify_template_name=custom_options.deidentify_template_name,
                        columns_to_pseudonymize=custom_options.columns_to_pseudonymize.split(","),
                    )
                )
                # The destination table is assumed to exist already; creating it
                # from the pipeline would additionally require a schema.
                | "WriteToBigQuery" >> WriteToBigQuery(
                    project=custom_options.project_id,
                    dataset=custom_options.dataset_id,
                    table=custom_options.dest_table_name,
                    create_disposition=BigQueryDisposition.CREATE_IF_NEEDED,
                    write_disposition=BigQueryDisposition.WRITE_TRUNCATE,
                    method=WriteToBigQuery.Method.FILE_LOADS,
                )
            )


    if __name__ == "__main__":
        logging.getLogger().setLevel(logging.INFO)
        main()

In this Python SDK code sample, we build a pipeline that reads a table from a PostgreSQL database over JDBC. The PseudonymizeData DoFn leverages the Cloud DLP API to tokenize the data: its deidentify_table() method calls the DLP client's deidentify_content() function, which uses the supplied de-identification template to de-identify items. We pseudonymize fields from all rows based on the columns_to_pseudonymize pipeline option. We receive tokenized data and write it to a BigQuery table.
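The conversion between pipeline rows and the table structure sent to DLP is the least obvious part, so here it is in isolation as a plain-Python sketch with dicts in place of the DLP types. The function names and sample row are assumptions; the idea of renaming the selected columns to `PII_FIELD`, so that they match the field name in the template, follows the pipeline above.

```python
PII_FIELD = "PII_FIELD"  # field name targeted by the de-identification template


def rows_to_table(rows, columns_to_pseudonymize):
    """Turn a list of row dicts into a DLP-style table, renaming the PII columns."""
    columns = list(rows[0].keys())
    headers = [
        {"name": PII_FIELD if col in columns_to_pseudonymize else col}
        for col in columns
    ]
    table_rows = [
        {"values": [{"string_value": str(row[col])} for col in columns]}
        for row in rows
    ]
    return columns, {"headers": headers, "rows": table_rows}


def table_to_rows(columns, table):
    """Map a DLP-style table back to row dicts using the original column names."""
    return [
        {col: value["string_value"] for col, value in zip(columns, row["values"])}
        for row in table["rows"]
    ]


# Hypothetical sample row
columns, table = rows_to_table([{"id": 1, "email": "ada@example.com"}], ["email"])
print([header["name"] for header in table["headers"]])  # ['id', 'PII_FIELD']
```

Note that every value is sent as a string, so the rows coming back from DLP contain strings too; the destination table's schema decides how they are typed on load.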

The following steps are intentionally left out: building a Dataflow template, either classic or flex, creating a Google Cloud Storage (GCS) bucket to store temporary data, and creating a BigQuery dataset and table. You should execute these steps in conjunction with your existing configuration and requirements.

Triggering and scheduling a Cloud Dataflow job with Apache Airflow

Once you have built a Dataflow template, nothing prevents you from scheduling it to migrate a daily batch of freshly pseudonymized data to your destination.

A plethora of options for services and solutions are available to you. I suggest the tried-and-true method of orchestrating ETL processes using Apache Airflow. You can use Cloud Composer, a managed Airflow service on Google Cloud.

Here’s an example of how to trigger a Dataflow application with a Flex template using an official Apache Airflow operator:

    from datetime import datetime, timedelta

    from airflow.decorators import dag
    from airflow.providers.google.cloud.operators.dataflow import DataflowStartFlexTemplateOperator
    from airflow.timetables.trigger import CronTriggerTimetable

    from dependencies.callbacks import on_failure_function  # project-specific failure callback

    # REGION, PROJECT_ID, DATAFLOW_BUCKET, TEMPLATE_VERSION, TEMPLATE_NAME,
    # DF_TASK_TIMEOUT (a timedelta), dataflow_job_name, dataflow_parameters and
    # dataflow_environment are assumed to be defined elsewhere in this module.

    default_args = {
        "owner": "Someone",
        "email": "someone@example.com",  # placeholder address
        "on_failure_callback": on_failure_function,
        "retries": 1,
        "retry_delay": timedelta(minutes=1),
    }


    @dag(
        dag_id="dataflow_pii",
        default_args=default_args,
        schedule=CronTriggerTimetable("0 0 * * *", timezone="UTC"),  # daily at midnight UTC
        start_date=datetime(2024, 1, 1),
        catchup=False,
        is_paused_upon_creation=True,
        tags=["dataflow", "pii", "example"],
    )
    def dataflow_pii_dag():
        DataflowStartFlexTemplateOperator(
            task_id="run_dataflow_pii_job",
            location=REGION,
            project_id=PROJECT_ID,
            body={
                "launchParameter": {
                    "jobName": dataflow_job_name,
                    "parameters": dataflow_parameters,
                    "environment": dataflow_environment,
                    "containerSpecGcsPath": f"gs://{DATAFLOW_BUCKET}/tag/{TEMPLATE_VERSION}/{TEMPLATE_NAME}.json",
                }
            },
            cancel_timeout=360,
            deferrable=True,
            append_job_name=True,
            execution_timeout=DF_TASK_TIMEOUT,
        )


    dataflow_pii_dag()

In this example, we define a Directed Acyclic Graph (DAG) that schedules the pseudonymization process to run daily. The DataflowStartFlexTemplateOperator is used to trigger the Dataflow job. Various other operators can run Dataflow jobs in different ways.

The launchParameter dict contains not only the job name but also parameters for the template, such as source and destination configuration, addresses for sensitive values stored in Secret Manager, and any other parameters that may be used as application variables.

The Dataflow environment includes values set at runtime, such as the maximum number of workers, the Compute Engine availability zone, the service account used to run the job, the machine type, the networking configuration for VMs, and more. containerSpecGcsPath is the Cloud Storage path to the serialized Flex template JSON file.
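As an illustration, the request body could be assembled along these lines. All concrete values (bucket, table, subnet, service account, and template names) are hypothetical placeholders, the `build_launch_body` helper is an assumption, and the parameter names simply reuse a few pipeline options from the Beam example.

```python
def build_launch_body(job_name, template_path, parameters, environment):
    """Assemble the Flex template launch request body."""
    return {
        "launchParameter": {
            "jobName": job_name,
            "containerSpecGcsPath": template_path,
            # Flex template parameters are passed as strings
            "parameters": {key: str(value) for key, value in parameters.items()},
            "environment": environment,
        }
    }


body = build_launch_body(
    job_name="dataflow-pii",
    template_path="gs://my-dataflow-bucket/tag/v1/pii-template.json",
    parameters={
        "jdbc_url": "jdbc:postgresql://10.0.0.5:5432/shop",
        "src_table_name": "customers",
        "dataset_id": "analytics",
        "dest_table_name": "customers_pseudonymized",
        "location": "europe-west1",
        "deidentify_template_name": (
            "projects/my-project/locations/europe-west1/deidentifyTemplates/my-template"
        ),
        "columns_to_pseudonymize": "email,phone",
    },
    environment={
        "maxWorkers": 4,
        "machineType": "n2-standard-2",
        "serviceAccountEmail": "dataflow-runner@my-project.iam.gserviceaccount.com",
        "subnetwork": "regions/europe-west1/subnetworks/my-subnet",
        "ipConfiguration": "WORKER_IP_PRIVATE",
        "tempLocation": "gs://my-dataflow-bucket/tmp",
    },
)
```

Credentials are deliberately absent from the parameters here; passing a reference to a secret rather than the secret itself keeps sensitive values out of job metadata.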

Summary

By following these steps, organizations can leverage Cloud DLP and Dataflow to pseudonymize PII data securely and at scale. The use of Terraform for resource provisioning and Dataflow for data processing enables efficient and consistent management of sensitive information. This is feasible because of de-identification templates, which are an excellent method to keep track of how sensitive data is handled.

Apache Airflow, a popular open-source workflow management platform, can schedule the Dataflow job. By doing so, organizations can automate the pseudonymization process and ensure the secure handling of sensitive data.

Sources

1. What are the Differences Between Anonymisation and Pseudonymisation, Privacy Company
2. Pseudonymization according to the GDPR
3. De-identify and re-identify sensitive data
4. De-identifying sensitive data
5. De-identification and re-identification of PII in large-scale datasets using Sensitive Data Protection
6. Terraform provider for Google Cloud
7. DeidentifyConfig
8. Apache Beam Overview
9. Google Cloud Dataflow Operators
