Although AWS SageMaker is a great platform that organizes and greatly simplifies all data science-related activities, the first experience of deploying custom machine learning models can be cumbersome. Fortunately, we’ve been down this road many times, and we’ll guide you through your first deployment, avoiding all the potential pitfalls.

AWS SageMaker is a powerful tool for developing and deploying machine learning models. It makes it possible to scale models, develop them with a variety of frameworks, and integrate them with other AWS services and features such as model monitoring. However, its versatility and scalability come at a price: it requires extensive knowledge of the platform, which makes it hard for beginners to get started. Things get even more complex when you try to deploy custom models, especially those developed outside the platform.

This article is here to help you with the above. We are going to show how you can easily deploy custom ML models using Docker, FastAPI, and AWS SageMaker in 9 simple steps:

- Train the model wherever it suits you.

- Dockerize the inference code.

- Push the Docker image to Amazon Elastic Container Registry (ECR).

- Save the model artifacts in an S3 bucket.

- Create an Amazon SageMaker model that refers to the Docker image in ECR.

- Create an Amazon SageMaker endpoint configuration that specifies the model and the resources to be used for inference using the AWS SDK (boto3).

- Create an endpoint configuration.

- Deploy your model.

- Use Boto3 to test your inference.

Train the model locally or in a cloud-based environment

For the sake of simplicity, we are going to use a pre-trained Hugging Face model: a fine-tuned BERT model that is ready to use for Named Entity Recognition. It recognizes four types of entities: Locations (LOC), Organisations (ORG), Persons (PER), and Miscellaneous (MISC). In reality, this could be any model you’d like to deploy.

Dockerize the inference code

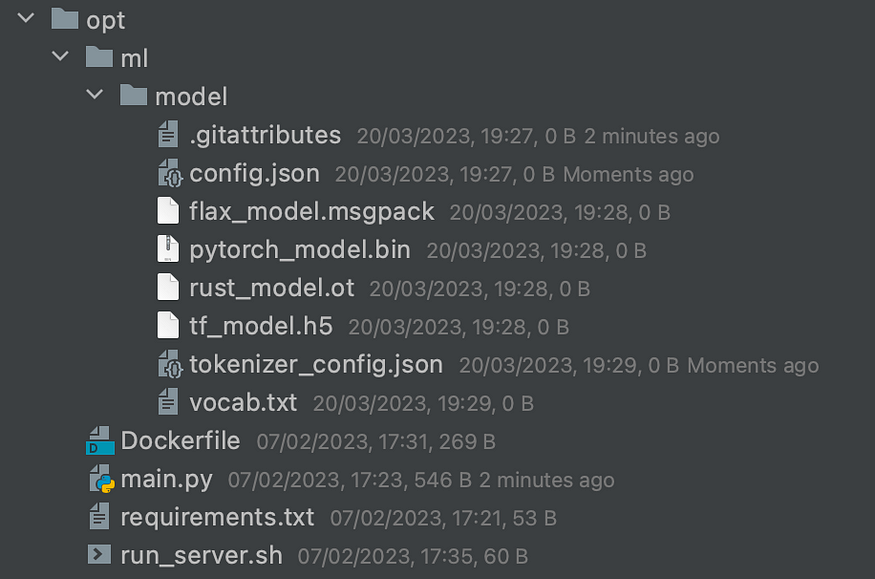

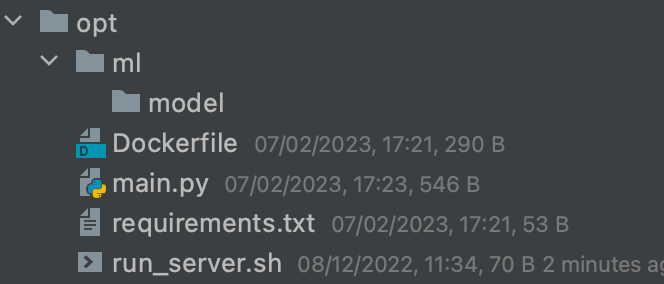

First of all, you need to create a specific directory and file structure (see below) within your working directory.

The opt/ directory will be your root. main.py should contain the inference code, which reads the model from a specific path, ml/model, and exposes a REST API for performing predictions. We are using FastAPI, a high-performance web framework for developing APIs with Python. Let’s take a look at the script, especially the invocations and ping entrypoints that are required by AWS SageMaker.

# main.py
import logging

from fastapi import FastAPI, Request
from transformers import pipeline

MODELS_PATH = "ml/model/"

app = FastAPI()
classifier = None  # set once at startup by load_model()


@app.get('/ping')
async def ping():
    return {"message": "ok"}


@app.on_event('startup')
def load_model():
    # Load the model into memory once and keep it in a module-level variable
    global classifier
    classifier = pipeline("ner", model=MODELS_PATH)
    logging.info("Model loaded.")


@app.post('/invocations')
async def invocations(request: Request):
    json_payload = await request.json()
    inputs = [record["scope"] for record in json_payload]
    output = [{"prediction": classifier(text)} for text in inputs]
    return output

- ping() answers the health check. AWS SageMaker sends GET requests to /ping to verify that the container is up and able to serve; a response with HTTP status 200 tells it the service is healthy.

- load_model() is an event handler that runs on the service ‘startup’ event, so it is executed exactly once, when the application starts. It’s a great place to load the model into memory, and this is what we do.

- invocations() is the REST POST entrypoint where you put your inference code, that is, everything required for your model to make predictions. It is declared with async def because it awaits request.json(): while the request body is being read, the event loop stays free to handle other requests instead of blocking.
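The request and response shapes handled by invocations() can be tried out without FastAPI or the model. The snippet below is a minimal sketch, not the service code: `fake_classifier` is a hypothetical stand-in for the transformers pipeline, and `predict_batch` is a name introduced here only for illustration.

```python
import json


def fake_classifier(text):
    """Hypothetical stand-in for the NER pipeline: tags every capitalized word."""
    return [{"word": w, "entity": "I-MISC"} for w in text.split() if w[:1].isupper()]


def predict_batch(json_payload, classifier):
    """Mirror the body of invocations(): one prediction entry per input record."""
    inputs = [record["scope"] for record in json_payload]
    return [{"prediction": classifier(text)} for text in inputs]


if __name__ == "__main__":
    # The endpoint receives a JSON list of records, each with a "scope" field.
    body = json.dumps([{"document_id": "1", "scope": "Ron is Harry's best friend"}])
    print(predict_batch(json.loads(body), fake_classifier))
```

The output list keeps the order of the input records, so a caller can match predictions back to document_id values by position.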

Then we have the Dockerfile. Docker executes its instructions step by step and builds an image from the indicated base image and requirements; SageMaker later starts containers from that image.

# Dockerfile
FROM python:3.8-slim

# copy the code and requirements
COPY main.py /opt/
COPY requirements.txt /opt/
COPY run_server.sh /opt/serve

# SageMaker starts the container with the "serve" command, so the script must be executable
RUN chmod +x /opt/serve

WORKDIR /opt

# install required python stuff
RUN pip install -r requirements.txt

# setup the executable path
ENV PATH="/opt/:${PATH}"

requirements.txt lists the packages that are required and installed while building the Docker image.

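# requirements.txt
fastapi==0.89.1
uvicorn==0.20.0
transformers==4.25.1
# deep learning backend required by the transformers pipeline (pin it to the version you tested with)
torch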

Finally, there is run_server.sh. It starts the machine learning service hosting your model and inference code. The host parameter specifies the IP address the server listens on, and the port parameter specifies the port number. Don’t modify them: SageMaker requires the container to accept requests on port 8080, and 0.0.0.0 makes the server reachable from outside the container.

#!/bin/sh
# run_server.sh
uvicorn main:app --proxy-headers --host 0.0.0.0 --port 8080
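Before pushing the image anywhere, it can help to call both routes on a locally running container. The sketch below uses only the Python standard library and assumes the container was started locally with port 8080 published and the model directory mounted at /opt/ml/model; `BASE_URL` and all function names are illustrative, not part of the service.

```python
import json
import urllib.request

BASE_URL = "http://localhost:8080"  # assumption: container port 8080 is published locally


def build_ping_request(base_url=BASE_URL):
    """GET /ping, the health-check route that SageMaker calls."""
    return urllib.request.Request(f"{base_url}/ping", method="GET")


def build_invocation_request(records, base_url=BASE_URL):
    """POST /invocations with a JSON list of records, as main.py expects."""
    body = json.dumps(records).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/invocations",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request, timeout=30):
    """Send a prepared request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def smoke_test():
    """Call both routes once; raises if the server is unreachable or returns an error."""
    print(send(build_ping_request()))
    records = [{"document_id": "1", "scope": "Ron is Harry's best friend"}]
    print(send(build_invocation_request(records)))
```

Calling `smoke_test()` while the container is running should print the ping message followed by the predictions; a connection error usually means the port was not published or the server did not start.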

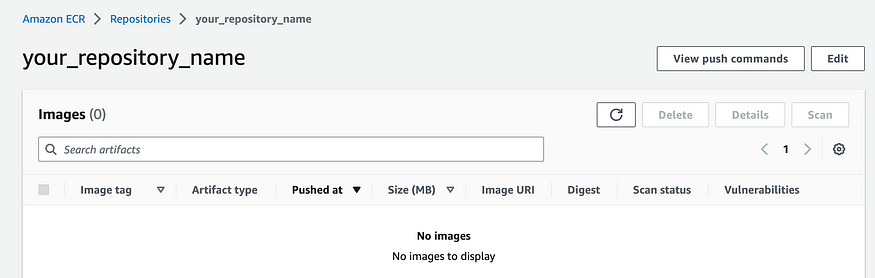

Push the Docker image to Amazon Elastic Container Registry (ECR)

- First, create a private repository in Amazon Elastic Container Registry where your image will be stored. Please follow the guideline to do so.

- Make sure that you have the latest version of the AWS CLI and Docker installed.

Go to the created repository and click the View push commands button to see the steps for pushing an image to your new repository. Make sure you are in the directory that contains the Dockerfile and run the commands one by one.

aws ecr get-login-password --region us-east-1 | docker login --username AWS --password-stdin XXXXXXXXXX.dkr.ecr.us-east-1.amazonaws.com

docker build -t your_repository_name .

docker tag your_repository_name:latest XXXXXXXXXX.dkr.ecr.us-east-1.amazonaws.com/your_repository_name:latest

docker push XXXXXXXXXX.dkr.ecr.us-east-1.amazonaws.com/your_repository_name:latest

If all goes well, you should be able to see your ECR image on AWS.
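The registry address in the commands above follows a fixed pattern built from the AWS account ID, the region, the repository name, and the tag. The helper below is a small sketch of that pattern; the function names and the sample account ID are made up for illustration.

```python
def ecr_registry(account_id, region):
    """Registry host used for `docker login`, e.g. <account>.dkr.ecr.<region>.amazonaws.com."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com"


def ecr_image_uri(account_id, region, repository, tag="latest"):
    """Full image URI used by `docker tag` and `docker push`."""
    return f"{ecr_registry(account_id, region)}/{repository}:{tag}"


if __name__ == "__main__":
    # 123456789012 is a placeholder account ID, not a real one.
    print(ecr_image_uri("123456789012", "us-east-1", "your_repository_name"))
```

The same URI is what the IMAGE value refers to when the SageMaker model is created, so building it in one place helps keep the account, region, and tag consistent.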

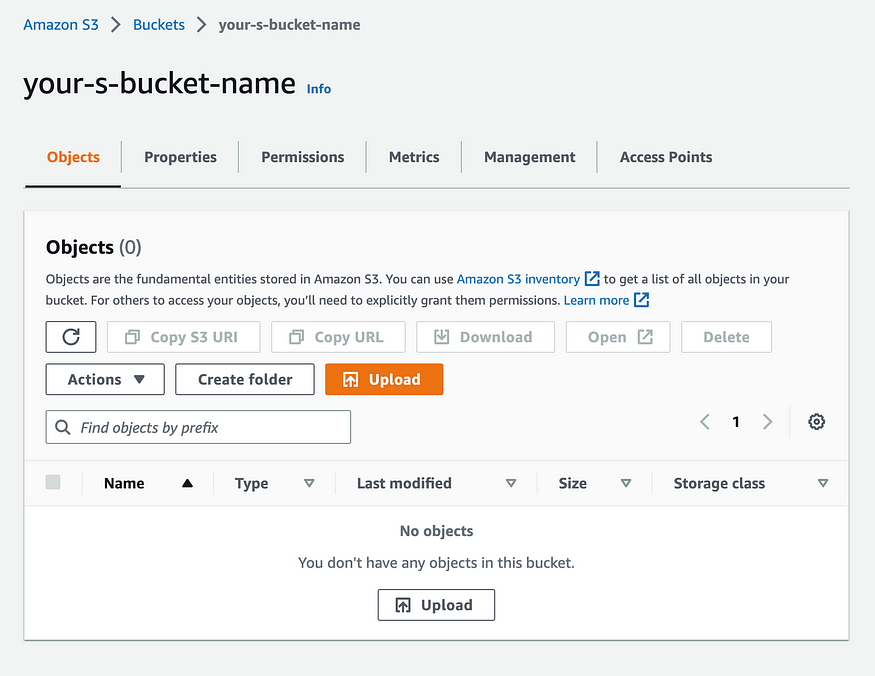

Save the model artifacts in an S3 bucket

Models must be packaged as compressed tar files (*.tar.gz) and saved in an S3 bucket. Let’s say your model files are stored in opt/ml/model/.

To compress the model, go to the opt/ml/ directory and run the following commands:

cd opt/ml/

tar -czvf model.tar.gz model

Upload it to your desired S3 location.
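SageMaker unpacks the archive into /opt/ml/model inside the container, so it is worth checking which paths the archive actually contains before uploading it. The sketch below reproduces the tar command with Python's tarfile module and lists the stored members; `pack_model` and `list_members` are names introduced here, and the default `arcname="model"` is an assumption chosen to match the command above.

```python
import tarfile
from pathlib import Path


def pack_model(model_dir, archive_path, arcname="model"):
    """Write model_dir into a gzip-compressed tar, like `tar -czvf model.tar.gz model`."""
    with tarfile.open(archive_path, "w:gz") as archive:
        # arcname controls the top-level folder name stored inside the archive
        archive.add(str(model_dir), arcname=arcname)
    return Path(archive_path)


def list_members(archive_path):
    """Return the sorted member paths stored in the archive."""
    with tarfile.open(archive_path, "r:gz") as archive:
        return sorted(archive.getnames())
```

For example, `list_members(pack_model("opt/ml/model", "model.tar.gz"))` shows every file prefixed with `model/`. Whether that extra level is what your loading code expects depends on the path it reads from, so compare the listing with the path used in main.py.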

Create an Amazon SageMaker model resource that refers to the Docker image in ECR

There are several options to deploy a model using SageMaker hosting services. You can deploy a model programmatically using an AWS SDK (for example, the SDK for Python (Boto3)), the SageMaker Python SDK, or the AWS CLI, or you can create a model interactively in the SageMaker console. This article presents the first of these options: the SDK for Python (Boto3). You can run the following commands locally.

Set the values for the execution role, image URI, model URL, and model name. Call sagemaker.create_model() to create the model resource.

# create_aws_model.py
import boto3

# Create the SageMaker and IAM clients
sagemaker = boto3.Session().client('sagemaker')
iam = boto3.client('iam')

ROLE = iam.get_role(RoleName='AWS_ROLE_NAME')['Role']['Arn']
IMAGE = "XXXXXXXXXX.dkr.ecr.us-east-1.amazonaws.com/your_repository_name:latest"
MODEL_URL = "s3://your-s3-bucket-name/model.tar.gz"
MODEL_NAME = "model-name"

# Create a model
sagemaker.create_model(
    ModelName=MODEL_NAME,
    ExecutionRoleArn=ROLE,
    PrimaryContainer={
        'Image': IMAGE,
        'ModelDataUrl': MODEL_URL
    }
)
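A few of these values are easy to get wrong, and the API call only rejects them after a round trip. The sketch below builds the create_model arguments and checks them first. The helper name is hypothetical, and the naming rule it encodes (letters, digits, and hyphens, up to 63 characters, no leading or trailing hyphen) is an assumption based on a reading of the CreateModel API reference, so verify it against the current documentation.

```python
import re

# Assumed naming rule for ModelName; check the CreateModel reference before relying on it.
MODEL_NAME_RE = re.compile(r"^[a-zA-Z0-9]([-a-zA-Z0-9]*[a-zA-Z0-9])?$")


def build_create_model_request(model_name, role_arn, image_uri, model_data_url):
    """Validate the inputs and return keyword arguments for create_model()."""
    if len(model_name) > 63 or not MODEL_NAME_RE.match(model_name):
        raise ValueError(f"Invalid model name: {model_name!r}")
    if not (model_data_url.startswith("s3://") and model_data_url.endswith(".tar.gz")):
        raise ValueError(f"Model data must be an s3:// URL to a .tar.gz file: {model_data_url!r}")
    return {
        "ModelName": model_name,
        "ExecutionRoleArn": role_arn,
        "PrimaryContainer": {"Image": image_uri, "ModelDataUrl": model_data_url},
    }
```

With a boto3 client at hand, the result can be passed straight through, for example `sagemaker.create_model(**build_create_model_request(MODEL_NAME, ROLE, IMAGE, MODEL_URL))`. Note that a name with underscores is fine for an ECR repository but would be rejected by this check as a model name.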

Create an Amazon SageMaker endpoint configuration

The next step is to create an Amazon SageMaker endpoint configuration that specifies the model and the resources to be used for inference.

# Create an endpoint configuration
endpoint_config_name = 'endpoint-config-name'

sagemaker.create_endpoint_config(
    EndpointConfigName=endpoint_config_name,
    ProductionVariants=[{
        'InstanceType': 'ml.t2.medium',
        'InitialInstanceCount': 1,
        'ModelName': MODEL_NAME,
        'VariantName': 'Variant-1'
    }]
)

Deploy your model

Finally, you are ready to deploy your model, that is, to create an endpoint that hosts it. Use the endpoint configuration specified above.

# Create an endpoint
endpoint_name = 'endpoint-name'

sagemaker.create_endpoint(
    EndpointName=endpoint_name,
    EndpointConfigName=endpoint_config_name
)
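create_endpoint() returns immediately, while the endpoint itself takes several minutes to start, so invoking it right away fails. The sketch below polls the endpoint status until it is ready. `wait_for_endpoint` is a hypothetical helper; it takes the describe function as an argument so that it can be exercised without AWS credentials, and the polling interval and limit are arbitrary defaults.

```python
import time


def wait_for_endpoint(describe, endpoint_name, poll_seconds=30, max_polls=60, sleep=time.sleep):
    """Poll describe(EndpointName=...) until the endpoint is InService or has failed."""
    for _ in range(max_polls):
        description = describe(EndpointName=endpoint_name)
        status = description["EndpointStatus"]
        if status == "InService":
            return status
        if status == "Failed":
            reason = description.get("FailureReason", "no reason reported")
            raise RuntimeError(f"Endpoint {endpoint_name} failed: {reason}")
        sleep(poll_seconds)
    raise TimeoutError(f"Endpoint {endpoint_name} was not ready after {max_polls} polls")
```

With the client from the previous steps, this would be called as `wait_for_endpoint(sagemaker.describe_endpoint, endpoint_name)`. Boto3 also ships a built-in waiter for the same purpose, `sagemaker.get_waiter('endpoint_in_service')`, which is probably the simpler choice when you do not need custom error handling.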

Use Boto3 to test your inference

Now for the final step. To make sure everything went well, create a test input and run it through the model. If you get an error, the AWS CloudWatch logs are a good place to debug.

import json

import boto3

# Client for the SageMaker runtime API, which serves inference requests
runtime = boto3.client("sagemaker-runtime")

endpoint_name = 'endpoint-name'  # the endpoint created in the previous step

test_input = [
    {
        "document_id": "1",
        "scope": "Ron is Harry's best friend",
    },
    {
        "document_id": "2",
        "scope": "Hermione was the best in her class",
    },
]

payload = json.dumps(test_input)

response = runtime.invoke_endpoint(
    EndpointName=endpoint_name,
    ContentType='application/json',
    Body=payload,
)

result = json.loads(response["Body"].read().decode())
print(result)

>> [{'prediction': [{'entity': 'I-PER', 'score': 0.9971058, 'index': 1, 'word': 'Ron', 'start': 0, 'end': 3}, {'entity': 'I-PER', 'score': 0.9923815, 'index': 3, 'word': 'Harry', 'start': 7, 'end': 12}]}, {'prediction': [{'entity': 'I-PER', 'score': 0.99259263, 'index': 1, 'word': 'Her', 'start': 0, 'end': 3}, {'entity': 'I-PER', 'score': 0.9645591, 'index': 2, 'word': '##mio', 'start': 3, 'end': 6}, {'entity': 'I-PER', 'score': 0.9782252, 'index': 3, 'word': '##ne', 'start': 6, 'end': 8}]}]
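In the output above, "Hermione" comes back as three WordPiece tokens (Her, ##mio, ##ne). If you want whole words on the client side, the pieces can be merged after the call. The function below is a minimal sketch with a made-up name; it averages the piece scores, which is one possible choice rather than the model's own aggregation.

```python
def merge_wordpieces(tokens):
    """Merge '##'-prefixed WordPiece continuations into the preceding token."""
    merged = []
    for token in tokens:
        if token["word"].startswith("##") and merged:
            previous = merged[-1]
            previous["word"] += token["word"][2:]  # drop the '##' marker
            previous["end"] = token["end"]
            previous["scores"].append(token["score"])
        else:
            merged.append({
                "entity": token["entity"],
                "word": token["word"],
                "start": token["start"],
                "end": token["end"],
                "scores": [token["score"]],
            })
    for entity in merged:
        scores = entity.pop("scores")
        entity["score"] = sum(scores) / len(scores)  # simple mean over the pieces
    return merged
```

Applied to the second prediction list above, this yields a single I-PER entity with the word "Hermione" spanning characters 0 to 8. The transformers pipeline can also group tokens on the server side through its aggregation_strategy argument, which may be preferable to post-processing if you control the inference code.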

Summary

We have provided you with 9 simple steps on how to deploy custom models on AWS SageMaker using FastAPI. This allows for greater flexibility and makes implementation a piece of cake.

Make sure that your model is operating correctly and efficiently after deployment. To quickly identify and address issues in the production system, you might want to use the monitoring tools integrated into the AWS ecosystem: SageMaker endpoints write logs to CloudWatch Logs and publish metrics to CloudWatch Metrics, which you can use to trigger alarms when certain thresholds are exceeded. By regularly updating your models and incorporating new data, you can improve their accuracy and ensure that they continue to provide value over time.
