As a cybersecurity architect, I know it is crucial to stay ahead of emerging technologies and their implications for safety and ethics. One such technology is Meta’s Llama 3, a state-of-the-art language model that generates human-like text.

In this blog post, we’ll explore the concept of guardrails in Llama 3 and how they ensure the model’s safe and ethical use.

What are Guardrails in Llama 3?

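Guardrails in Llama 3 are protective measures designed to prevent the model from generating harmful, unethical, or insecure content. They combine safety tuning of the model itself with companion tools that Meta released alongside it, such as Llama Guard 2 and Code Shield. These measures matter because AI capabilities are expanding rapidly and the potential for misuse is high.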

Why are Guardrails necessary?

AI models like Llama 3 can produce remarkably realistic text, which is both a strength and a potential risk. Without proper safeguards, these models could generate inappropriate content, misinformation, or even malicious code. Guardrails mitigate these risks by filtering out harmful content and keeping outputs within ethical standards.

Critical Guardrails in Llama 3

- Content Safety – Llama 3 deployments can classify and filter unsafe content, blocking text related to violence, self-harm, illegal activities, and other sensitive topics.

- Ethical and legal restrictions – the model is designed to avoid generating content that could lead to legal issues or ethical concerns, such as content related to illegal weapons, drugs, or sensitive personal information.

- Code Shield – a safeguard released alongside Llama 3 that filters model output at inference time to catch insecure code, helping ensure that generated code follows security best practices.

- Programmable Guardrails – developers can define additional guardrails to control the model’s behaviour more precisely, tailoring the AI’s outputs to specific use cases and maintaining ethical boundaries.
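One simple way to picture a developer-defined guardrail is a set of rules expressed as data, where each rule pairs a pattern with an action. The sketch below assumes that design: the `Rule` class, the rule names, and the `apply_rules` helper are hypothetical and are not part of any Meta or Hugging Face API. Keyword matching like this is only a first layer; in practice it would sit alongside a classifier rather than replace one.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """A hypothetical developer-defined guardrail rule."""
    name: str
    pattern: re.Pattern
    action: str  # "block" refuses the text; "redact" masks the matched span


RULES = [
    # Example scope rule: a customer-support bot should not give investment advice.
    Rule("investment_advice",
         re.compile(r"\b(buy|sell)\s+(stocks?|shares|crypto)\b", re.IGNORECASE),
         "block"),
    # Example privacy rule: mask anything that looks like an email address.
    Rule("email_address", re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"), "redact"),
]


def apply_rules(text: str, rules=RULES):
    """Apply rules in order; return (allowed, possibly modified text, triggered rule names)."""
    triggered = []
    for rule in rules:
        if not rule.pattern.search(text):
            continue
        triggered.append(rule.name)
        if rule.action == "block":
            return False, "Sorry, I can't help with that request.", triggered
        if rule.action == "redact":
            text = rule.pattern.sub("[REDACTED]", text)
    return True, text, triggered


print(apply_rules("Contact me at jane.doe@example.com"))
print(apply_rules("Should I sell shares today?"))
```

Keeping the rules in a list means a team can review, version, and extend them for a specific use case without touching the enforcement logic.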

Detailed breakdown of Guardrails

Content safety

Content safety in Llama 3 is handled by a classification system that screens both input prompts and generated responses. It flags content that falls into predefined categories of harm, such as:

- Violent Crimes – any content that promotes, endorses, or facilitates violence against individuals or groups, including terrorism and hate crimes.

- Non-Violent Crimes – this includes fraud, theft, and other illegal activities that do not involve direct violence but can cause significant harm.

- Sensitive Personal Information – protects privacy by preventing the generation of content that includes or infers personal data without consent.
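Llama Guard 2 reports its verdict as text. Our understanding of its model card is that the first line reads "safe" or "unsafe", and for unsafe content a second line lists the violated category codes (for example S1). The sketch below parses that format and maps the codes to the categories listed above. The code-to-name mapping covers only those categories and is an assumption to check against the Llama Guard 2 model card; `parse_verdict` and `Verdict` are hypothetical helpers.

```python
from dataclasses import dataclass, field

# Assumed subset of Llama Guard 2 category codes; verify against the model card.
CATEGORY_NAMES = {
    "S1": "Violent Crimes",
    "S2": "Non-Violent Crimes",
    "S6": "Privacy",  # covers sensitive personal information
}


@dataclass
class Verdict:
    safe: bool
    categories: list = field(default_factory=list)


def parse_verdict(raw: str) -> Verdict:
    """Parse a classifier reply: 'safe', or 'unsafe' followed by a line of codes like 'S1,S6'."""
    lines = [line.strip() for line in raw.strip().splitlines() if line.strip()]
    if not lines:
        raise ValueError("empty classifier response")
    if lines[0] == "safe":
        return Verdict(safe=True)
    if lines[0] != "unsafe":
        raise ValueError(f"unexpected verdict: {lines[0]!r}")
    codes = [c.strip() for c in lines[1].split(",")] if len(lines) > 1 else []
    names = [CATEGORY_NAMES.get(c, f"Unknown ({c})") for c in codes if c]
    return Verdict(safe=False, categories=names)


print(parse_verdict("safe"))
print(parse_verdict("unsafe\nS1,S6"))
```

Codes outside the mapping are reported as "Unknown (…)" instead of being silently dropped, so a taxonomy mismatch stays visible.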

Ethical and legal restrictions

Llama 3’s ethical guardrails are designed to support compliance with legal standards and ethical norms. These include:

- No promotion of illegal activities – the model is restricted from generating content related to illegal weapons, drugs, and other regulated substances.

- Respect for intellectual property – prevents the generation of content that infringes on copyrights or trademarks.

For more information on ethical use, refer to Hugging Face’s Overview of Llama 3 and TechRepublic’s Cheat Sheet on Llama 3.
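After content has been classified, an application still has to decide what to do with it. The sketch below shows one way to encode restrictions like these as a policy table that maps category names to actions, applying the strictest action when several categories are flagged. The category names, the `POLICY` table, and the severity levels are hypothetical choices made for illustration. They are not part of Llama 3 or Llama Guard 2.

```python
# Hypothetical action levels: a higher number means a stricter response.
SEVERITY = {"allow": 0, "warn": 1, "block": 2}

# Hypothetical policy mapping flagged categories to actions.
POLICY = {
    "Illegal Weapons": "block",
    "Regulated Substances": "block",
    "Intellectual Property": "warn",  # e.g. long verbatim quotes of copyrighted text
}


def decide(flagged_categories, policy=POLICY, default="block"):
    """Return the strictest action for the flagged categories.

    Categories the policy does not mention fall back to `default`,
    so anything unexpected is handled conservatively.
    """
    actions = [policy.get(category, default) for category in flagged_categories]
    return max(actions, key=SEVERITY.__getitem__, default="allow")


print(decide([]))
print(decide(["Intellectual Property"]))
print(decide(["Intellectual Property", "Illegal Weapons"]))
```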

Code Shield

Code Shield is a safeguard released alongside Llama 3 that focuses on secure code generation. It filters model output at inference time and scans for insecure code patterns so they can be blocked or flagged, which helps keep generated code in line with cybersecurity best practices. This matters most for developers who use Llama 3 to generate scripts or automate tasks.

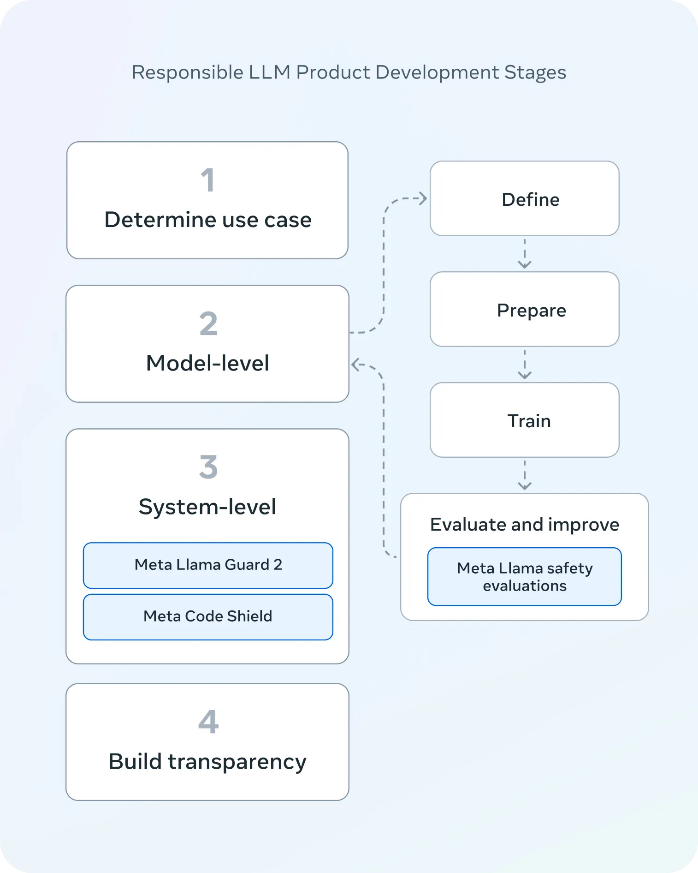
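The snippet below is not Code Shield and does not reproduce its API. It is a toy, regex-based analogue that shows the general shape of inference-time code filtering: scan the generated code for known-risky patterns, then recommend a treatment. The patterns, the `scan_generated_code` function, and the "ignore"/"warn"/"block" labels are illustrative assumptions. Real static analysis is far more thorough than string matching.

```python
import re
from dataclasses import dataclass

# Illustrative patterns only: (regex, description, treatment).
RISKY_PATTERNS = [
    (re.compile(r"\beval\s*\("), "use of eval() on possibly untrusted input", "block"),
    (re.compile(r"shell\s*=\s*True"), "subprocess call with shell=True", "block"),
    (re.compile(r"\bhashlib\.(md5|sha1)\s*\("), "weak hash function", "warn"),
    (re.compile(r"verify\s*=\s*False"), "TLS certificate verification disabled", "warn"),
]


@dataclass
class ScanResult:
    insecure: bool
    issues: list
    treatment: str  # "ignore", "warn" or "block"


def scan_generated_code(code: str) -> ScanResult:
    """Toy scanner: report matching risky patterns and the strictest treatment."""
    issues, treatment = [], "ignore"
    for pattern, description, action in RISKY_PATTERNS:
        if pattern.search(code):
            issues.append(description)
            # Escalate: "block" always wins; "warn" only upgrades from "ignore".
            if action == "block" or treatment == "ignore":
                treatment = action
    return ScanResult(bool(issues), issues, treatment)


# Usage: withhold a generated snippet that should be blocked.
generated = "import subprocess\nsubprocess.run(user_cmd, shell=True)\n"
result = scan_generated_code(generated)
if result.treatment == "block":
    generated = "# Code withheld: " + "; ".join(result.issues)
print(generated)
```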

Technical deep dive – implementing Guardrails

For those interested in the technical implementation, Llama 3 deployments combine content classification and response filtering to enforce these guardrails. The key safeguard is Llama Guard 2, a separate classifier model built on Llama 3 8B that evaluates both user inputs and model outputs to determine whether they are safe.

Llama Guard 2 reaches its decision by looking at the probability of the first token it generates, since its verdict begins with "safe" or "unsafe". The resulting unsafe probability is compared with a threshold, and content judged unsafe is flagged and filtered out. Code Shield, in turn, uses static analysis to scan generated code for insecure patterns, so that vulnerable snippets can be blocked or flagged before they reach users.
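The first-token scoring step can be illustrated with plain arithmetic. A softmax turns the classifier's logits at the first generated position into probabilities. The probability of the token that starts "unsafe" serves as the unsafe score, which is then compared with a threshold. The three-token vocabulary, the token ids, the logits, and the 0.5 threshold are made-up values for illustration. Real token ids come from the Llama Guard 2 tokenizer, and the threshold is a deployment choice.

```python
import math
from typing import Sequence


def unsafe_probability(first_token_logits: Sequence[float], unsafe_token_id: int) -> float:
    """Softmax over the first-position logits, returning P(first token == 'unsafe')."""
    peak = max(first_token_logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in first_token_logits]
    return exps[unsafe_token_id] / sum(exps)


def is_flagged(first_token_logits: Sequence[float], unsafe_token_id: int,
               threshold: float = 0.5) -> bool:
    """Flag content when the unsafe probability reaches the threshold."""
    return unsafe_probability(first_token_logits, unsafe_token_id) >= threshold


# Toy vocabulary (made-up ids): 0 = "safe", 1 = "unsafe", 2 = any other token.
logits = [1.0, 2.0, -3.0]
p = unsafe_probability(logits, unsafe_token_id=1)
print(f"P(unsafe) = {p:.3f}, flagged = {is_flagged(logits, 1)}")
```

With these logits the unsafe probability is about 0.73. The content is flagged at a 0.5 threshold but would pass at 0.9. Raising or lowering the threshold trades missed harmful content against false refusals.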

Implementing Guardrails

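Developers can shape the model’s behaviour with the Hugging Face transformers library. The example below sets a system prompt that defines the assistant’s persona and passes generation parameters that control length, stopping, and sampling. These settings steer the output but are not safety filters on their own; for moderation, they are combined with classifiers such as Llama Guard 2: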

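```python
import torch
from transformers import pipeline

model_id = "meta-llama/Meta-Llama-3-8B-Instruct"

# Load the instruction-tuned 8B model in bfloat16 on a CUDA GPU.
pipe = pipeline(
    "text-generation",
    model=model_id,
    model_kwargs={"torch_dtype": torch.bfloat16},
    device="cuda",
)

# The system prompt sets the assistant's persona and behavioural boundaries.
messages = [
    {"role": "system", "content": "You are a pirate chatbot who always responds in pirate speak!"},
    {"role": "user", "content": "Who are you?"},
]

# Stop at either the generic end-of-sequence token or Llama 3's end-of-turn token.
terminators = [
    pipe.tokenizer.eos_token_id,
    pipe.tokenizer.convert_tokens_to_ids("<|eot_id|>"),
]

outputs = pipe(
    messages,
    max_new_tokens=256,        # cap the length of the reply
    eos_token_id=terminators,
    do_sample=True,            # sample instead of greedy decoding
    temperature=0.6,           # lower values make output more deterministic
    top_p=0.9,                 # nucleus sampling over the top 90% of probability mass
)

# With chat-style input, "generated_text" holds the whole conversation;
# the last entry is the assistant's reply.
assistant_response = outputs[0]["generated_text"][-1]["content"]
print(assistant_response)
```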
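The temperature=0.6 and top_p=0.9 arguments shape how each next token is sampled. They make output more or less varied, but they do not filter content by themselves. The sketch below illustrates the usual idea behind the two settings on a toy distribution: temperature scaling followed by nucleus (top-p) filtering. It is a simplified illustration, not the transformers library’s implementation, and the logits are made up.

```python
import math
import random


def nucleus(logits, temperature=0.6, top_p=0.9):
    """Return (token_ids, probs) kept after temperature scaling and top-p filtering."""
    # Temperature below 1 sharpens the distribution; above 1 flattens it.
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]
    # Keep the smallest set of most likely tokens whose cumulative probability reaches top_p.
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in ranked:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    return kept, [probs[i] for i in kept]


def sample_next_token(logits, temperature=0.6, top_p=0.9, rng=None):
    """Draw one token id from the nucleus; random.choices normalises the weights."""
    rng = rng or random.Random()
    ids, weights = nucleus(logits, temperature, top_p)
    return rng.choices(ids, weights=weights, k=1)[0]


toy_logits = [2.0, 1.0, 0.5, -1.0]  # made-up scores for a four-token vocabulary
print(nucleus(toy_logits))
print(sample_next_token(toy_logits, rng=random.Random(0)))
```

With these made-up logits, the default settings (temperature 0.6, top_p 0.9) keep only the two most likely tokens. Raising the temperature to 5.0 flattens the distribution enough that all four tokens stay in the nucleus.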

Further reading and resources

To delve deeper into the technical aspects and implications of AI guardrails, here are some recommended resources:

- Meta’s official documentation

- Reporting issues with the model

- Reporting risky content generated by the model

How can we help?

Guardrails are essential for Llama 3 and other models, and we can help tailor them to your organization’s specific needs and security measures.

Key points covered include:

- An overview of what AI guardrails are and why they are crucial for maintaining AI systems’ integrity and ethical use.

- Practical steps for integrating robust guardrails into Llama 3, ensuring it aligns with your organization’s ethical standards and security protocols.

- Strategies for applying similar safety measures to other AI models within your organization, emphasizing the importance of a consistent approach to AI security.

- How to align AI guardrails with your existing security policies to create a cohesive and comprehensive security strategy.

By prioritizing the implementation of these safeguards, we can help ensure that AI technologies are used responsibly and securely, reflecting your company’s values and security measures.

Conclusion

Guardrails in Llama 3 represent a significant step forward in ensuring AI’s safe and ethical use. As these technologies continue to evolve, the importance of robust safeguards cannot be overstated. By understanding and implementing these guardrails, we can harness the power of AI while minimizing its risks, making it a valuable tool for developers and users.

Feel free to share your thoughts and experiences with Llama 3 in the comments below. Let’s continue the conversation about the future of safe and ethical AI!
