In the last decade, the use of artificial intelligence in our daily lives has increased dramatically. Year after year, more advanced AI tools are being built that change how we do many things, above all how we work. An AI tool can be thought of as an extra pair of hands that helps humans perform challenging tasks more efficiently. One tool that has become very popular within the programming community is GitHub Copilot.

This article explains what GitHub Copilot is, how it works, and what problems can be found in this tool.

What is GitHub Copilot?

GitHub Copilot is an AI system developed by GitHub and OpenAI, and powered by the OpenAI Codex model, that acts as your pair programmer. Pair programming is an agile technique where two programmers work together to build software. One programmer, referred to as the driver, writes the code, while the other, referred to as the navigator, observes and reviews the code as it is being written. Copilot supports multiple programming languages and does very well with:

- Python,

- JavaScript,

- TypeScript,

- Ruby,

- Java,

- and Go.

Code Faster

As programmers, we often get “coder’s block”: we get stuck trying to come up with the best solution to implement, or we know what we want to implement but have forgotten the syntax and end up searching Google endlessly. With GitHub Copilot, your programming partner is the AI system, which runs as a plugin in your IDE and aims to help you code faster by analyzing the context of the code you are working on and suggesting entire lines of code or even whole functions.

Better Tests, Better Code

Every programmer knows that testing code is essential to make sure software works properly. Unfortunately, writing tests can be a daunting task, which can lead to low-quality tests and low test coverage.

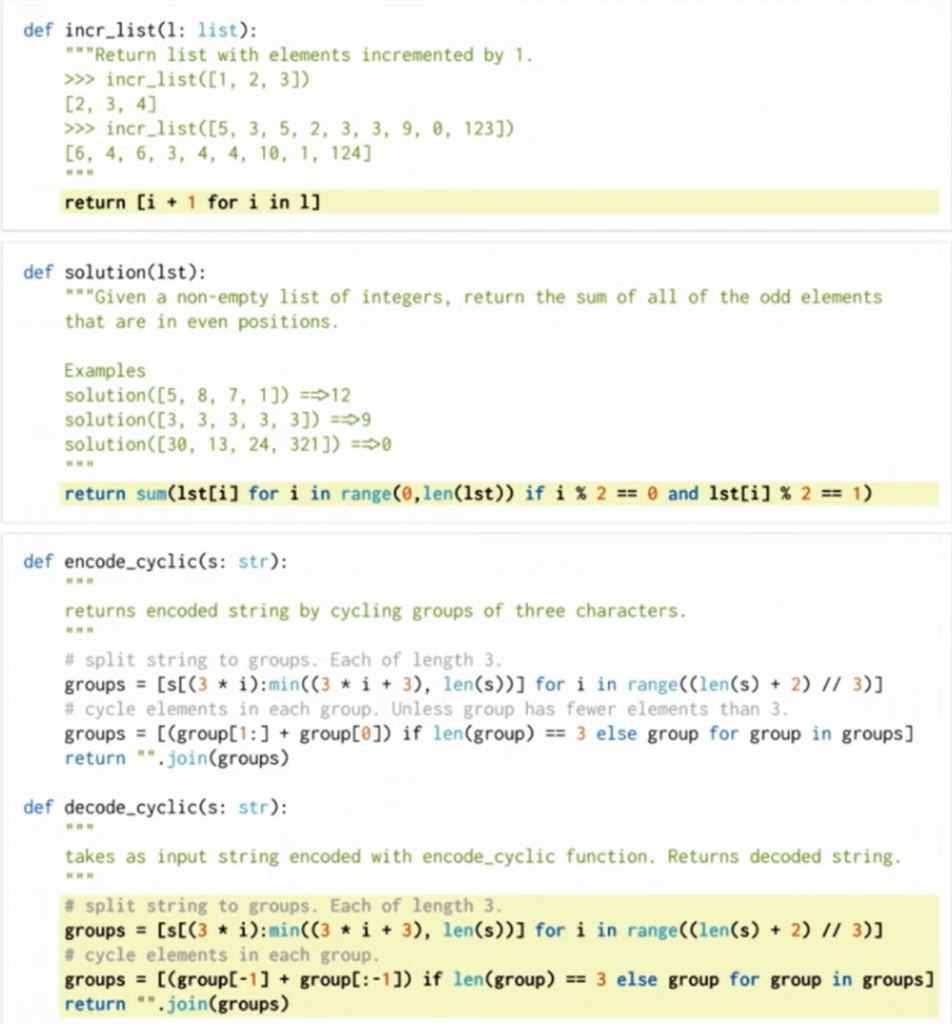

Luckily, GitHub Copilot can help. If you write a few comments describing what the test should look like, Copilot can suggest code that best fits the description in those comments. This can also motivate programmers to write more readable, higher-quality comments, so that the intention of the code is clear not only to Copilot but also to any programmers who may read the code in the future.

Additionally, once you have written a few tests, Copilot can predict what other tests might be needed and generate that code for you as well.
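To make this concrete, here is a hand-written illustration (not actual Copilot output) of descriptive comments followed by the kind of tests a tool like Copilot might suggest. The `slugify` function and both tests are hypothetical and exist only for this example.

```python
import re


def slugify(title: str) -> str:
    """Convert a title to a lowercase, hyphen-separated URL slug."""
    # Replace every run of non-alphanumeric characters with a single hyphen.
    slug = re.sub(r"[^a-z0-9]+", "-", title.lower())
    # Drop hyphens left over at the start or end of the slug.
    return slug.strip("-")


# Test that slugify lowercases the title and replaces spaces with hyphens.
def test_slugify_basic():
    assert slugify("Hello World") == "hello-world"


# Test that slugify drops punctuation and trims leading/trailing hyphens.
def test_slugify_punctuation():
    assert slugify("  What is GitHub Copilot?  ") == "what-is-github-copilot"
```

The more precisely each comment states the expected behavior, the less guesswork is left to the tool, and the easier the suggested test is to review.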

How does GitHub Copilot work?

GitHub Copilot is available as a plugin/extension for popular IDEs (e.g., Visual Studio Code). Once it is installed, the user starts writing code and/or provides detailed comments describing the desired functionality. The plugin analyzes what has been written in the IDE and sends it to the GitHub Copilot service.

The service then connects to the OpenAI Codex model to generate suggested code based on that information. The model, which was trained on open-source code from public GitHub repositories, tries to produce the code that best matches the information it received, and the best results are sent back to the user.

Under the Hood

OpenAI is best known for creating GPT-3, a language model with 175 billion parameters that uses deep learning to produce human-like text.

A language model is a probability distribution over sequences of words. In its simplest form, it predicts the next word based on the words that came before it. Models of this type require a very large dataset to train on, such as all of Wikipedia, various books, articles, etc. The model then learns to encode the meanings of words and longer text passages by trying to complete missing words in a sentence.
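The idea of next-word prediction can be sketched with a toy bigram model that only counts which word follows which. This is a deliberately simplified illustration, not how GPT-3 or Codex are implemented; the function names and the tiny corpus are made up for the example.

```python
from collections import Counter, defaultdict


def train_bigram_model(text: str) -> dict:
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    follower_counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        follower_counts[current_word][next_word] += 1
    return follower_counts


def next_word_probabilities(model: dict, word: str) -> dict:
    """Turn the raw follower counts for `word` into probabilities."""
    counts = model.get(word.lower(), Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}


def predict_next_word(model: dict, word: str):
    """Return the most likely next word, or None if `word` was never followed."""
    probabilities = next_word_probabilities(model, word)
    return max(probabilities, key=probabilities.get) if probabilities else None


corpus = "the cat sat on the mat and the cat slept"
model = train_bigram_model(corpus)
print(next_word_probabilities(model, "the"))  # "cat" is twice as likely as "mat"
print(predict_next_word(model, "the"))  # cat
```

Large language models replace these simple counts with a neural network and condition on far more than one previous word, but the output is still a probability distribution over what comes next.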

Initially, there were ideas to use the GPT-3 model itself to complete code from plain-English input, but the results were not great. GPT-3 is a general-purpose model that mainly tries to complete text, not code. When asked to generate code, GPT-3 returned code that was not indented, was missing special characters such as brackets and semicolons, and required modification.

Instead, OpenAI built a new model, called Codex, that was trained in much the same way as GPT-3 but specifically on code rather than on large corpora of general text. Codex has up to 12 billion parameters and was trained on public code from GitHub, about 159 GB of data. The model was additionally fine-tuned on interview-style problems (e.g., LeetCode).

To evaluate the model, a custom dataset called HumanEval was created, with 164 programming problems and about 8 test cases per problem. Each problem consists of a function signature, a docstring, and a reference implementation of the function body that serves as the ground truth. The dataset can be accessed on GitHub.
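The structure of such a problem, and how a candidate solution can be checked against its tests, might look roughly like the sketch below. The problem itself and the dictionary keys are hypothetical and are not taken from the HumanEval dataset.

```python
# A hypothetical problem in the style described above (not a real dataset entry).
problem = {
    # Function signature plus docstring: this is what the model is shown.
    "prompt": (
        "def running_max(numbers):\n"
        '    """Return a list where element i is the largest of numbers[:i + 1]."""\n'
    ),
    # Reference body that serves as the ground truth.
    "reference_body": (
        "    result, best = [], None\n"
        "    for n in numbers:\n"
        "        best = n if best is None else max(best, n)\n"
        "        result.append(best)\n"
        "    return result\n"
    ),
    # Unit tests that decide whether a completion counts as correct.
    "tests": (
        "assert running_max([]) == []\n"
        "assert running_max([1, 3, 2, 5, 4]) == [1, 3, 3, 5, 5]\n"
        "assert running_max([-2, -5]) == [-2, -2]\n"
    ),
}


def passes_tests(prompt: str, body: str, tests: str) -> bool:
    """Define the function from prompt + body, then run the tests.

    Any exception, including a failed assertion, counts as a failure.
    """
    namespace = {}
    try:
        exec(prompt + body, namespace)
        exec(tests, namespace)
    except Exception:
        return False
    return True


wrong_body = "    return sorted(numbers)\n"
print(passes_tests(problem["prompt"], problem["reference_body"], problem["tests"]))  # True
print(passes_tests(problem["prompt"], wrong_body, problem["tests"]))  # False
```

Note that `exec` runs arbitrary code, so a real evaluation harness would need to execute model-generated completions in a sandbox rather than in the current process.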

The model's results on this dataset were then compared with those of other language models, such as earlier versions of GPT, GPT-Neo, and Tabnine. More detailed information about the training process and how the model was created can be found in the research paper: Evaluating Large Language Models Trained on Code.

How good is GitHub Copilot?

The technology has a lot of potential. Nat Friedman, the former CEO of GitHub, states:

Copilot helps developers to quickly discover alternative ways to solve problems, write tests, and explore new APIs without having to tediously tailor a search for answers on sites like Stack Overflow and across the internet.

Many programmers have shown how, with the help of Copilot, they were able to write complete programs from scratch with little effort.

Does this mean that Copilot can write perfect code? Not exactly. GitHub Copilot tries to understand the user's intent and to generate the best code it can, but the code it suggests will not always work or even make sense.

According to OpenAI’s paper, Codex, the model behind Copilot, gives the correct answer on the first attempt only about 29% of the time. The code that Copilot generates is often poorly factored and fails to take full advantage of existing solutions. It generates poor code because of how language models work.
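Success rates like this are reported in the Codex paper using a metric called pass@k: the probability that at least one of k sampled solutions passes the unit tests for a problem. A minimal sketch of the estimator, assuming `n` generated samples of which `c` are correct, is shown below. The function name and the example numbers are chosen for illustration.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate the chance that at least one of k samples is correct.

    n: number of samples generated for the problem
    c: number of those samples that passed the tests
    k: number of samples drawn (without replacement) from the n
    """
    if not (0 <= c <= n and 1 <= k <= n):
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    # comb(n - c, k) / comb(n, k) is the probability that all k drawn
    # samples are incorrect; comb returns 0 when k > n - c.
    return 1.0 - comb(n - c, k) / comb(n, k)


# With 29 correct samples out of 100, a single draw succeeds about 29% of the time.
print(round(pass_at_k(n=100, c=29, k=1), 2))  # 0.29
# Allowing more attempts raises the chance that at least one is correct.
print(round(pass_at_k(n=100, c=29, k=10), 2))
```

This is why a single suggestion may be wrong even when the model is capable of producing a correct solution among several attempts.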

The model has been trained on public code on GitHub. Most code on GitHub is fairly old (by software standards) and generally written by average programmers. Copilot generates its best guess at what previous programmers would have written if they were writing the same file as the current user. The model does not have any sense of what’s correct and what’s good. OpenAI talks about this in their Codex paper:

As with other large language models trained on a next-token prediction objective, Codex will generate code that is as similar as possible to its training distribution. One consequence of this is that such models may do things that are unhelpful for the user.

Additionally, there is about a 1% chance that the model will suggest small snippets of code that come directly from its training data on GitHub. This can potentially lead to legal trouble, especially if reusing the code violates its license.

However, the algorithm is constantly improving by recording whether each suggestion generated by Copilot is accepted or not. To get the most out of Copilot, it helps to divide the code into smaller functions and to provide meaningful function names, parameters, and docstrings.
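As an illustration of that advice, the sketch below splits a task into two small functions with descriptive names, typed parameters, and docstrings. The functions are hypothetical examples; the point is the amount of intent that the names and docstrings communicate before any body is written.

```python
from typing import Sequence


def celsius_to_fahrenheit(celsius: float) -> float:
    """Convert a temperature from degrees Celsius to degrees Fahrenheit."""
    return celsius * 9 / 5 + 32


def average_temperature_fahrenheit(celsius_readings: Sequence[float]) -> float:
    """Return the mean of the readings, converted to degrees Fahrenheit.

    Raises ValueError if celsius_readings is empty.
    """
    if not celsius_readings:
        raise ValueError("celsius_readings must not be empty")
    fahrenheit_readings = [celsius_to_fahrenheit(c) for c in celsius_readings]
    return sum(fahrenheit_readings) / len(fahrenheit_readings)


print(average_temperature_fahrenheit([0, 100]))  # 122.0
```

A signature such as `def process(data)` with no docstring gives a code-completion model, and a human reviewer, far less to work with.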

Summary

GitHub Copilot helps to improve the work of every programmer by suggesting new lines of code, autocompleting code snippets, and even writing entire functions based on detailed descriptions as comments. This can definitely help programmers be more productive by reducing the small manual and repetitive tasks and allowing them to focus on what is more important.

The technology is not perfect, as the programmer needs to review and test the code that is being suggested, just like writing any other code. However, as the model continues to learn and improve year after year, it will be interesting to see how this model will perform in the future.
