This article shares the experience gained while building a proof of concept (PoC) of a semantic search engine for Polish court rulings. The project’s goal was to determine the possible gains over the classical lexical-search solution that our customer used daily.

The most challenging parts were dealing with the highly specific language of the documents and building a text-similarity machine learning model without an annotated dataset.

Lexical search methods

Lexical search methods are word-frequency-based approaches, which means that documents are ranked according to how often the query’s terms occur in them, regardless of the terms’ proximity and context within the document. Hence, they may produce many false matches. Let’s assume a user is looking for “a park in Barcelona worth visiting”. The method will probably return the correct document containing “Park Güell in Barcelona is a place everyone must see”. Still, it may as well answer with “Taxis Park near the stadium of FC Barcelona”. Both documents are equally good from the algorithm’s perspective because both include the terms “park” and “Barcelona”.

However, only the first one corresponds to the actual intention of the user. Although it’s an artificial example, it clearly illustrates the limitation of lexical search.
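The Barcelona example can be reproduced with a few lines of Python. This is a minimal sketch of a purely term-frequency-based scorer, not the customer's actual system; the `STOP_WORDS` set and the function names are assumptions made for illustration.

```python
import re
from collections import Counter

# Hypothetical, tiny stop-word list used only for this illustration.
STOP_WORDS = {"a", "in", "the", "of", "is"}

def tokenize(text):
    """Lowercase the text and split it into word tokens, dropping stop words."""
    return [t for t in re.findall(r"\w+", text.lower()) if t not in STOP_WORDS]

def term_frequency_score(query, document):
    """Sum how often each distinct query term occurs in the document."""
    counts = Counter(tokenize(document))
    return sum(counts[term] for term in set(tokenize(query)))

query = "a park in Barcelona worth visiting"
docs = [
    "Park Güell in Barcelona is a place everyone must see",
    "Taxis Park near the stadium of FC Barcelona",
]
scores = [term_frequency_score(query, d) for d in docs]
print(scores)  # both documents receive the same score
```

Because the scorer only counts matching terms, it cannot tell the tourist attraction from the taxi stand.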

Modern semantic approaches aspire to outperform lexical methods and promise to capture the real intention and context of search queries. Unlike lexical techniques, they don’t rely solely on keyword matching but exploit deep neural networks trained on massive corpora and adapted for text-similarity tasks. Hence, they are expected to produce more accurate results.

How To Capture Text Similarity?

Transformer-based models have reached human-level performance in many challenging text processing tasks. In particular, BERT-based models (Devlin et al., 2018) have been widely applied thanks to their finetuning capabilities. As they are pre-trained on massive corpora, one could expect them to immediately capture the concept of text similarity and produce text embeddings directly applicable to similarity tasks.

Unfortunately, it appears that “raw” text embeddings are outperformed even by much simpler models like GloVe (Pennington et al., 2014) vectors averaged over all words! Luckily, further supervised fine-tuning may help to improve these disappointing results.
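To make the "averaged word vectors" baseline concrete, here is a minimal sketch of mean pooling followed by cosine similarity. The two-dimensional `toy_vectors` are made up for illustration and are not real GloVe embeddings; the function names are likewise assumptions.

```python
import math

def average_embedding(tokens, vectors):
    """Average the vectors of all known tokens (mean pooling)."""
    dim = len(next(iter(vectors.values())))
    known = [vectors[t] for t in tokens if t in vectors]
    if not known:
        return [0.0] * dim
    return [sum(v[i] for v in known) / len(known) for i in range(dim)]

def cosine(a, b):
    """Cosine similarity between two vectors; 0.0 if either has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

# Made-up vectors, NOT real GloVe values.
toy_vectors = {"court": [1.0, 0.0], "ruling": [0.0, 1.0], "judge": [1.0, 1.0]}

sentence_a = average_embedding(["court", "ruling"], toy_vectors)
sentence_b = average_embedding(["judge"], toy_vectors)
similarity = cosine(sentence_a, sentence_b)
```

With real pretrained vectors, the same two functions give the sentence-level baseline that the "raw" BERT embeddings are compared against.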

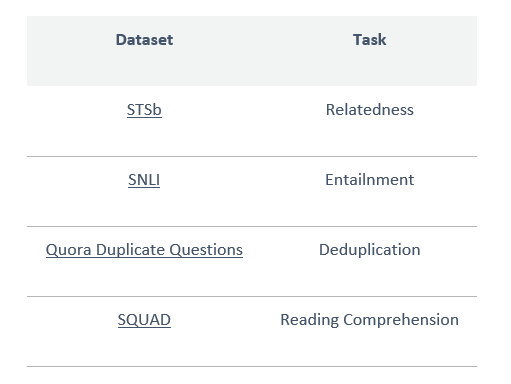

There are plenty of annotated datasets (at least for English) that may help to fine-tune a pretrained model for a text-similarity task, but they vary in their interpretation of text similarity. Some treat text similarity as textual entailment, where the truth of one sentence needs to follow from the other.

Alternatively, textual agreement might be expressed in terms of reading comprehension, where the task is to determine if a given text fragment answers the stated question. Another idea is to consider similarity as a specific duplication problem. The table below presents some of the available datasets and corresponding similarity tasks.

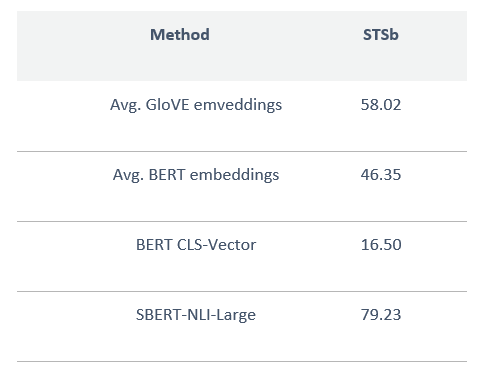

In the literature, text similarity models are often compared using the Semantic Textual Similarity Benchmark (STSb). It defines similarity as relatedness: a score from 0 to 5, reflecting how much two sentences are related to each other in terms of their semantic, syntactic, and lexical properties. The model performance is expressed as a correlation coefficient between predictions and ground truths.
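As an illustration of this evaluation, the sketch below computes the Pearson correlation between predicted and annotated scores. The choice of Pearson (rather than Spearman, which is also commonly reported) and the sample numbers are assumptions; the values are invented and are not results from this project.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

# Invented example: gold relatedness scores and a model's predictions.
gold = [4.8, 3.0, 1.2, 0.5, 2.5]
predicted = [4.5, 3.4, 1.0, 1.1, 2.0]
print(round(pearson(gold, predicted), 3))
```

A value close to 1 means the model orders and spaces sentence pairs much like the human annotators did.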

The following table presents the performance of GloVe, BERT, and SBERT (a model trained for text similarity) on the STS benchmark. It’s easy to notice that SBERT clearly outperforms the “raw” approaches, which demonstrates the need for task-specific adaptation. It also shows that CLS-vectors are not the best choice…

Lack of Annotated Data

Some language domains are significantly different from everyday language. Documents created by lawyers, scientists, or pharmacists use distinctive vocabulary and contain lengthy and detailed sentences. Hence, to capture the nuances of such domain-specific text similarity, one would require a domain-specific dataset. Unfortunately, such datasets are rare, especially for less common languages like Polish.

Therefore, one of the most significant challenges in our project was the lack of an annotated dataset for our text-similarity problem. Ultimately, we managed to get 450 query/answer pairs that we could use for testing purposes and to better understand the requirements, but we had to turn to unsupervised approaches for training.

Domain Adaptation

At this point, one may ask: why not use a general-purpose language model adapted to text-similarity tasks, e.g., HerBERT (Mroczkowski et al., 2021), a BERT model for Polish, finetuned on the STSb dataset for Polish? It would indeed work; however, as presented by Gururangan et al. (2020), adapting models to a particular domain leads to significant performance gains. Moreover, Wang et al. (2021) show that unsupervised approaches trained on the domain data outperform supervised generic models.

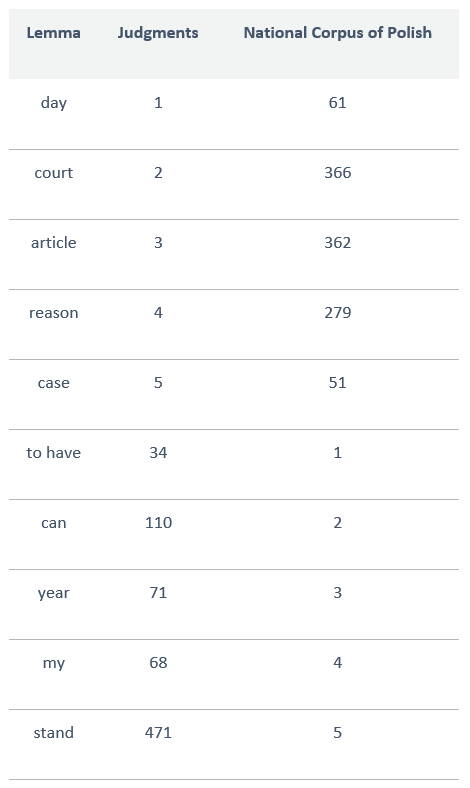

Those findings were especially interesting, as the domain language of our problem was markedly different from everyday Polish. While performing the initial data analysis, we extracted the 10K most frequent lemmas from the judgment documents and compared them with the 10K most frequent lemmas from the National Corpus of Polish (excluding stop words). We found just a 34% overlap between those two sets!
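A comparison of this kind can be sketched as follows. The exact definition of "overlap" is not spelled out here, so the sketch assumes it is the size of the intersection of the two top-K sets divided by K, and that both corpora contain at least K distinct lemmas. The function names and the toy lemma lists are hypothetical.

```python
from collections import Counter

def top_k_lemmas(lemmas, k, stop_words=frozenset()):
    """Return the set of the k most frequent lemmas, ignoring stop words."""
    counts = Counter(lemma for lemma in lemmas if lemma not in stop_words)
    return {lemma for lemma, _ in counts.most_common(k)}

def overlap_ratio(lemmas_a, lemmas_b, k, stop_words=frozenset()):
    """Share of the top-k lemmas that both corpora have in common."""
    top_a = top_k_lemmas(lemmas_a, k, stop_words)
    top_b = top_k_lemmas(lemmas_b, k, stop_words)
    return len(top_a & top_b) / k

# Toy, already-lemmatized corpora standing in for the real ones.
judgments = ["sąd", "sąd", "wyrok", "wyrok", "pozew"]
everyday = ["dom", "dom", "sąd", "sąd", "rok"]
ratio = overlap_ratio(judgments, everyday, k=2)
```

In practice the lemma lists would come from running a lemmatizer over both corpora, with K set to 10 000.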

Table 3 presents the top 5 lemmas from the judgments corpus and from the National Corpus of Polish, along with their respective positions in the other dataset. Moreover, we’ve also noticed that the judgments’ sentences are significantly longer than those of everyday Polish and are more frequently written in the passive voice.

Thus, considering the specificity of our domain’s language and the effectiveness of unsupervised approaches shown in the aforementioned papers, we’ve decided to turn our attention to unsupervised methods. Moreover, we had access to 350 000 court rulings, which make up an 8 GB corpus.

Unsupervised Semantic Search

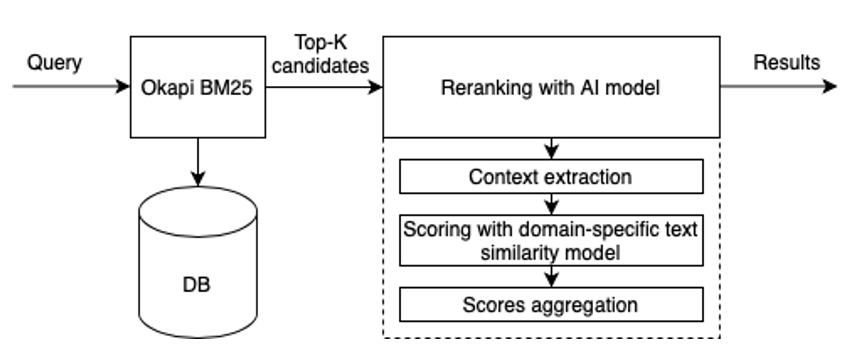

Building a semantic search engine is about constructing an efficient model for text similarity and scaling the solution to perform an effective search among thousands or millions of documents. Therefore, there is a need to make the right architectural decisions. We dealt with roughly 350 000 documents, which, once split into text passages, yielded 16 million data points. Thus, we decided to apply the typical retrieve-rerank pattern often used in such systems. It is a two-step solution made of a quick but not very accurate method that retrieves a list of potential matches and a precise but slow technique that re-ranks the retrieved candidates.

We decided to rely on the Okapi BM25 algorithm from Elasticsearch with the spaCy Polish lemmatizer for the retrieve part and then rerank the top-K candidates with the semantic similarity model.
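The retrieve-rerank pattern can be sketched in plain Python. This is only an illustration under stated assumptions: the small `bm25_scores` function is a stand-in for Elasticsearch's BM25 (using a common BM25 formulation with `k1=1.2` and `b=0.75`), documents are assumed to be already lemmatized token lists, and `rerank_score` is a hypothetical callable standing in for the semantic similarity model.

```python
import math
from collections import Counter

def bm25_scores(query_terms, docs, k1=1.2, b=0.75):
    """Score each tokenized document against the query with Okapi BM25."""
    n_docs = len(docs)
    avg_len = sum(len(d) for d in docs) / n_docs
    # Number of documents containing each term.
    doc_freq = Counter(term for d in docs for term in set(d))
    scores = []
    for d in docs:
        tf = Counter(d)
        score = 0.0
        for term in query_terms:
            if tf[term] == 0:
                continue
            idf = math.log(1 + (n_docs - doc_freq[term] + 0.5) / (doc_freq[term] + 0.5))
            length_norm = k1 * (1 - b + b * len(d) / avg_len)
            score += idf * tf[term] * (k1 + 1) / (tf[term] + length_norm)
        scores.append(score)
    return scores

def retrieve_and_rerank(query_terms, docs, rerank_score, top_k=2):
    """Retrieve top_k candidates by BM25, then reorder them with rerank_score."""
    lexical = bm25_scores(query_terms, docs)
    candidates = sorted(range(len(docs)), key=lambda i: lexical[i], reverse=True)[:top_k]
    return sorted(candidates, key=lambda i: rerank_score(query_terms, docs[i]), reverse=True)

docs = [
    ["park", "barcelona", "must", "see"],
    ["taxi", "park", "stadium", "barcelona"],
    ["museum", "madrid"],
]
query = ["park", "barcelona"]
# Hypothetical re-ranker: a real system would call the semantic model here.
prefers_stadium = lambda q, d: float("stadium" in d)
order = retrieve_and_rerank(query, docs, prefers_stadium)
```

The cheap lexical step narrows millions of passages down to a handful, so the expensive model only has to score the top-K candidates.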

Unfortunately, none of the described unsupervised methods could be directly applied to our task, as we dealt with a highly asymmetric search problem. The models presented in this article were designed to work with sentences of similar (symmetric) length, and in such circumstances, they perform well. However, they provide disappointing results once the queries are significantly shorter than the answers (asymmetric). While collecting the requirements, we noticed that the users form short non-factoid questions and expect explanatory answers that often span several sentences.

We experimentally determined that a single query made of several words requires a text passage of a hundred words on average to answer it. Hence, we had to somehow mitigate the length asymmetry between queries and answers to make the models work.

Our approach might be summarized as replacing the BM25 scores with semantic scores computed for the retrieved text passages. We designed a method that calculates semantic scores of the terms found by BM25 based on their context with respect to the query and aggregates these partial results to score the entire text passage. We used BERT trained on our 8 GB corpus as a masked language model (MLM), and we finetuned it for text similarity using STSb translated to Polish. However, instead of using the model to construct the embeddings, we predict the similarity score directly given the query and the contexts of the found terms. The whole solution was deployed on Microsoft Azure.
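The idea of scoring term contexts and aggregating them can be sketched as below. This is one possible reading of the description, not the exact method used in the project: the window `radius`, the use of `max` as the aggregation, and the `similarity` callable (a stand-in for the finetuned BERT model) are all assumptions.

```python
def context_windows(tokens, matched_terms, radius=5):
    """Yield the token window around every occurrence of a matched term."""
    for i, token in enumerate(tokens):
        if token in matched_terms:
            yield tokens[max(0, i - radius): i + radius + 1]

def passage_score(query, tokens, matched_terms, similarity, radius=5):
    """Score a passage as the best similarity between the query and any context.

    `similarity` is a hypothetical callable (query, context_text) -> float;
    taking the maximum is an assumed aggregation, a mean would also be possible.
    """
    scores = [
        similarity(query, " ".join(window))
        for window in context_windows(tokens, matched_terms, radius)
    ]
    return max(scores) if scores else 0.0

def word_overlap(query, context):
    """Toy similarity used only to make the sketch runnable."""
    q, c = set(query.split()), set(context.split())
    return len(q & c) / len(q | c)

passage = "the court ruled that the park fee was unlawful".split()
score = passage_score("park fee", passage, {"park", "fee"}, word_overlap, radius=1)
```

Comparing the short query with short contexts rather than with the whole passage is what reduces the length asymmetry between queries and answers.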

The results

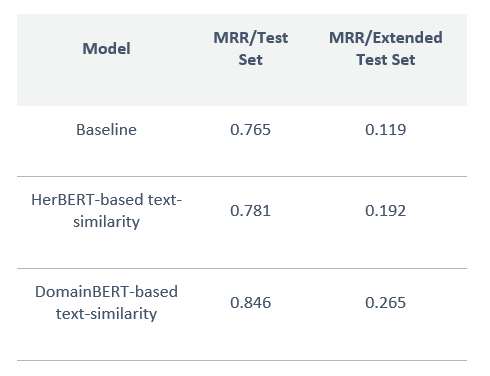

To evaluate the solution’s performance, we relied on the MRR (Mean Reciprocal Rank) metric, which is widely used in evaluating information retrieval systems. At first, we focused on the test set of 450 documents and noticed a significant improvement provided by the semantic approach, especially when using the semantic similarity model pretrained on the domain-specific data.
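For readers unfamiliar with the metric, MRR averages the reciprocal of the rank at which the correct document appears, counting a query as 0 when the document is not returned at all. The following is a minimal sketch assuming exactly one labeled document per query; the document identifiers are invented.

```python
def mean_reciprocal_rank(ranked_results, relevant_ids):
    """Average of 1/rank of the labeled document over all queries."""
    total = 0.0
    for results, target in zip(ranked_results, relevant_ids):
        for rank, doc_id in enumerate(results, start=1):
            if doc_id == target:
                total += 1.0 / rank
                break  # only the first hit counts
    return total / len(ranked_results)

# Invented rankings for three queries; the labeled answer is always "a".
ranked = [["a", "b", "c"], ["b", "a", "c"], ["c", "b", "d"]]
labels = ["a", "a", "a"]
mrr = mean_reciprocal_rank(ranked, labels)  # (1 + 1/2 + 0) / 3
```

Note that an unlabeled but relevant document ranked above the labeled one lowers the score, even though the user would be satisfied with it.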

Next, we extended the test set with all documents from the court rulings corpus, which made the search task more difficult, as we expected the system to identify the 450 documents from the test set among the other 350 000 records. In such conditions, the semantic search showed its robustness once again and appeared to be twice as good as the previous lexical system used by the client (baseline). What might be worrying are the low MRR values for this experiment.

However, this might be explained by the fact that some of the 350 000 documents were better matches for the constructed queries but were simply not labeled. Meanwhile, the MRR was calculated only on the 450 labeled documents. Manual verification of the results confirmed this hypothesis.

I believe that the presented MRR values don’t reflect the real performance of the system, as the test set was too small and could be biased. Secondly, the extended test set was not fully annotated. However, in each case, the relative comparison of different methods shows the robustness of the semantic approach. What’s most important from the business perspective is the very positive feedback from our customer after one month of using the developed solution.

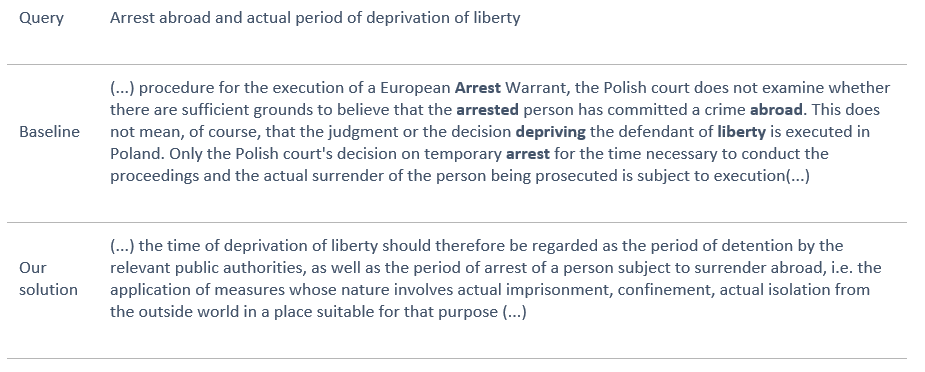

For the lexical approach, the found keywords are in bold. The results were truncated for readability. I’m not a lawyer, so I’m not sure if the previous system’s result is correct. However, I have no doubts about the correctness of the second result. The text was translated from Polish with the help of DeepL.

Conclusions

Adapting pretrained masked language models to particular domains and then further finetuning on a text-similarity auxiliary dataset seems to yield surprisingly good results. Even though one can’t directly use such methods in scenarios where the length of the query is much shorter than the length of the answer, it’s relatively easy to construct an algorithm leveraging the text-similarity models and handling such asymmetric cases.

Thanks to pragmatic architectural decisions and thoughtful research of the existing unsupervised text-similarity methods, we showed that domain-specific semantic search produces considerably better results than lexical approaches.
