For a long time, the focus of companies has been on data.

Professional entities don’t want to make decisions based on opinions or hunches, instead, they are guided by facts. It doesn’t matter whether we’re talking about making strategic decisions, whether we want to check sales performance, or maybe confirm the address of one of our contractors – both small and large decisions need data to be able to take them consciously.

Without data, you are just another person with an opinion

W.E.Deming

Why Modern Data Warehouses were created?

In recent years we have seen a significant increase in data volumes, sources, formats and frequency of delivery. Data warehouses have stopped relying solely on structured data sources, but have increasingly begun to store and use semi- and unstructured data, such as logs or bitmaps, for data processing and enrichment and as a source for machine learning models.

As a result, the standard data warehouse model, customized to store and analyze structured data, was no longer sufficient and could not respond to the growing demand for data consumption and storage.

At the same time, the trend in which more services are being moved from on-premises to the cloud was increasingly evident, and in this regard, databases are no exception. The development of the cloud has also brought technologies that separate storage from computing power, which in turn has made it possible to create solutions that are both cost- and performance-efficient.

For the above reasons, an architecture called Modern Data Warehouse (MDW) emerged. It incorporates a traditional data warehouse, provides the ability to work with ML and data science models directly on files from source systems, and allows the creation of advanced reports for business users.

In this post, I will try to introduce the implementation of the Modern Data Warehouse architecture in the Azure cloud and discuss its various components.

The implementation of the Modern Data Warehouse architecture in the Azure cloud

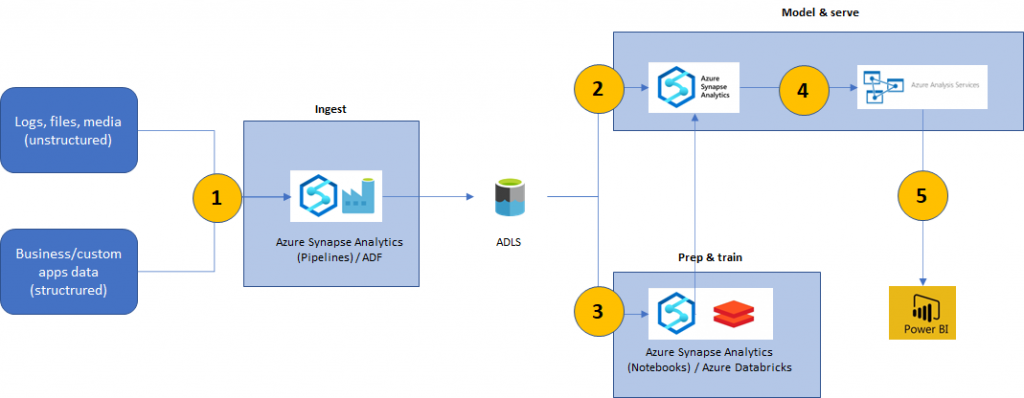

Below is a sample diagram showing the implementation of the architecture with the data flows between components highlighted:

First step – data storage in Azure Data Lakes

The entry point is structured data, i.e. data organized in tabular form that has a specific structure and is consistent in terms of the type of data stored, coming from applications and business systems, and unstructured data, i.e. data that does not have the aforementioned structure, such as logs, text files or bitmaps. They can reside in systems or servers located in the cloud or on-premises.

Using Pipelines functionality from either Azure Synapse Analytics or Azure Data Factory, we can create processes that download files to Azure Data Lake, where all source files will be stored. Both Azure Data Factory and Pipelines in Azure Synapse Analytics have built-in functionality to run specific processes according to a specific schedule or in response to specific events, such as creating a file on another data lake.

Some applications or business systems have their own processes that can export files to a specific location in the cloud. On the one hand, this simplifies the whole process, while on the other hand, the security and access issues that these applications should then have must also be taken into consideration.

Azure Data Lake is an Azure cloud file storage service designed for the scalability and efficiency of big data processing. Data can be stored in any size and format. The introduction of file system features helps speed up file reading and metadata management, as well as managing file access at the individual folder level. Thanks to built-in rules, it is possible to run data processing pipelines (steps 2. and 3.) immediately after the fact of writing a file to a given storage account.

Second step – moving data from the data lake to the staging table

Data that has been stored on Azure Data Lake and is to be loaded into the SQL pool in Azure Synapse Analytics must first be moved into so-called staging tables. Their job is to store the current version of the data model and prepare it before loading it into the target tables.

A common practice is to prepare a staging table in having an identical structure to the target table, and then compare the contents of the staging table with the currently held data in the database. Depending on the scenario, the data can be added as new rows to the target tables, used to update existing rows or used to identify rows to be deleted.

To move data from the data lake to the staging table in the SQL pool in Azure Synapse Analytics in the fastest and most efficient way, it is recommended to use PolyBase technology. This is a technology that allows you to access and query external data, such as that stored in the Azure Data Lake service, using T-SQL. As an alternative to Polybase, data can be copied via properly configured pipelines in Azure Data Factory or Azure Synapse Analytics.

Once the data is stored in the staging table, running the appropriate stored procedures allows the data to be moved to the target tables. In this step, the data can undergo various transformations to match the model definitions in the target tables.

Third step – using data in the train ML models

Not all data needs to be transferred directly to the database, some of it can be used as input for processes that train ML models. These processes will be run through the Notebooks functionality in Azure Synapse Analytics or Azure Databricks. It should be noted that these are mostly complex compute-intensive processes and therefore dedicated clusters running on Apache Spark technology will be used to support them.

The results from the trained models will be saved to staging tables in the SQL pool in Azure Synapse Analytics, from where, after running the appropriate procedures, the data can be transferred directly to the target tables or used for data transformation.

Fourth step – calculating data models

The data collected in the target tables will be the basis for calculating data models defined in Azure Analysis Services (AAS).

AAS is a tool for modeling data by defining relationships between data entities, creating measures, dimensions, hierarchies, and security features within a tabular data model. Since AAS recommends defining data models according to Kimball’s methodology, it is recommended to design the database according to its guidelines, which will allow visualization tools to use the data effectively.

Fifth step – data analysis in Power BI

End users analyze aggregated data in Power BI, using defined dimensions and measures in data models, or create their own using the Data Analysis Expressions (DAX) language. Power BI has a wide range of visualization tools and allows users to connect to a variety of data sources located both in the cloud and on-prem, including text files, relational and non-relational databases or even streaming data sources, among others.

Advantages of the Modern Data Warehouse solution

What are the benefits of implementing MDW? First of all, we get an efficient solution that can deal with the huge amount of data flowing in every day. The architecture used is fully scalable, which makes it possible to adjust the computing power to the current demand.

The solutions used to make it possible to combine data coming from different sources and in different formats, and on their basis create advanced visualizations and reports that allow users to grasp relevant information and draw conclusions from the collected data.

The individual components of the solution can be configured in such a way as to ensure high availability of the whole even in the event of failure of a single component, and built-in disaster recovery mechanisms will help protect against accidental data loss.

Other possibilities

The above scheme is indicative only and may be subject to modification. For smaller databases (smaller than 1 TB), it will make more sense to use, for example, Azure SQL Database or Azure Database for PostgreSQL, as most likely due to the size of the data, we will not be able to take full advantage of all the MPP capabilities offered by the SQL pool in Azure Synapse Analytics.

While Azure Analysis Services is an excellent tool for big data analysis, it is also relatively expensive. For this reason, it is also an element that is often overlooked for implementation and replaced by directly querying databases or creating models directly in Power BI. Power BI itself can also be replaced by another visualization tool, such as Qlik or Tableau.

In this case, connectivity to particular types of data sources or individual user preferences plays a big role.

***

If you are interested in the subject of data warehousing, I recommend another article (PL) by our expert: Architektura Lakehouse, koncepcja Delta Lake w usłudze DataBricks.

Leave a comment